Automatic Testing - Programmers Are Still The Problem

Written by Alex Armstrong

Friday, 16 June 2017

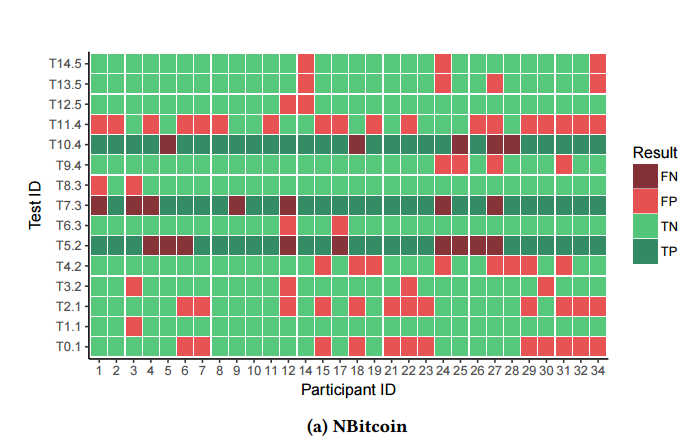

White-box test generator tools can help you find bugs automatically by generating combinations of inputs that are likely to give the wrong answer. However, there is a problem: how do you know when you have the wrong answer?

A recent study by David Honfi and Zoltan Micskei of Budapest University of Technology and Economics puts automatic testing under the spotlight:

"White-box test generator tools rely only on the code under test to select test inputs, and capture the implementation’s output as assertions. If there is a fault in the implementation, it could get encoded in the generated tests. Tool evaluations usually measure fault-detection capability using the number of such fault-encoding tests. However, these faults are only detected, if the developer can recognize that the encoded behavior is faulty."

They carried out a study using 54 grad students, two open source projects and the IntelliTest tool, which is still better known as Pex. The tool generated test cases and the subjects were asked to identify when the output was indicative of a fault. The results were somewhat surprising:

"The results showed that participants incorrectly classified a large number of both fault-encoding and correct tests (with median misclassification rate 33% and 25% respectively). Thus the real fault-detection capability of test generators could be much lower than typically reported, and we suggest to take this human factor into account when evaluating generated white-box tests."

You can see the confusion matrix for the NBitcoin project's tests:

FP = False Positive, FN = False Negative, TP = True Positive, TN = True Negative

What this means is that in practice white-box testing is subject to programmer errors in its own right. By examining videos of the subjects trying to interpret the tests, the researchers noted that they tended to use debugging methods to further explore the code and clarify the meaning of the test.

An "exit" survey also indicated the sorts of difficulties the subjects felt they had with the task.
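To make the idea of a fault-encoding test and the FP/FN/TP/TN labels concrete, here is a minimal Python sketch. It is not how IntelliTest works internally and it uses none of the study's data: the functions `spec_abs_diff`, `impl_abs_diff` and `generate_tests`, the seeded fault and the reviewer verdicts are all hypothetical. It also assumes that "positive" means "the reviewer flagged the test as encoding a fault", which the article does not spell out.

```python
from collections import Counter


def spec_abs_diff(a: int, b: int) -> int:
    """Intended behaviour (hypothetical specification): absolute difference."""
    return abs(a - b)


def impl_abs_diff(a: int, b: int) -> int:
    """Implementation under test, with a deliberately seeded fault."""
    return a - b  # fault: abs() is missing, so the result is negative when a < b


def generate_tests(func, inputs):
    """Mimic a white-box generator: run the code and record whatever it
    returns as the expected value of an assertion."""
    return [(args, func(*args)) for args in inputs]


def encodes_fault(test, spec) -> bool:
    """A test encodes a fault when its recorded expectation disagrees with the spec."""
    args, expected = test
    return expected != spec(*args)


def outcome(is_fault_encoding: bool, flagged_as_wrong: bool) -> str:
    """Assumed labelling: 'positive' means the reviewer flagged the test as wrong."""
    if is_fault_encoding:
        return "TP" if flagged_as_wrong else "FN"
    return "FP" if flagged_as_wrong else "TN"


inputs = [(5, 3), (3, 5), (4, 4)]
tests = generate_tests(impl_abs_diff, inputs)

# Every generated test passes against the implementation it was derived from,
# so running the tests alone cannot reveal the fault.
all_pass = all(impl_abs_diff(*args) == expected for args, expected in tests)

# Ground truth: which tests captured faulty behaviour?
truth = [encodes_fault(t, spec_abs_diff) for t in tests]

# Hypothetical reviewer verdicts (True = "this test looks wrong").
verdicts = [True, False, False]

counts = Counter(outcome(t, v) for t, v in zip(truth, verdicts))

# Misclassification rates, computed separately for the two kinds of test.
fault_encoding_total = counts["TP"] + counts["FN"]
correct_total = counts["FP"] + counts["TN"]
missed_fault_rate = counts["FN"] / fault_encoding_total
false_alarm_rate = counts["FP"] / correct_total

print(all_pass, truth, dict(counts), missed_fault_rate, false_alarm_rate)
```

The point of the sketch is that the test for `(3, 5)` asserts the faulty value and still passes, so the fault only counts as detected if the person reading the test recognises that the expected value is wrong.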

The key finding is:

"The implication of the results is that the actual fault-finding capabilities of the test generator tools could be much lower than reported in technology-focused experiments."

Of course, it would be better if the programmer could be taken out of the loop, but this would require the white-box tester to work out what the result of the test should be, and this would require a lot of AI.

More Information

Classifying the Correctness of Generated White-Box Tests: An Exploratory Study

Related Articles

Code Digger Finds The Values That Break Your Code

Code Hunt - New Coding Game From Microsoft Research

Debugging and the Experimental Method


Last Updated ( Friday, 16 June 2017 )