Google Announces Framework For Data Science Predictions

Written by Kay Ewbank

Thursday, 13 January 2022

Google has released Prediction Framework, which the developers describe as a time saver for data science prediction projects. The framework provides a way to put together a reusable project that includes all the steps of a prediction project: data extraction, preparation, filtering, prediction and post-processing. It is intended to make it easier to deliver predictions in a generic and reusable way, and is aimed mainly at marketing projects.

It can be used to specify the choices made in each of these phases. It can also handle the operational aspects of running the project, such as backfilling, throttling for API limits, synchronisation, storage and reporting.
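To make the idea of a reusable chain of phases concrete, here is a minimal sketch in plain Python. It is not the Prediction Framework's API: every function name, field name and the scoring rule are hypothetical, and the `predict` step is a stand-in for a call to a hosted model.

```python
from typing import Callable, Dict, List

Row = Dict[str, float]
Phase = Callable[[List[Row]], List[Row]]


def prepare(rows: List[Row]) -> List[Row]:
    """Derive the features the model expects (a single made-up feature here)."""
    return [
        {**row, "spend_per_visit": row["spend"] / max(row["visits"], 1)}
        for row in rows
    ]


def keep_active(rows: List[Row]) -> List[Row]:
    """Filter out customers with no visits (hypothetical filtering rule)."""
    return [row for row in rows if row["visits"] > 0]


def predict(rows: List[Row]) -> List[Row]:
    """Placeholder for the model call; a real project would query a hosted model."""
    return [{**row, "score": min(row["spend_per_visit"] / 100.0, 1.0)} for row in rows]


def post_process(rows: List[Row]) -> List[Row]:
    """Keep only the fields that would be written to final storage."""
    return [
        {"customer_id": row["customer_id"], "score": round(row["score"], 2)}
        for row in rows
    ]


def run_pipeline(rows: List[Row], phases: List[Phase]) -> List[Row]:
    """Run each phase in order, feeding the output of one into the next."""
    for phase in phases:
        rows = phase(rows)
    return rows


# Stand-in for the extraction phase: rows already pulled for one date.
extracted = [
    {"customer_id": 1, "spend": 120.0, "visits": 3},
    {"customer_id": 2, "spend": 0.0, "visits": 0},
    {"customer_id": 3, "spend": 450.0, "visits": 2},
]

results = run_pipeline(extracted, [prepare, keep_active, predict, post_process])
print(results)
```

Because each phase has the same shape (rows in, rows out), a different project can swap in its own preparation or filtering logic without touching the runner, which is the kind of reuse the framework is aiming for.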

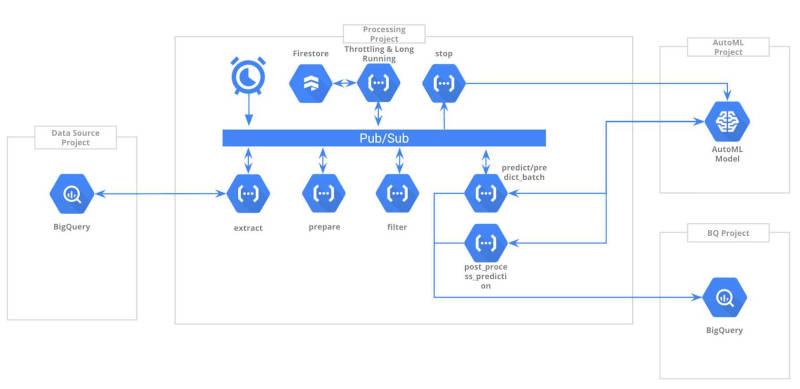

The developers say that up to 80% of the development time can be saved by using this framework to implement new marketing prediction projects. The thinking behind the framework comes from what Google customers say they need to work with marketing platforms like Google Ads. Making sure the ads work relies on analyzing first-party data, performing predictions on that data and using the results to drive platforms like Google Ads. The Prediction Framework team says the ETL and prediction pipelines are very similar regardless of what the information is being used for.

To use Prediction Framework you specify the input data source, the logic to extract and process the data, and a ready-to-use Vertex AutoML model along with the right feature list. The framework then takes charge of creating and deploying the required artifacts.

The Prediction Framework was built to be hosted on the Google Cloud Platform, and it uses Cloud Functions to do all the data processing. It uses Firestore, Pub/Sub and Schedulers for the throttling system and to coordinate the different phases of the predictive process, Vertex AutoML to host your machine learning model, and BigQuery as the final storage for your predictions.

In the preparation phase, once the transactions have been extracted for one specific date, the data is picked up from the local BigQuery and processed according to the specs of the model. It is then queried and filtered, and once this has been completed, the prediction is called using the Vertex API. You can apply a formula to the AutoML batch results to tune the value or to apply thresholds. Once the data is ready, it is stored in BigQuery within the target project.

Prediction Framework is available on GitHub now.
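As an illustration of the post-processing step, where a formula is applied to the prediction results to tune the value or apply thresholds, here is a minimal sketch. The function name, its parameters and the specific rule (scale, then drop values under a floor, then cap) are assumptions made for the example and are not taken from the framework.

```python
from typing import Optional


def tune_prediction(
    predicted: float,
    multiplier: float = 1.0,
    floor: float = 0.0,
    cap: Optional[float] = None,
) -> float:
    """Apply a hypothetical tuning formula and thresholds to one predicted value."""
    value = predicted * multiplier  # tune the raw model output
    if value < floor:
        return 0.0  # below the lower threshold: treat as no value
    if cap is not None and value > cap:
        return cap  # above the upper threshold: clamp
    return value


print(tune_prediction(10.0, multiplier=0.5, floor=1.0, cap=20.0))
print(tune_prediction(1.0, multiplier=0.5, floor=1.0))
print(tune_prediction(100.0, multiplier=0.5, cap=20.0))
```

Keeping this step as a small pure function makes it easy to test the business rule separately from the model call and the storage write.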
More Information

Prediction Framework On GitHub

Prediction Framework On Google


Last Updated ( Thursday, 13 January 2022 )