Intel RealSense - What Can YOU Do With It?

Written by Sue Gee

Monday, 08 September 2014

There is still time to come up with an idea that could win you big bucks in the Intel RealSense App Challenge. If you want inspiration, there is now plenty on offer, and you can also reserve a RealSense developer kit if you want to be sure of obtaining one.

Intel's new RealSense camera is a follow-on from last year's perceptual computing initiative - and it has made significant progress in terms of technology. But why should you take RealSense seriously? I suppose one of the biggest reasons is that it is Intel behind the technology. What this means is that not only is the RealSense camera small, cheap and capable, it is also going to be built into ultrabooks and other portable devices. This could very well be the coming wave of 3D and gesture input.

The spec of the new SDK means that apps really will be able to see, hear and respond to the environment in ways we could previously only dream of. And that is where the Intel RealSense App Challenge, with $1 Million in cash prizes, comes into the picture. Intel is asking developers to come up with ideas for apps that use the new capabilities of the RealSense platform - and in the first phase of the contest, which has a deadline of October 1st, 2014, all you have to do is sign up and submit an idea that uses the RealSense camera SDK. But the SDK won't be available until after October 1st! So how can we come up with workable ideas? To help fill that information gap, Intel recently ran two webinars which explained how the platform will enter the market and provided details of both the camera and the SDK.

The camera itself is very small and, while ultimately it is going to be built into Ultrabooks, notebooks, 2 in 1s and all-in-one PCs from several manufacturers, for the purposes of the development phase of the app challenge it is a separate unit. The camera has a 1080p RGB sensor plus an infrared laser and infrared sensor, and is optimized for close-range interactivity at distances of 0.2 to 1.2 meters from the camera.

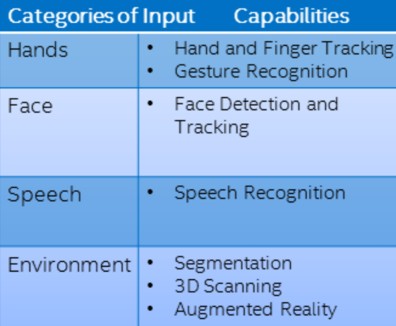

It is the combination of the camera's resolution and its SDK that makes the platform so powerful. For example, it has 22-point hand and finger tracking and responds to 9 static and dynamic mid-air gestures. For face detection it uses 78-point landmark detection of facial features and offers pulse estimation - which might suggest an idea for an app. Emotion recognition and face recognition are features that are "coming post-beta".

Speech recognition includes command and control and dictation, giving lots of scope for apps where you don't want users to have to rely on keyboard or mouse. There is also text to speech, so that the computer can be given a voice, opening up lots of new potential roles.

Among the features associated with understanding the environment, 3D object, face and room scanning, together with scene perception, are going to be post-beta, but you can get a long way with 2D/3D object tracking. Background removal is a feature that suggests possibilities for games and for immersive collaboration, such as presentations.

If the features of the SDK haven't already sparked loads of ideas, have a look at what other people have already come up with. The second webinar, Enabling a More Natural Future For Your Application User Interfaces, which is available online if you sign up to it, features four apps, originally developed with previous versions of the SDK, each of which took advantage of different aspects of it.

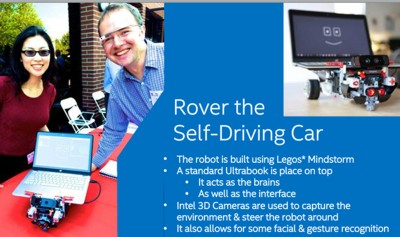

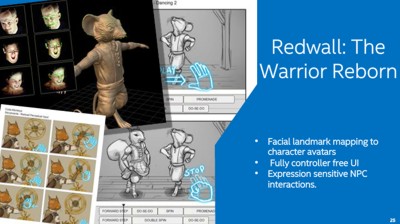

At around 15 minutes you'll find an idea from Martin Wojtczyk for a self-driving car. He built this using Lego Mindstorms with an Ultrabook as its brains and the camera as its vision system. The robot car is controlled by voice and gestures and responds to the environment, negotiating its way around the obstacles in its path. Next, Justin Link tells us about integrating gesture and voice commands in a puzzle-oriented game that also used Unity 3D. Andres Martinez has a 3D Head Scanner which he reckons will be greatly enhanced by the new SDK, and Chris Skaggs of Code Monkeys talks about making controller-free games using menu and in-game voice commands. Code Monkeys is now working on a project to recognise and teach American Sign Language, which of course needs hand and finger tracking. It is also working on a game that relies on facial landmark mapping and will also use emotion detection.

Chris Skaggs also takes part in this webinar at around 30 minutes:

Earlier in the webinar (from 19:30) there's a full discussion of the different categories for the App Challenge, making the important point that if you have an idea that doesn't fall into one of the boxes Intel still want to hear about it.

There are some other novel ideas in these videos, starting with one in which hand gestures in three dimensions create not only a display but a soundscape:

Finger tracking is used in this game - simple but fun:

In this one Intel's Eric Mantion demonstrates bringing the real-world environment, by way of wooden building blocks, into the virtual world. His demo is a game, but he also discusses other scenarios in which capturing real-world data in this way could be applicable:

Intel is hoping for loads of ideas both from developers who have experience with its Perceptual Computing SDK and also from those new to its contests. Accordingly it has two separate tracks in the RealSense App Challenge. There is an invitation-only Ambassador track for those who progressed to the second round of one of the previous competitions and a Pioneer track that is open to anyone over 18, either as an individual or a team or company. There's a long list of countries included, among them the United Kingdom, Kenya and Nigeria. All entries have to be in English.

In the first, Ideation, phase of the contest you have to fill in a form. You choose which category your idea fits into, provide an application name and its overview, and explain how it will use the RealSense SDK. You can upload a file, which could be a longer document or an image, or a compressed file containing multiple items, but this is optional.

Up to 1000 entrants from the Pioneer track will be selected on the basis of this initial submission to go forward to the Development Phase, scheduled to start on October 14, 2014 and finish on January 20, 2015 - although there is an Early Submission deadline with 50 prizes of $1,000 on the Pioneer track.

The cash prizes for the Intel RealSense App Challenge all come in the Development Phase, with a $25,000 Grand Prize for the Pioneer track and $50,000 for the Ambassador track. The top scoring demo in each of the five categories will be awarded $25,000 (Pioneer) / $50,000 (Ambassador), with 10 second place awards of $10,000 (Pioneer) / $20,000 (Ambassador). The incentive for making a submission in the Ideation Phase is that all those chosen to go forward, up to 1000 Pioneers and 300 Ambassadors, receive the Intel RealSense Developer Kit, i.e. the camera and its SDK.

If you don't want to participate but do want the Developer Kit, you need to reserve one (or up to 25 per reservation), at a cost of $99.00, before October 1st. At least one of the I Programmer team has signed up, so look out for more information as soon as the camera and SDK are launched.



Last Updated ( Friday, 28 September 2018 )