Demystifying GPU Terminology

Written by Nikos Vaggalis

Friday, 17 January 2025

The developers at Modal have created the GPU Glossary to help themselves and others get to grips with terminology related to NVIDIA GPU hardware and software. They have managed to collect, clean, normalize and present the dispersed information on the subject.

The rationale behind the glossary's creation is to solve a problem the people at Modal ran into when working with GPUs: the documentation is fragmented, making it difficult to connect concepts at different levels of the stack, like Streaming Multiprocessor Architecture, Compute Capability, and nvcc compiler flags. To collect, amend and present that information they went to great lengths, gathering material from official documentation and dedicated Discord channels, and even trawling through old-fashioned books.
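To make the link between those three levels a little more concrete, here is a minimal sketch, not taken from the glossary, of how a Compute Capability number relates to a Streaming Multiprocessor architecture family and to an nvcc `-gencode` flag. The lookup table is deliberately partial, and the helper names `nvcc_gencode_flag` and `describe` are hypothetical, introduced only for illustration.

```python
# Partial, illustrative lookup: Compute Capability -> SM architecture family.
# This is an assumption-laden sample, not a complete list.
SM_ARCHITECTURES = {
    "7.0": "Volta",
    "7.5": "Turing",
    "8.0": "Ampere",
    "8.6": "Ampere",
    "8.9": "Ada Lovelace",
    "9.0": "Hopper",
}


def nvcc_gencode_flag(compute_capability: str) -> str:
    """Build an nvcc -gencode flag for one Compute Capability such as "8.0"."""
    major, minor = compute_capability.split(".")
    tag = f"{int(major)}{int(minor)}"  # "8.0" becomes "80"
    return f"-gencode arch=compute_{tag},code=sm_{tag}"


def describe(compute_capability: str) -> str:
    """Tie the three concepts together in one human-readable line."""
    family = SM_ARCHITECTURES.get(
        compute_capability, "unknown (not in this partial table)"
    )
    flag = nvcc_gencode_flag(compute_capability)
    return f"CC {compute_capability}: {family}, {flag}"


if __name__ == "__main__":
    print(describe("8.0"))
```

The point of the sketch is only that the same version number surfaces in the hardware name, the capability table and the compiler flag, which is exactly the kind of cross-reference that is hard to find in one place.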

Content-wise, the material is split into three main categories:

Each section hosts a list of related terms. For instance, under Device Hardware, some of the terms included are:

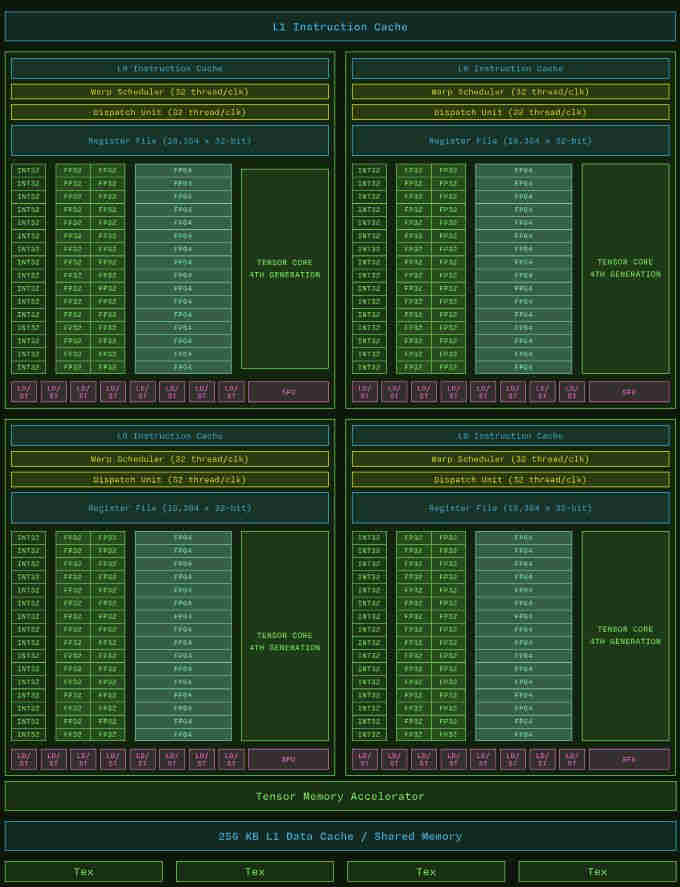

Clicking on a term loads a plain HTML document with detailed information on the matter, crisp diagrams included. An additional boon is that JavaScript is not used, so the site loads quickly.

But why is such a glossary important nowadays, when LLMs dominate and can be asked to reveal anything? It's the same case as laid out in "Explore Programming Idioms":

Yet another advantage of a Wikipedia-like site like this over a chatbot is when I don't want to look up something specific but instead want to casually browse the site to discover terms that I was totally unaware of, or discover terms related to a topic that I'm doing research on. Plus I'm sure that the information presented is factual and not a figment of hallucination.

Other than that, in this era of AI, in order to find your bearings you ought at some point to familiarize yourself with this kind of terminology. I'm sure you've heard of Tensor Cores but might not know what they actually are. Well, here is the answer taken from the glossary: "Tensor Cores are GPU cores that operate on entire matrices with each instruction." The definition comes coupled with a diagram and an explanation.
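As a rough illustration of what "operating on entire matrices with each instruction" means, the following sketch emulates on the CPU the arithmetic of a matrix multiply-accumulate, D = A x B + C, on a small tile. It is an assumption-based teaching aid rather than a description of how the hardware executes: the function names `matmul_accumulate` and `scalar_fma_count` are hypothetical, and the 4x4 tile size is chosen only for illustration.

```python
def matmul_accumulate(a, b, c):
    """Return D = A x B + C for square tiles given as lists of lists.

    A Tensor Core performs this whole operation as one instruction;
    here it is merely emulated with ordinary Python loops.
    """
    n = len(a)
    return [
        [sum(a[i][k] * b[k][j] for k in range(n)) + c[i][j] for j in range(n)]
        for i in range(n)
    ]


def scalar_fma_count(n):
    """Number of scalar multiply-add steps needed for an n x n tile."""
    return n ** 3


if __name__ == "__main__":
    identity = [[1 if i == j else 0 for j in range(4)] for i in range(4)]
    ones = [[1] * 4 for _ in range(4)]
    # One "matrix instruction" stands in for many scalar multiply-adds.
    print(matmul_accumulate(identity, ones, ones))
    print(scalar_fma_count(4), "scalar multiply-adds for a 4x4 tile")
```

A core that works one number at a time would need a separate multiply-add for every term of every output element, which is why folding the whole tile into a single instruction matters so much for matrix-heavy workloads.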

That said, we initially got to know Tensor Cores in NVIDIA Releases Free Courses On AI, where I explained:

NVIDIA, once a company that exclusively catered for gamers by releasing state of the art GPUs, has now become an AI company. This happened as a side effect of the research they've been doing in their quest to produce ever-evolving graphics cards. That led them to the discovery of the tensor cores that are now used to train Large Language Models.

Other interesting topics you'll find in the glossary include crisp explanations of well-known general concepts such as Thread, L1 Data Cache, Registers and Kernel, as well as concepts specific to NVIDIA's technology. These include NVIDIA's CUDA toolkit and programming model, which provides a development environment for speeding up computing applications by harnessing the power of GPUs. The components examined include libcuda.so, NVIDIA CUDA Compiler Driver, NVIDIA CUDA Runtime Driver etc.

As said, the CUDA toolkit is NVIDIA-specific, but last year we looked at ZLUDA, a translation layer that lets you run unmodified CUDA applications with near-native performance on AMD GPUs, in ZLUDA Ports CUDA Applications To AMD GPUs. CUDA also requires the code to be written in C++, but there are ways to interface to it from Java and Python, as examined in Program Deep Learning on the GPU with Triton and TornadoVM Makes It Possible To Run Java on GPUs and FPGAs.

I'm mentioning those articles here to hint that programming GPUs is no longer considered a black art, but is now accessible in day-to-day programming such as game development, AI and many other fields. So whether you're in that kind of business or just a developer who wants to get educated in some important Computer Science concepts, Modal's GPU Glossary is the right place to visit.

More Information

Related Articles

NVIDIA Releases Free Courses On AI
ZLUDA Ports CUDA Applications To AMD GPUs
TornadoVM Makes It Possible To Run Java on GPUs and FPGAs
Program Deep Learning on the GPU with Triton


Last Updated ( Friday, 17 January 2025 )