Google's 25 Years of AI Progress

Written by Mike James

Wednesday, 27 September 2023

As part of Google's 25th-anniversary celebrations, a blog post lists "Our 10 biggest AI moments...". It is true that Google has pushed AI to get us where we are today, but the reality is more nuanced than a list of ten greatest hits. Google was, and is, a search engine, and as such it has been concerned with understanding from the word go. As Larry Page said: “The perfect search engine should understand exactly what you mean and give you back exactly what you need.” The original PageRank algorithm could almost be labeled as AI - it is mathematically advanced and seeks to extract information about popularity and usefulness from a graph of links - but the top ten list starts with spelling correction in 2001.

You might not think of a spelling corrector as AI, but there is a very real sense in which it is the start of the road that leads to Large Language Models (LLMs). Google launched machine translation in 2006. Initially this used a statistical approach rather than a neural network, but it was one of the first examples where having a lot of data seemed to be the key to making things better. Later, in 2016, Google switched to a neural network approach, which really does lead on to LLMs.

Interestingly, the next entry in the list isn't GoogLeNet in 2014. This really was big data, and it was one of the first networks good enough to make you think that machine vision might be usable for real tasks. It is a surprising omission.
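
For readers who have not met PageRank, the idea of extracting "popularity" from a graph of links can be sketched in a few lines: a page's score is roughly the chance that a random surfer, who keeps following links and occasionally jumps to a random page, ends up on it. The following is a minimal power-iteration sketch of the textbook formulation, assuming the commonly quoted damping factor of 0.85 and a made-up four-page web; the function name `pagerank` and the example graph are purely illustrative, and this is not a description of what Google runs in production.

```python
def pagerank(links, damping=0.85, tol=1e-10, max_iter=100):
    """Power-iteration PageRank over a dict mapping page -> list of outgoing links."""
    # Collect every page, including ones that only appear as link targets.
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(max_iter):
        # Pages with no outgoing links spread their rank evenly over every page.
        dangling = sum(rank[p] for p in pages if not links.get(p))
        # Baseline share: the random jump plus the redistributed dangling rank.
        new_rank = {p: (1.0 - damping) / n + damping * dangling / n for p in pages}
        # Each page passes its rank, split equally, along its outgoing links.
        for page, targets in links.items():
            if targets:
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
        converged = sum(abs(new_rank[p] - rank[p]) for p in pages) < tol
        rank = new_rank
        if converged:
            break
    return rank


# A hypothetical four-page web: A, B and D all link to C.
web = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["C"]}
ranks = pagerank(web)
for page, score in sorted(ranks.items(), key=lambda kv: -kv[1]):
    print(f"{page}: {score:.3f}")
```

Page D, which nothing links to, ends up with only the baseline (1 - 0.85)/4 share, while C, which three pages link to, comes out on top. The scores always sum to 1, so they can be read as a probability distribution over pages.
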

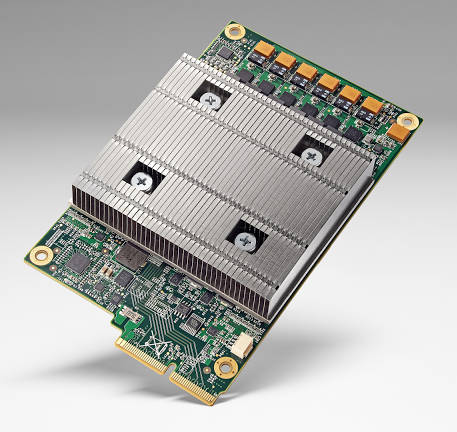

The next entry in Google's list of triumphs is TensorFlow - a software system that makes working with tensors, and hence neural networks, easy. This, coupled with Python "front ends", did a lot to democratise neural networks. Then in 2016 Google launched the TPU - a hardware accelerator designed to speed up TensorFlow workloads. It is clear that Google has contributed to the way neural networks are implemented.

Google experienced big-time success with AlphaGo when it claimed victory over Lee Sedol, one of the world's best Go players, in 2016. This was astounding at the time: we all thought Go was a game that would take AI much longer to master. Over time the approach behind AlphaGo has been generalized to other games and to scientific tasks such as protein folding. This was the first time that reinforcement learning entered the picture as a practical form of AI.

But AlphaGo was the product of DeepMind, an organization that is arguably not really the same as Google, despite several attempts to make it more Google-y. Co-founded in 2010 by Demis Hassabis, London-based DeepMind Technologies was bought by Google for a very large sum of money and worked more or less independently of Google until, as we reported in April 2023: "Alphabet is merging its two prominent AI research teams, Brain and DeepMind to form Google DeepMind. Given the phenomenal success of ChatGPT, this is being interpreted as an attempt to compete with OpenAI in the ongoing race towards the development and adoption of general AI."

Now we come to the real breakthrough. In 2017 Google introduced the transformer. This allows feed-forward networks to do jobs that previously needed recurrent networks, with all their training difficulties. A transformer can learn relationships between separate elements in the data, and as such it is ideal for language processing. We are still learning what the idea of "attention" and the transformer can do for us, but if you had to single out one breakthrough that Google can show for its efforts, it is this.

The transformer led more or less directly to BERT, one of the first LLMs. However, it was OpenAI, co-founded in 2015 by Ilya Sutskever after two years working at Google, that took the idea and ran with it to produce ChatGPT. In response, Google declared a red alert (see Can Google DeepMind Win At AI?) and combined DeepMind and Brain to try to make more of what they had.

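
The "attention" at the heart of the transformer is simpler than the name suggests. In the scaled dot-product form described in the 2017 paper "Attention Is All You Need", each element of the input is scored against every other element, the scores are turned into weights with a softmax, and the output is a weighted average. The sketch below is a pure-Python illustration under simplifying assumptions: there are no learned projection matrices, no multiple heads and no masking, and the token vectors are made up, so it shows only the mechanism that lets a transformer relate separate elements of the data, not a working language model.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, one query row at a time."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three hypothetical 2-d token vectors; Q = K = V here (self-attention with no learned projections).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row is one token's attention weights over all three tokens and sums to 1. Because every row is computed independently of the others, a real implementation can evaluate them all at once as matrix products, which is the kind of parallelism that lets a feed-forward transformer take over jobs that previously needed recurrent networks.
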
This year the result was Bard, and Google lists it as an achievement. How much of an achievement remains to be seen. At the moment Bard can help you in the context of Gmail, Docs, Drive, Flights, Maps and YouTube, and with coding, but it's nowhere near 100% trustworthy, which is what we need it to be. Meanwhile generative AI is being introduced into Search in an initiative called SGE (Search Generative Experience), but this only gets a passing mention in the very last item in the list, which records the launch of PaLM 2, Google's next-generation large language model. It features improved multilingual, reasoning and coding capabilities and gets us one step closer to AI-based search.

More Information

- Our 10 biggest AI moments so far

Related Articles

- Google's Neural Networks See Even Better
- TPU Is Google's Seven Year Lead In AI
- Google Buys Unproven AI Company, Deep Mind
- Why AlphaGo Changes Everything
- Can Google DeepMind Win At AI?
- Google's Large Language Model Takes Control


Last Updated (Wednesday, 27 September 2023)