| Google's Large Language Model Takes Control |

| Written by Mike James | |||

| Wednesday, 08 March 2023 | |||

|

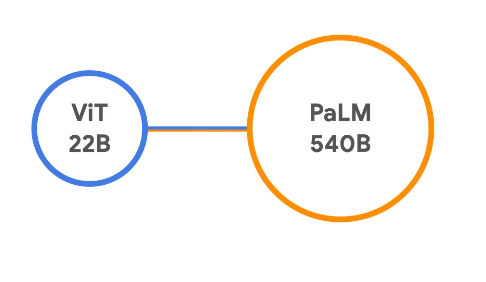

of a robot. No it isn't Skynet just yet, but it is looking a more likely scenario. Until recently I thought that much of the hype about large language models was just that - hype. Now I'm not so sure. I think the breakthrough continues.. First a few words about why the hype about Large Language Models (LLMs) goes too far. LLMs are amazing and they have all sorts of potential uses and it is very early days. My doubts are about the way that Microsoft and, with less enthusiasm, Google are trying to push them into search. At the moment this doesn't seem like a good fit for an AI that has had not chance to validate its output. In other words, LLMs don't distinguish between what is real and what could be real. They have been trained on language and have only knowledge of its structure. This means if you can say it then an LLM will say it, even if it has no grounding in reality. So putting an LLM into a search engine doesn't seem like the best idea. Its doesn't fit with the characteristics of LLMs. However, if you ground an LLM with real world consequences then maybe things will be different. You can argue that we do the job the other way round. We are embodied intelligences and we process our sensor inputs on an continuous basis and so form a model of the world. Only later, when we become homo sapiens , or thereabouts, do we start to move the model into an LLM. Our own language models capture a great deal about the world's structure, but not necessarily what corresponds to the real and the fantasy. We need the real-world grounding to filter out the fantasy. Now we have an example of the grounding of an LLM in the real world. Google, along with the University of Berlin, has created PaLM-E. a model with 562 billion parameters that integrates vision and robot sensor inputs with an LLM. It is an integration of the existing 540 billion parameter PaLM LLM and the 22 billion Vision Transformer, ViT. The new model is called PaLM-E for "Embodied": The main architectural idea of PaLM-E is to inject continuous, embodied observations such as images, state estimates, or other sensor modalities into the language embedding space of a pre-trained language model.

The idea was to convert the new data into a form that made it similar to the language data used to train the model in the first place. The output "sentences" are used to control the robot. Amazingly this is still a "language" model despite the fact that the data correspond to real world sensors. The same sort of approach seems to have worked with vision. The Vision Transformer converted the images into tokens that were fed into the language model. This approach is language models all the way down! There is no clue as to how much data was used in training or how it was performed, but it clearly got somewhere useful. A range of experiments was performed to see how well PaLM-E works. Interestingly it was found that training on more than one task at the same time improved performance by a factor of two and there seems to be transfer learning between vision and language and robot tasks. It also didn't seem to lose much of its linguistic abilities after the training - there was no catastrophic loss of learning and it could still answer questions. You can see the most impressive of the tasks in the video below: In this video, we execute a long-horizon instruction "bring me the rice chips from the drawer" that includes multiple planning steps as well as incorporating visual feedback from the robot's camera. Next, we demonstrate two examples of generalization. In the case below the instruction is "push red blocks to the coffee cup". The dataset contains only three demonstrations with the coffee cup in them, and none of them included red blocks The blocks video is reminiscent of Winograd's shrdlu, look it up, and it is a powerful statement of how far we have come since those early days. The robot video is surprisingly suggestive that domestic robots may not be that far away. I am sometimes asked why self-driving cars and bipedal robots aren't everywhere given how much success neural networks are having. The answer is that both these projects were started well before neural networks were this advanced and it is quite likely that something new will come out of somewhere unexpected and solve both problems. As for the philosophy, what can we say? It is interesting that wetware developed language after embodied intelligence. The purpose of language was to transfer knowledge and hence enhance intelligence. Now our language seems to be passing on what we know about the world to machines as they take language back to the embodied intelligence. If the language model fantasies, the world will soon let it know what is real.

More Informationhttps://palm-e.github.io/#demo Related ArticlesChatGPT Coming Soon To Azure OpenAI Services Open AI And Microsoft Exciting Times For AI The Unreasonable Effectiveness Of GPT-3 To be informed about new articles on I Programmer, sign up for our weekly newsletter, subscribe to the RSS feed and follow us on Twitter, Facebook or Linkedin.

Comments

or email your comment to: comments@i-programmer.info |

|||

| Last Updated ( Wednesday, 08 March 2023 ) |