| TPU Is Google's Seven Year Lead In AI |

| Written by Mike James | |||

| Wednesday, 25 May 2016 | |||

|

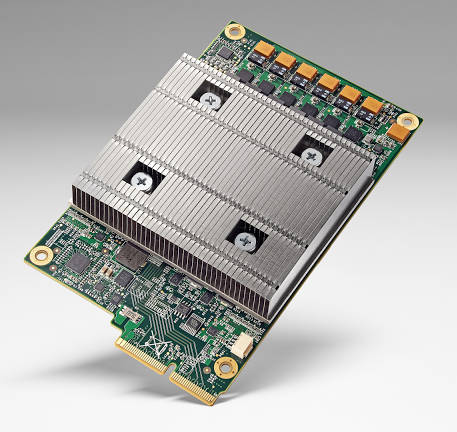

Google has been doing some remarkable things with AI, but the speed of development is set to increase because it has the Tensor Processing Unit, TPU. The TPU is a custom ASIC - Application-Specific Integrated circuit. This doesn't tell you much about exactly what the hardware is because ASIC covers a range of technologies from low cost and easy Field Programable Gate Arrays to full custom integrated circuits that are difficult and cost a lot. All we know is that from the first test samples to getting the device into data centers took just 22 days. Although notice that the project was: "a stealthy project at Google several years ago to see what we could accomplish with our own custom accelerators for machine learning applications." The application that the TPUs are specific to is TensorFlow and neural networks in particular. One of the big bottlenecks here is the huge computing power it takes to train and even use such big deep neural networks. Google now has a short cut. It claims that using TPUs is an order of magnitude better-optimized for performance per watt than other approaches. This is vague, but it is also claimed to have moved the technology about seven years into the future - or three generations of Moore's law. To quote the Cloud Platform Blog: TPU is tailored to machine learning applications, allowing the chip to be more tolerant of reduced computational precision, which means it requires fewer transistors per operation. Because of this, we can squeeze more operations per second into the silicon, use more sophisticated and powerful machine learning models and apply these models more quickly, so users get more intelligent results more rapidly. The reference to reduced precision recalls the well documented behaviour of neural networks that they seem to work just as well with eight-bit and lower arithmetic precision. It seems that for neural nets it is the ensemble of neurons that matters not the accuracy of any particular neuron. Presumably the TPU performs lots of low precision vector arithmetic to make things faster. The TPU is also a very neat piece of hardware. A board with a TPU fits into a hard disk drive slot in our data center racks:

This is all the information we have about the TPU but there are some other interesting revelations in the recent blog post: "Machine learning provides the underlying oomph to many of Google’s most-loved applications. In fact, more than 100 teams are currently using machine learning at Google today, from Street View, to Inbox Smart Reply, to voice search." Notice "more than 100 teams". And: "TPUs already power many applications at Google, including RankBrain, used to improve the relevancy of search results and Street View, to improve the accuracy and quality of our maps and navigation. AlphaGo was powered by TPUs in the matches against Go world champion, Lee Sedol, enabling it to "think" much faster and look farther ahead between moves." So Google, or Deep Mind's, win was enabled by the use of TPUs. Google also claims that the TPU racks at its data centers might become available for anyone to use:,

A TPU rack - anyone got any idea what the notice on the end is about?

The strange thing is that just as Moore's law is running out and hardware really isn't getting any more powerful year on year, the new paradigm of AI, the deep neural network, steps in to make new architectures that keep the rate of progress going. Google having the TPU is another notch up in the AI arms race - I wonder what the others have that they are not talking about?

More InformationGoogle supercharges machine learning tasks with TPU custom chip Related ArticlesTensorFlow 0.8 Can Use Distributed Computing Google Tensor Flow Course Available Free How Fast Can You Number Crunch In Python Google's Cloud Vision AI - Now We Can All Play TensorFlow - Googles Open Source AI And Computation Engine Google Open Sources Accurate Parser - Parsey McParseface

To be informed about new articles on I Programmer, sign up for our weekly newsletter, subscribe to the RSS feed and follow us on, Twitter, Facebook, Google+ or Linkedin.

Comments

or email your comment to: comments@i-programmer.info

|

|||

| Last Updated ( Wednesday, 25 May 2016 ) |