| Google Has Another Machine Vision Breakthrough? |

| Written by Mike James |

| Thursday, 18 July 2013 |

|

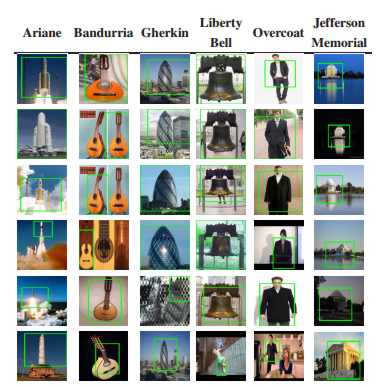

Google Research has just released details of a Machine Vision technique which might bring high power visual recognition to simple desktop and even mobile computers. It claims to be able to recognize 100,000 different types of object within a photo in a few minutes - and there isn't a deep neural network DNN mentioned. There has always been a basic split in machine vision work. The engineering approach tries to solve the problem by treating it as a signal detection task using standard engineering techniques. The more "soft" approach has been to try to build systems that are more like the way humans do things. Recently it has been this human approach that seems to have been on top, with DNNs managing to learn to recognize important features in sample videos. This is very impressive and very important, but as is often the case the engineering approach also has a trick or two up its sleeve. In this case we have improvements to the fairly standard technique of applying convolutional filters to an image to pick out objects of interest. The big problem with convolutional filters is that you need at least one per object type you are looking for - there has to be a cat filter, a dog filter, a human filter and so on. Given that the time it takes to apply a filter doesn't scale well with image size, most approaches that use this method are limited to a small number of categories of object. This year’s winner of the CVPR Best Paper Award, co-authored by Googlers Tom Dean, Mark Ruzon, Mark Segal, Jonathon Shlens, Sudheendra Vijayanarasimhan and Jay Yagnik, describes technology that speeds things up so that many thousands of object categories can be used and the results can be produced in a few minutes with a standard computer. The technique is complicated, but in essence it makes use of hashing to avoid having to compute everything each time. Locality sensitive hashing is use to lookup the results of each step of the convolution - that is, instead of applying a mask to the pixels and summing the result, the pixels are hashed and then used as a lookup in a table of results. They also use a rank ordering method which indicates which filter is likely to be the best match for further evaluation. The use of ordinal convolution to replace linear convolution seems to be as important as the use of hashing. The result of the change to the basic algorithm is a speed up of around 20,000 times, which is astounding. The method was tested on 100,000 object detectors based on a deformable-part model which required over a million filters to be applied to multiple resolution scalings of the target image which were computed in less than 20 second using nothing but a single multi-core machine with 20GB of RAM.

As the paper points out, an average human can recognize around 10,000 high level object categories so in this case the machine approach might be a way to provide basic features to a higher-level classifier. What is clear is that it is never safe to write off an approach in AI. In this case, being able to implement such sophisticated object detection methods at speed is not only likely to result in real world applications but in improvements in the detectors themselves. Being able to test and see results quickly is a prerequisite for building even more sophisticated methods. More InformationFast, Accurate Detection of 100,000 Object Classes on a Single Machine Related ArticlesA Billion Neuronal Connections On The Cheap Deep Learning Powers BING Voice Input Google Explains How AI Photo Search Works Near Instant Speech Translation In Your Own Voice Google's Deep Learning - Speech Recognition A Neural Network Learns What A Face Is

To be informed about new articles on I Programmer, install the I Programmer Toolbar, subscribe to the RSS feed, follow us on, Twitter, Facebook, Google+ or Linkedin, or sign up for our weekly newsletter.

Comments

or email your comment to: comments@i-programmer.info

|

| Last Updated ( Thursday, 18 July 2013 ) |