Google Releases Object Detector Nets For Mobile

Written by Mike James

Wednesday, 21 June 2017

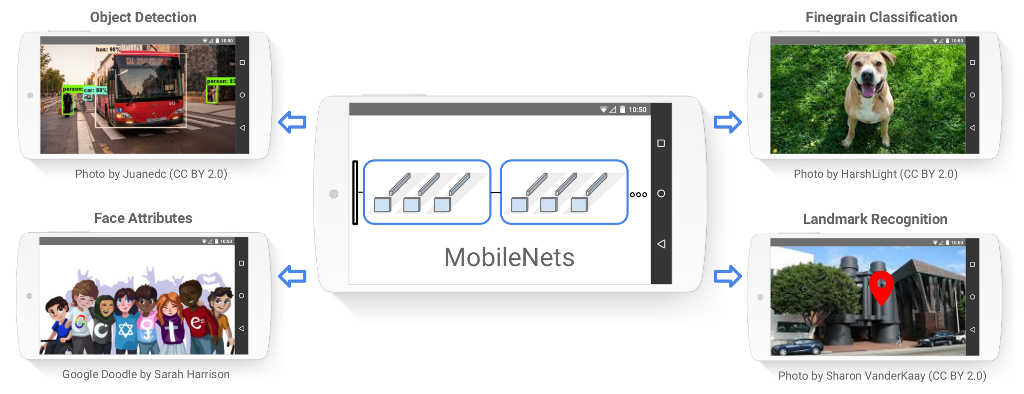

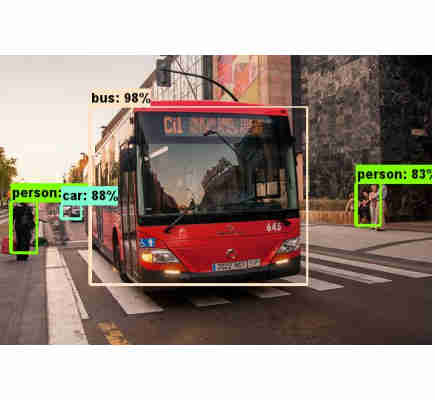

Google seems to be giving away its crown jewels. MobileNets are a small set of neural network models pre-trained to identify objects. In theory this software should be of great value, but Google is making it available for free.

A neural network is a fairly simple thing to use once it has been trained. All you have to do is multiply the inputs by the weights in the first layer, sum the products for each neuron and apply an output function to each sum. Repeat for each layer, feeding it the outputs of the layer before, and when you reach the final layer you have your result. This sounds so easy that even the most feeble of processors should be able to calculate the model. However, this doesn't take into account the huge size of the network. We may have improved our use of neural networks, but the real advance is simply in how big a network we can train - you could say networks have got bigger, not smarter. Modern neural networks have millions of weights, so to compute the output of a trained net you still need a reasonably powerful processor.

Google's MobileNet models are fully trained models that are designed to let you select a network size to suit the processor you are going to use. The idea is that they will allow neural networks to be computed on mobile devices such as phones. At the moment most mobile AI applications work by uploading images or voice clips to the cloud, where more powerful processors can deal with implementing the model. Having a neural net which can be computed on the mobile device can be faster than an upload and, of course, it works when the mobile is disconnected.

All of the models have been trained on the ILSVRC-2012-CLS image dataset and so they are capable of recognizing a wide range of objects. Note that ILSVRC-2012-CLS is a classification task, so the pre-trained models label what is in an image; placing bounding boxes around the objects they have identified means using a MobileNet as the base of a detection model.
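The layer-by-layer calculation described above can be sketched in a few lines of plain Python. This is only an illustration of the idea for fully connected layers, not the MobileNet code: the function names, the tiny two-layer network and its weights are all made up for the example, and a bias term is assumed for each neuron.

```python
def relu(x):
    """A common output (activation) function: negative sums become zero."""
    return max(0.0, x)


def identity(x):
    """Leave the weighted sum unchanged (used here for the final layer)."""
    return x


def forward(inputs, layers):
    """Compute the output of a trained fully connected network.

    `layers` is a list of (weights, biases, activation) tuples, where
    weights[j] holds the weights of neuron j in that layer.
    """
    activations = list(inputs)
    for weights, biases, activation in layers:
        # Each neuron: multiply inputs by weights, sum, add bias, apply function.
        activations = [
            activation(sum(w * a for w, a in zip(row, activations)) + b)
            for row, b in zip(weights, biases)
        ]
    return activations


# Hypothetical toy network: 2 inputs -> 2 hidden neurons -> 1 output.
toy_layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0], relu),
    ([[1.0, 2.0]], [0.5], identity),
]

output = forward([2.0, 1.0], toy_layers)
print(output)  # [4.5]
```

The toy network needs only six multiplications. A real image model repeats the same pattern millions of times, which is why the size of the network, not the complexity of the calculation, is what strains a mobile processor.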

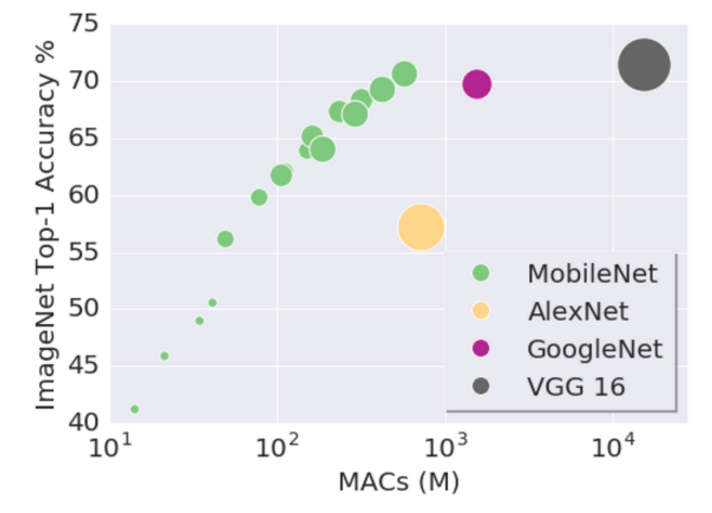

You can select the MobileNet you want to use according to the number of weights. Of course, more weights means a bigger network, which means more calculations but also more accuracy. You can see how the different sizes of network perform in the chart below. The horizontal scale is in MACs, the number of multiply-accumulate operations needed.
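To give a feel for what a MAC count measures, here is a rough sketch that counts the multiply-accumulate operations in a single convolution layer. It compares a standard convolution with the depthwise separable convolution that the paper listed in More Information uses as its building block. The cost formulas follow that paper; the function names and the layer dimensions are assumptions chosen purely for illustration, and a square kernel and a square output feature map are assumed.

```python
def standard_conv_macs(kernel, in_channels, out_channels, out_size):
    """MACs for a standard convolution with a kernel x kernel filter
    producing an out_size x out_size feature map."""
    return kernel * kernel * in_channels * out_channels * out_size * out_size


def depthwise_separable_macs(kernel, in_channels, out_channels, out_size):
    """MACs for a depthwise convolution followed by a 1x1 pointwise convolution."""
    # Depthwise step: one kernel x kernel filter per input channel.
    depthwise = kernel * kernel * in_channels * out_size * out_size
    # Pointwise step: a 1x1 convolution that mixes the channels.
    pointwise = in_channels * out_channels * out_size * out_size
    return depthwise + pointwise


# Hypothetical layer: 3x3 kernel, 64 -> 128 channels, 56x56 output.
std = standard_conv_macs(3, 64, 128, 56)
sep = depthwise_separable_macs(3, 64, 128, 56)
ratio = sep / std

print(f"standard:  {std:,} MACs")
print(f"separable: {sep:,} MACs")
print(f"ratio:     {ratio:.3f}")  # about 0.119 for this layer
```

For a 3x3 kernel the separable form needs roughly an eighth to a ninth of the multiply-accumulates of the standard form, which is the kind of saving that moves a model along the horizontal axis of such a chart.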

You can see that the MobileNets at their best are as good as well-known models. On the other hand, I'm not sure what some of the very low accuracy nets might be used for - apps where objects only need to be detected occasionally? The following table gives you the performance in detail:

Top-1 accuracy is the fraction of images for which the correct label is in the first position, and top-5 accuracy is the fraction for which it is among the top five labels.

All of these models run on TensorFlow or TensorFlow Mobile. They are all open source and can be downloaded from GitHub. The team has produced a paper (listed in More Information) which explains how they reduced the number of weights while keeping the performance high.

It still raises the question of why Google is giving away such valuable software. Will the philanthropy end when a real killer app is on the horizon?
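As a footnote on the top-1 and top-5 figures defined above, the following sketch shows how such a metric could be computed from a model's class scores. The function name and the tiny made-up score table are assumptions for illustration only; they are not results from the MobileNet models.

```python
def top_k_accuracy(scores, labels, k):
    """Fraction of examples whose true label is among the k highest scores.

    `scores` holds one list of per-class scores per example and
    `labels` holds the index of the correct class for each example.
    """
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices ordered from highest score to lowest.
        ranked = sorted(range(len(row)), key=lambda i: row[i], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return hits / len(labels)


# Made-up scores for four examples over three classes.
example_scores = [
    [0.1, 0.7, 0.2],  # true class 1: ranked first
    [0.5, 0.3, 0.2],  # true class 1: ranked second
    [0.6, 0.3, 0.1],  # true class 2: ranked last
    [0.2, 0.2, 0.6],  # true class 2: ranked first
]
example_labels = [1, 1, 2, 2]

top1 = top_k_accuracy(example_scores, example_labels, k=1)
top2 = top_k_accuracy(example_scores, example_labels, k=2)
print(top1, top2)  # 0.5 0.75
```

Top-k accuracy can never be lower than top-1, because every example counted as a top-1 hit is also a top-k hit.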

More Information

MobileNets: Open-Source Models for Efficient On-Device Vision

MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications (PDF)

Related Articles

We May Have Lost At Go, But We Still Stack Stones Better

The Shape Of Classification Space Is Mostly Flat

Microsoft Cognitive Toolkit Version 2.0

Evolution Is Better Than Backprop?


Last Updated: Wednesday, 21 June 2017