| Why Deep Networks Are Better |

| Written by Mike James |

| Wednesday, 24 May 2017 |

|

Why is it that deep neural networks are better even though we have known for a long time that anything a deep network can compute can also be computed by a shallow network? Physicist Max Tegmark and Mathematician David Rolnick have a reason for us.

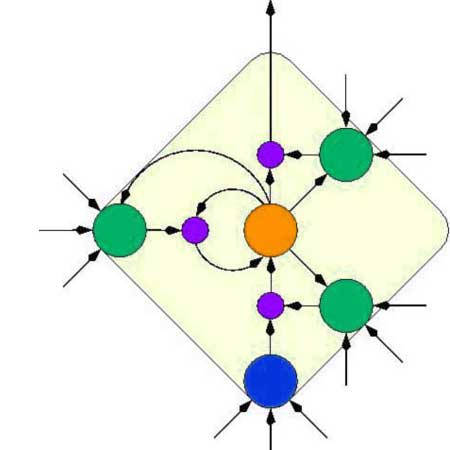

One of the fundamental errors that neural network beginners often make is due to Kolmogorov's theorem. This and other results prove that you only need a very simple type of function to approximate any function. This then leads on to the conclusion that a two layer network is enough to implement any arbitrary function. Notice that it has to be two layers because one layer isn't enough. That one layer isn't enough is the reason that work on neural networks ground to a halt because Minsky and Papert proved that perceptrons, i.e. one layer networks, couldn't learn various simple things like the XOR function. We didn't have a good training algorithm for multi-layer nets at the time and so it was generally thought that the fact that single layer networks couldn't work made the whole enterprise a non-starter. Of course, we now know that this wasn't the case and multi-layer networks learn very well indeed. The rejection of neural networks because single-layer networks aren't enough has an evil twin - the conclusion from the representation theorem that two layers are sufficient. This often causes beginners to use a small number of layers on the grounds that they are enough, and anything an n-layer network can learn a two-layer network can. This may be true but it isn't the whole story. In a new paper, Tegmark and Rolnick continues the investigation into the problem of why deep neural networks are so good. It is well-known that neural networks are universal approximators, but that deeper networks tend to be much more efficient than shallow ones. We shed light on this by proving that the total number of neurons m required to approximate natural classes of multivariate polynomials of n variables grows only linearly with n for deep neural networks, but grows exponentially when merely a single hidden layer is allowed. We also provide evidence that when the number of hidden layers is increased from 1 to k, the neuron requirement grows exponentially not with n but with n 1/k, suggesting that the minimum number of layers required for computational tractability grows only logarithmically with n. So if you restrict yourself to shallow networks you need a lot of neurons, but if you stack them into deep layers you can get away with very many fewer. The difference is huge. Going back to an example of the same general process from an earlier paper, we have: For example, a deep neural network can multiply 32 numbers using 4n = 160 neurons while a shallow one requires 2 32 = 4, 294, 967, 296 neurons. What is going on here? The answer is very simple, but it is hard to find in among all the mathematics presented. It stems from the observation that if you need to calculate xn then you need n multiplications if you use a single-layer approach. However if you use a multi-layer approach you can use the results of each layer to speed up the next. For example, if you need to compute x8 then with a single-layer network you need 7 multiplications: x * x * x * x * x * x * x * x Using a multi-layered network you can do the job using many fewer mutliplications: Layer 1 2 3 (x * x) -> x2 -> (x2 * x2) -> x4 -> (x4 * x4)-> x8

Where each layer performs the square function and hence needs only three multiplications. In short, hierarchical networks can use earlier results to build up more complex results and don't have to return to the raw data to do the job. This is well known and fairly obvious, but the analysis does give some insights. If you now read the paper it should make more sense. What is much more important about deep neural networks is not that they are more efficient at computing complex functions but how easy they are to train. It is tempting at this point to ask mathematical questions such as how many training samples and training epocs are needed, but this really isn't the big question. The real question is why and how to deep networks learn things in a way that seems similar to the way humans see the world. What is interesting about neural networks is that unlike classical statistical methods they get things wrong in interesting ways. The exception of course are adversarial images, but this is another story completely. If you restrict your attention to natural images then mostly neural networks get things wrong in reasonable ways. It seems likely that a hierarchical deep network can learn features at different levels of complexity that capture the structure of the data without simply memorising it. A deep enough neural network has sufficient variety to capture the structure of the natural world.

More InformationThe power of deeper networks for expressing natural functions Why does deep and cheap learning work so well? Related ArticlesAlphGo Defeats World's Top Ranking Go Player Evolution Is Better Than Backprop? A Single Perturbation Can Fool Deep Learning Artificial Intelligence - strong and weak

To be informed about new articles on I Programmer, sign up for our weekly newsletter, subscribe to the RSS feed and follow us on Twitter, Facebook or Linkedin.

Comments

or email your comment to: comments@i-programmer.info

|

| Last Updated ( Wednesday, 24 May 2017 ) |