The Digital Camera

Written by Harry Fairhead

Wednesday, 21 March 2012


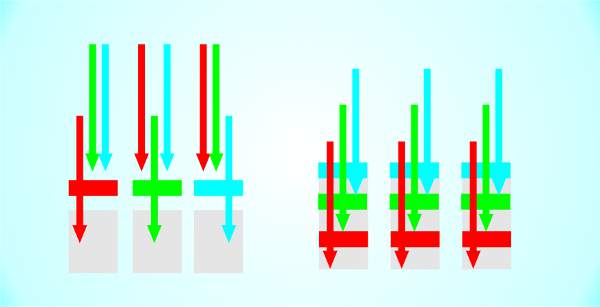

An alternative approach to color

A different approach to the color problem is the X3 sensor from Foveon. It uses a three-layer sensor that relies on the fact that light of different colors penetrates the silicon to different depths. You can think of it as three conventional sensors stacked on top of each other, one for red, one for green and one for blue.
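To see why stacking the color layers matters, it helps to count how many samples of each color the two designs actually record. The sketch below assumes a standard RGGB Bayer layout and a stacked sensor that measures red, green and blue at every photosite; the function names are purely illustrative.

```python
# Compare how many samples of each color two sensor designs record,
# assuming an RGGB Bayer mosaic and a three-layer stacked sensor that
# measures red, green and blue at every photosite.

def bayer_samples(width, height):
    """Per-color sample counts for an RGGB Bayer mosaic of width x height."""
    even_rows, odd_rows = (height + 1) // 2, height // 2
    even_cols, odd_cols = (width + 1) // 2, width // 2
    # Even rows alternate R G R G..., odd rows alternate G B G B...
    return {
        "R": even_rows * even_cols,
        "G": even_rows * odd_cols + odd_rows * even_cols,
        "B": odd_rows * odd_cols,
    }


def stacked_samples(width, height):
    """A stacked sensor measures all three colors at every photosite."""
    n = width * height
    return {"R": n, "G": n, "B": n}


if __name__ == "__main__":
    w, h = 4000, 3000  # a nominal 12-megapixel sensor
    print("Bayer:  ", bayer_samples(w, h))
    print("Stacked:", stacked_samples(w, h))
```

For a 4000 x 3000 sensor the Bayer mosaic measures 3 million red, 6 million green and 3 million blue values, so two of the three color values at each pixel have to be interpolated, while the stacked design measures all 12 million of each.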

The result is a sensor whose true color resolution equals the number of pixels it claims to have, whose color quality is far superior to that of the Bayer filter and which shows none of the Bayer filter's characteristic color patterning. Currently, however, there aren't many cameras that make use of it.

As well as creating problems, handling color electronically can give us some advantages. The strange truth is that the human eye lies, and it does so most of the time. The color that you see as white most probably isn't. If you view a white card in light with a colored tint then, as long as the scene has a sufficient range of colors, you will still see the card as white, even though it isn't. If you take a photograph, however, the camera never lies and the white card takes on the tint of the light. This is generally called a color cast and it can occur whenever the light or the scene has a bias towards one particular color. It can also happen if the film or camera is faulty.

Removing a color cast in the darkroom is a matter of using a set of balanced optical filters. A digital camera, on the other hand, can be made to lie by adjusting the "white balance" (WB). All it has to do is turn its sensitivity to each color up or down so that what you think is white actually shows as white. Simple cameras use fixed WB settings; others let you point the camera at what looks like a white area to you and then adjust the WB to make it white. If you make a mistake, or if the camera doesn't have a WB control, then any color cast can usually be corrected using a photo editor.

The intelligence in the camera

The sensor governs the potential quality of the image that you take, but how the processor makes use of the sensor and the data it generates is also important. The use of software in a camera isn't confined to digital cameras. Electronics and software found their way into film cameras in the form of autofocus and autoexposure controls well before digital cameras were practically possible.
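The white balance adjustment described above comes down to multiplying each color channel by its own gain. This is a minimal sketch, assuming 8-bit pixels held as (r, g, b) tuples and keeping green fixed as the reference channel; both of these are assumptions for illustration. The same gain calculation serves the "point the camera at something white" method, fed a white patch, and a simple automatic method, fed the whole image under the gray-world assumption that a scene averages out to neutral gray. Gray world is just one well-known heuristic and not necessarily what any particular camera does.

```python
# White-balance sketch: scale each color channel by a gain so that a
# chosen reference ends up neutral. Pixels are hypothetical 8-bit
# (r, g, b) tuples; green is used as the fixed reference channel.

def mean_rgb(pixels):
    """Average red, green and blue values over a list of (r, g, b) pixels."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))


def neutral_gains(pixels):
    """Return (red, green, blue) gains that make the average of `pixels` gray.

    Pass a patch that should be white for a manual white balance, or the
    whole image for the gray-world automatic heuristic.
    """
    r, g, b = mean_rgb(pixels)
    if r == 0 or b == 0:
        raise ValueError("reference has no red or no blue to balance")
    return (g / r, 1.0, g / b)


def apply_gains(image, gains):
    """Multiply every pixel by the per-channel gains, clipping to 0-255."""
    return [
        tuple(min(255, round(value * gain)) for value, gain in zip(pixel, gains))
        for pixel in image
    ]


if __name__ == "__main__":
    # A white card photographed under warm light comes out yellowish.
    card = [(240, 220, 180)] * 4
    scene = card + [(120, 110, 90), (60, 80, 40)]

    manual = neutral_gains(card)       # point the camera at the card
    print(apply_gains(scene, manual))  # the card becomes (220, 220, 220)

    auto = neutral_gains(scene)        # gray-world estimate from the whole scene
    print(auto)
```

Turning the red gain down and the blue gain up is exactly the "turn the sensitivity to each color up or down" step; the clipping to 255 is a reminder that boosting a channel can push bright areas out of range.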
In the case of the digital camera, however, the processor can actually get at the picture that is being taken and improve it. Some of these improvements fail to deliver all they promise. Digital zoom, which claims to let you zoom in without the need for an expensive zoom lens (i.e. optical zoom), isn't really very good. A 2x digital zoom, for example, takes the data from the central part of the sensor, half its width and half its height and so only a quarter of the total number of pixels, and spreads it out to fill the frame. Clearly the result is a picture with only half the linear resolution, but clever software can improve the look by using interpolation algorithms to calculate values for the missing pixels. Even the best interpolation algorithm is no substitute for a real optical zoom.

There are other, more welcome, ways in which the processor can make picture taking easier and more reliable. For example, it can automatically adjust the color balance so that white really does look white in the photo. Going a step beyond simply setting the exposure, the processor can manipulate the contrast to bring out detail in the shadows and highlights.

Finally, the processor is responsible for compressing the picture data so that you can fit more photos into the storage. Usually cameras compress to JPEG format, but professional cameras can also store the unprocessed sensor data in a format called RAW, which really isn't a single format because each manufacturer has its own version. RAW has lots of advantages over JPEG in that it keeps all of the quality that the sensor can deliver, and sometimes it can provide a higher dynamic range than JPEG.

All of this processing takes time, and one of the big problems is that often the processor hasn't finished dealing with the picture you have just taken before you are ready to take another. Cameras differ in how quickly they are ready to take a first photo and how quickly they can be ready to take subsequent photos. The processor is usually so busy that it even stops updating the LCD screen, so following the action between shots can be difficult.
In effect the camera freezes while the photo is processed. As mobile processors get faster things get better, but the design of a photo processing system is still an area where manufacturers compete. Cameras differ in how many frames per second they can shoot, their time to first photo and similar measures of speed. The problem is that as processors get faster, the tasks that the camera is asked to perform become more sophisticated. Image processing is moving from the digital darkroom into the camera itself.

Even this isn't the end of the story, with advanced AI techniques being used to remove unwanted people from a photo, to perform face recognition and to tag the people recognized. There are even cameras that record more than one image of a scene and allow you to refocus or reframe the shot at a later time.

So much has changed since the first digital cameras, but there is much more still on the way. Photography isn't just digital, it's computational. We are in a new era of computational photography and the key is software.
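As a small example of this kind of in-camera software, the 2x digital zoom discussed earlier can be sketched as a centre crop followed by interpolation back up to full size. This is a minimal illustration, assuming a grayscale image stored as a list of rows of numbers; bilinear interpolation is used only as one common choice, and the function names are hypothetical rather than any camera's actual implementation.

```python
# Digital zoom sketch: crop the centre of the frame, then interpolate the
# crop back up to the original size. Images are lists of rows of numbers.

def crop_center(img, zoom):
    """Keep the central 1/zoom of the width and 1/zoom of the height."""
    h, w = len(img), len(img[0])
    ch, cw = max(1, int(h / zoom)), max(1, int(w / zoom))
    top, left = (h - ch) // 2, (w - cw) // 2
    return [row[left:left + cw] for row in img[top:top + ch]]


def resize_bilinear(img, out_h, out_w):
    """Resize a grayscale image using bilinear interpolation."""
    in_h, in_w = len(img), len(img[0])
    out = []
    for y in range(out_h):
        # Map the centre of each output pixel back into input coordinates,
        # clamped so that we never read outside the input image.
        sy = min(max((y + 0.5) * in_h / out_h - 0.5, 0.0), in_h - 1)
        y0 = int(sy)
        y1 = min(y0 + 1, in_h - 1)
        fy = sy - y0
        row = []
        for x in range(out_w):
            sx = min(max((x + 0.5) * in_w / out_w - 0.5, 0.0), in_w - 1)
            x0 = int(sx)
            x1 = min(x0 + 1, in_w - 1)
            fx = sx - x0
            # Blend horizontally on two rows, then blend those vertically.
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


def digital_zoom(img, zoom=2):
    """Crop by `zoom` and stretch the result back to the full frame size."""
    h, w = len(img), len(img[0])
    return resize_bilinear(crop_center(img, zoom), h, w)


if __name__ == "__main__":
    frame = [[x + 10 * y for x in range(8)] for y in range(8)]
    crop = crop_center(frame, 2)
    zoomed = digital_zoom(frame, 2)
    print(len(crop) * len(crop[0]), "measured values")    # 16
    print(len(zoomed) * len(zoomed[0]), "output pixels")  # 64
```

With zoom=2 only 16 of the 64 original pixel values survive the crop, so all 64 output pixels are calculated from a quarter of the data. Interpolation can make the result look smoother, but it cannot restore detail the crop threw away, which is why optical zoom remains better.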



Last Updated (Wednesday, 21 March 2012)