Ethics Guidelines For Trustworthy AI

Written by Nikos Vaggalis

Monday, 22 April 2019


Chapter I - Foundations of Trustworthy AI focuses on four ethical principles, rooted in fundamental rights, which must be respected in order to ensure that AI systems are developed, deployed and used in a trustworthy manner. Those principles are:

(i) Respect for human autonomy

(ii) Prevention of harm

Microsoft's 2016 AI Twitter chatbot incident serves as a lesson here. The researchers' intention was that the chatbot, Tay, would acquire intelligence through conversations with humans. Instead, it was manipulated into abandoning its innocent and admittedly naive personality, resembling that of a teenage girl, and adopting an anti-feminist and racist character. Microsoft later admitted there was a bug in its design. This reminds us that, after all, AI is just software and thus prone to the same issues any program faces throughout its lifetime. By extension, who can tell what will happen if the software agents that power robotic hardware get hacked or infected with a virus? How can we take adequate precautions against such an act?

You could argue that this is a matter of human malice and can be avoided with appropriate safety nets. Reality is quick to prove that notion false: bugs leading to vulnerabilities or malfunctions are discovered every day, in practically every piece of software ever developed. But, for the sake of argument, let's pretend that humans develop bug-free software, eliminating the possibility of hacking and of viruses spreading. What, then, about a machine that modifies and evolves its own code base?

(iii) Fairness

It is an open secret that AIs reflect the biases of their makers. Consider, for example, the résumé-sorting algorithms that derived candidates' race from their CVs and used it for or against them when deciding whether or not to promote them.

(iv) Explicability

We explored an example of this in "TCAV Explains How AI Reaches A Decision", which looked at SkinVision, a mobile app that, from a picture of a mole, can decide whether it is malignant or not. An incorrect diagnosis that misinterprets a malignant mole as benign could have dire consequences. But the opposite error is not without its downsides either: it would cause needless stress to users and turn them into an army of pseudo-patients knocking down their already burned-out practitioners' doors. For such an AI algorithm to be successful, it is of utmost importance that it can replicate the doctor's actions. In other words, it has to be able to act as a doctor, leveraging the doctor's knowledge. But why is it so necessary for the algorithm to be blindly trusted, for the diagnosis to be autonomous? Because:

> Across the globe, health systems are facing the problem of growing populations, increasing occurrence of skin cancer and a squeeze on resources. We see technology such as our own as becoming ever more integrated within the health system, to both ensure that those who need treatment are made aware of it and that those who have an unfounded concern do not take up valuable time and resources. This integration will not only save money but will be vital in bringing down the mortality rate due to earlier diagnosis and will help with the further expansion of the specialism.

Then there is the possibility of tensions arising between those principles, as in situations where "the principle of prevention of harm and the principle of human autonomy may be in conflict". There are many applications besides surveillance, such as

It also compromises privacy by tracking public activity, introducing the ability to link a person's physical presence to the places they have been, something that until now was only feasible by monitoring credit card transactions or capturing the MAC address of their mobile device.

Imagine the ethical implications for personalized advertising. Potentially, it contributes to an already troubling scenario in which privacy and its protective measures, such as cryptography, are under heavy attack, blurring the line between invading privacy and using surveillance as a countermeasure against crime and terror. As expected, there are no fixed recommendations in cases like this, since they are deemed too fluid to reach a solid conclusion, a situation worsened by the inability of law and ethics to keep up with the challenges such a technology heralds. As such, law and ethics have no answer to any of the aforementioned dilemmas. One thing is certain, however: this technology grants great power, and with great power comes great responsibility.

Chapter II: Realizing Trustworthy AI

This chapter essentially reiterates the concepts of the previous one, but in more concrete terms, via a list of seven requirements:

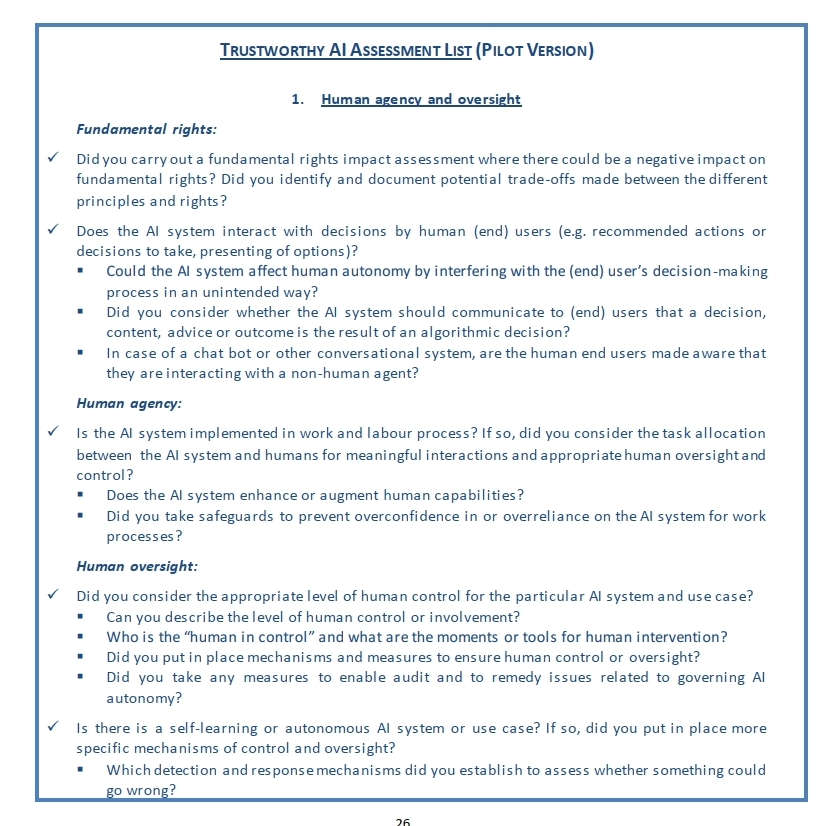

The chapter concludes with technical and non-technical methods to realize Trustworthy AI. "Technical" here doesn't mean code or algorithms but, once again, suggestions, with the difference that they look at the methodologies that should be employed to build such trust. As such, the lifecycle of building trustworthy AI should involve "'white list' rules (behaviors or states) that the system should always follow, and 'black list' restrictions on behaviors or states that the system should never transgress". There are also methods to ensure value-by-design, methods that allow the AI to explain itself, and methods for testing, validation and quality assessment.

The "non-technical" methods include regulation; codes of conduct; standardization; certification; accountability via governance frameworks; education and awareness to foster an ethical mind-set; stakeholder participation and social dialogue; diversity; and inclusive design teams.

Chapter III: Assessing Trustworthy AI

This chapter revolves around a checklist prepared for stakeholders who'd like to implement Trustworthy AI in their organizations or products. In a modern company, this list will have to be used according to the roles of its departments and employees. Accordingly, the Management/Board would discuss and evaluate the AI system's development, deployment or procurement, serving as an escalation board for evaluating all AI innovations and uses when critical concerns are detected, whereas the Compliance/Legal/Corporate department "would use [the list] to meet the technological or regulatory changes". Quality Assurance would "ensure and check the results of the assessment list and take action to escalate issues arising", while developers and project managers would "include the assessment list in their daily work and document the results and outcomes of the assessment".
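The guidelines describe the "white list" and "black list" rules of Chapter II only as a methodology, not as code. Purely as an illustration, here is a minimal Python sketch of how such rules might be checked before an AI system acts, assuming the system's proposed next state can be inspected as a dictionary. The `RuleSet` class, the chatbot fields `reply` and `disclosed_as_bot`, and the placeholder blocked terms are all hypothetical and not taken from the guidelines; the two example rules loosely echo concerns raised in the document, a Tay-like chatbot producing offensive output and covert AI systems impersonating humans.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical representation of what an AI system is about to do,
# e.g. a chatbot's next reply plus some metadata about it.
State = Dict[str, object]
Rule = Callable[[State], bool]


@dataclass
class RuleSet:
    """White-list rules must always hold; black-list rules must never hold."""
    white_list: Dict[str, Rule] = field(default_factory=dict)
    black_list: Dict[str, Rule] = field(default_factory=dict)

    def violations(self, state: State) -> List[str]:
        """Return the names of every rule the proposed state breaks."""
        broken = [f"white list: {name}"
                  for name, rule in self.white_list.items() if not rule(state)]
        broken += [f"black list: {name}"
                   for name, rule in self.black_list.items() if rule(state)]
        return broken

    def permits(self, state: State) -> bool:
        """A state is allowed only if it breaks no rule at all."""
        return not self.violations(state)


# Placeholder vocabulary (hypothetical), purely for illustration.
BLOCKED_TERMS = {"badword", "otherbadword"}


def contains_blocked_term(state: State) -> bool:
    # Naive whole-word match on the lower-cased reply text.
    words = set(str(state.get("reply", "")).lower().split())
    return not words.isdisjoint(BLOCKED_TERMS)


rules = RuleSet(
    white_list={"discloses it is an AI": lambda s: s.get("disclosed_as_bot") is True},
    black_list={"contains blocked term": contains_blocked_term},
)

if __name__ == "__main__":
    ok = {"reply": "Hello there", "disclosed_as_bot": True}
    bad = {"reply": "you badword", "disclosed_as_bot": False}
    print(rules.permits(ok))      # True
    print(rules.violations(bad))  # ['white list: discloses it is an AI', 'black list: contains blocked term']
```

Reporting which list each broken rule belongs to keeps the two kinds of constraint distinct, so a reviewer can tell a missing required behavior from a forbidden one. A keyword filter like this is trivially easy to evade, which is rather the point made about Tay: such rules are one safeguard among many, not a guarantee.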

The Chapter III assessment list is the kind of list that could serve as an entry barrier for private-sector companies seeking contracts with the public sector: "Have you checked everything on the list? If yes, there's your contract."

The guidelines conclude with examples of opportunities where AI can be put to innovative use, as in climate action and sustainable infrastructure, health and well-being, and quality education and digital transformation. I would also add the following to this list, taken from the "How Will AI Transform Life By 2030? Initial Report": transportation, home/service robots and low-resource communities. Other examples would include mobile devices that shut off all communication when they sense that their owners need some rest, intelligent agents that start a conversation with you when they sense loneliness in the sound of your voice or read it in your facial expressions, self-driving cars that give mobility to disabled people or make the roads safe again, and more.

The document also covers the flip side of the coin, with examples of critical concerns raised by the use of AI, such as identifying and tracking individuals, covert AI systems (impersonating humans), AI-enabled citizen scoring in violation of fundamental rights and, of course, lethal autonomous weapon systems (LAWS), which we've already explored in "Autonomous Robot Weaponry - The Debate".

To sum up the guidelines: Chapter I was about the ethical principles and rights that should be built into AI, Chapter II laid out the seven key requirements for realizing Trustworthy AI, while Chapter III went through the non-exhaustive assessment list organizations need in order to implement such AI, and included a few examples of beneficial opportunities as well as critical concerns. Wrapping up, the guidelines can be considered a good attempt by the EU to catch up with the coming revolution.

As with every technology, there is good use and bad use, and the guidelines try to foster the correct use among all stakeholders. Scientists and policy makers can answer some of the questions raised by the report but not others, so it increasingly seems that decisions will be made case by case, through trial and error. Ethics aside, there's still the question of how the future workplace is going to be shaped by the use of AI (see "Do AI, Automation and the No-Code Movement Threaten Our Jobs?"). The question that should be addressed as soon as possible is whether everyone will be affected positively and equally by the coming revolution. Answer that and the task is almost done.
