| Applying C - Cores |

| Written by Harry Fairhead | |||

| Monday, 03 July 2023 | |||

Page 1 of 2 Usually you can just leave the allocation of cores to the operating system, but what if you want to control how they are used? This extract is from my book on using C in an IoT context. Now available as a paperback or ebook from Amazon.Applying C For The IoT With Linux

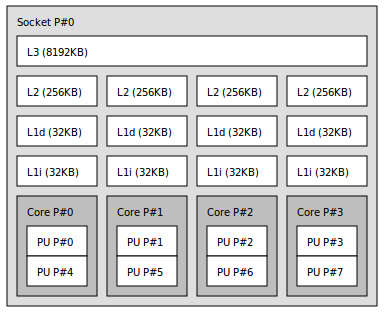

Also see the companion book: Fundamental C Modern processors are much more complex than the simple, single core, design you typically find in a small embedded processor. This technology has slowly trickled down to lower-cost devices so that today even a low-cost SBC can not only run Linux, but do so with multiple cores. This makes threading even more challenging because, instead of just seeming to run concurrently by the operating system’s scheduling algorithms, they really can be running at the same time on different cores. That is, a modern processor is capable of true parallel processing. Not only are multiple cores a common feature of low-cost SBCs, each core can also have a sophisticated pipeline processor which can reorder instructions to get the best performance. This means that you can no longer rely on operations occurring in the order that you specified them, even within the same thread. To deal with this we need to introduce ways of specifying instruction order via memory barriers. Finally, modern processors often provide alternatives to using locking to control access to resources. C11 introduced atomic operations, atomics, to take advantage of this. Managing CoresSo far we have been ignoring the possibility that there is more than one processor. With the advent of multi-core CPUs, however, even low-end systems tend to have two or more cores and some support logical or hyperthreading cores which behave as if they were separate processors. This means that your multi-threaded programs are true parallel programs with more than one thing happening at any given time. This is a way to make your programs faster and more responsive, but it also brings some additional problems. Processor architecture is far too varied to go into details here, but there are some very general patterns that are worth knowing about. Using this knowledge you should be able to understand, and make use of, the specific architecture of any particular machine. Even relatively small machines have multiple processing cores. A core is a complete processor (P) but a core can also contain more than one processing unit (PU). A processing unit isn’t a complete processor; it has its own set of registers and state, but it shares the execution unit with other PUs. At any one time there is generally only one PU running a program and if it is blocked for any reason another one can take over. A good description is that a PU is a hardware implementation of a thread, hence another term for the technique – hyperthreading. The processor or “socket” that hosts the cores also has a set of hierarchical caches. Often there is a single cache shared between all the cores and each core also has its own caches. The details vary greatly but what is important to know is that cache access is fast but access to the shared cache is slower and access to the main memory is even slower. It is also important to notice that as each core runs code out of its own isolated cache, you can think of them as isolated machines. Even when they appear to be operating on the same variable, they are in fact working with their own copy of the variable. This would mean that each core had its own view of the state of the machine if it wasn’t for cache coherency mechanisms. When a core updates its level 1 (L1) cache then that change is propagated to all of the L1 caches by special cache coherency hardware. This means that even though updates to a variable by each cache occur in an isolated fashion, the change is propagated and all threads see the same updated value. What is not guaranteed by cache coherency is the order of update of different variables, which can be changed by the hardware for efficiency reasons.

This machine has four cores or Processors and each core has two processing units – it is hyperthreaded. The machine can run a total of eight threads without having to involve the operating system. You can see that each core has two L1 caches – one for data and one for instructions. It also has a bigger second level, L2, cache all to itself and a very big shared L3 cache. Not all processors have multiple processing units. The Raspberry Pi 3 has a BCM2837B0 with four cores with one processing unit per core. You can often simply ignore the structure of the processor and allow the operating system to manage allocation of threads to cores. Threads are generally created with a natural CPU affinity. This means that the operating system will assign a thread to a core and from then on attempt to run the thread on the same core. So in most cases you can let the operating system manage a machine's cores, but sometimes you know better. The reasons why you would want to manually allocate threads to cores are usually very specific to the application. For example, you might have a computation-bound thread and an I/O-bound thread. Clearly, scheduling these on the same single core with two processing units would allow the computational thread to make use of the core when the I/O thread was waiting for the external world. Other reasons include placing a high priority thread into a core that no other thread was allowed to use so that it isn’t interrupted, placing a thread into a core that has preferential access to particular hardware, and so on. Another consideration is warm versus cold cache. When a thread starts running on a core then none of the data it needs will be in the cache and so it will run slowly. Slowly it transfers the data and instructions it uses, its working set, into the cache and the cache “warms up”. If the thread is switched out of the core and another thread takes it over then restoring the original thread to the original core means that it is still possible that the cache has the data that it requires – the cache is still warm. |

|||

| Last Updated ( Wednesday, 05 July 2023 ) |