AWS Adds Support For Llama2 And Mistral To SageMaker Canvas

Written by Nikos Vaggalis

Tuesday, 12 March 2024

As part of its effort to enable its customers to use generative AI for tasks such as content generation and summarization, Amazon has added the state-of-the-art Llama 2 and Mistral LLMs to SageMaker Canvas.

Amazon, IBM and Microsoft have all started to incorporate LLMs into their products to give their customers an edge. This February, IBM announced the availability of Mixtral-8x7B on its Watsonx platform. Mixtral is a variation of Mistral built as a sparse Mixture-of-Experts model, a technique that combines several expert sub-models, each of which specializes in and solves a different part of a problem. The Mixtral-8x7B model is widely known for its ability to rapidly process and analyze vast amounts of data to provide context-relevant insights. In benchmarks it outperforms Llama 2 70B while also offering faster inference.

Microsoft also announced a partnership with Mistral AI, under which it gives Mistral access to its Azure platform so that Mistral can train its cutting-edge large language models on Microsoft's infrastructure. In return, Mistral's models will be available through Azure AI Studio and Azure Machine Learning's Models as a Service. As it currently stands, the Mistral LLM comes in three variations: Tiny, Small and Medium. The names indicate the trade-off between cost efficiency and the capabilities of the models.
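The Mixture-of-Experts idea mentioned above for Mixtral can be illustrated with a toy router. This is a minimal sketch under stated assumptions, not Mistral AI's implementation: the class name `SparseMoE`, the random weights and the use of a single linear map per expert are all hypothetical simplifications. Only the general shape, eight experts of which the two best-scoring ones are used per token, follows the publicly described Mixtral design.

```python
import math
import random


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(x * y for x, y in zip(row, vector)) for row in matrix]


class SparseMoE:
    """Toy sparse Mixture-of-Experts layer (hypothetical, for illustration)."""

    def __init__(self, dim, num_experts=8, top_k=2, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        # Router: one score per expert for a given token vector.
        self.router = [[rng.gauss(0, 1) for _ in range(dim)]
                       for _ in range(num_experts)]
        # Assumption: each expert is a single dim x dim linear map.
        self.experts = [[[rng.gauss(0, 1) for _ in range(dim)]
                         for _ in range(dim)]
                        for _ in range(num_experts)]

    def route(self, token):
        """Return (expert_index, weight) pairs for the top_k experts."""
        logits = matvec(self.router, token)
        ranked = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)
        chosen = ranked[:self.top_k]
        weights = softmax([logits[i] for i in chosen])
        return list(zip(chosen, weights))

    def forward(self, token):
        """Run only the selected experts and blend their outputs."""
        output = [0.0] * len(token)
        for index, weight in self.route(token):
            expert_out = matvec(self.experts[index], token)
            output = [o + weight * e for o, e in zip(output, expert_out)]
        return output


if __name__ == "__main__":
    moe = SparseMoE(dim=4)
    token = [1.0, 0.5, -0.3, 2.0]
    print("experts used:", moe.route(token))
    print("output:", moe.forward(token))
```

Because only two of the eight experts run for any given token, the compute per token is a fraction of what the total parameter count suggests, which is one way to read the faster inference claimed for Mixtral.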

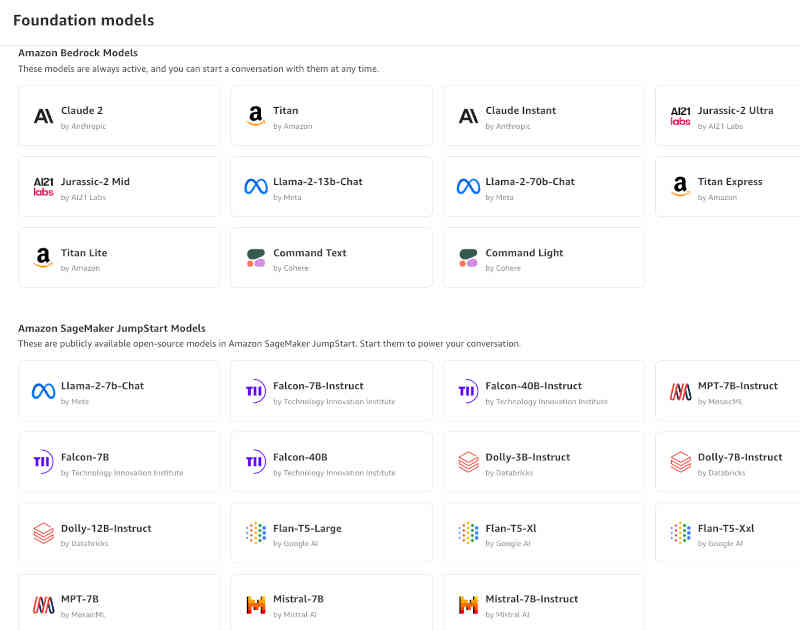

As for the other model, Llama, Meta released the initial version in February 2023 and Llama 2 in July of the same year. Llama 2 comes in three model sizes, 7B, 13B and 70B, and for the sake of historical accuracy it's worth noting that Guillaume Lample and Timothée Lacroix, two members of the original Llama author team, went on to found Mistral AI. In short, the GenAI frenzy has overtaken the big corporations.

To Amazon then... Amazon announced that SageMaker Canvas now supports three Llama 2 model variants and two Mistral 7B variants.

They come in addition to SageMaker Canvas's existing access to ready-to-use foundation models (FMs) such as Claude 2, Amazon Titan and Jurassic-2 (powered by Amazon Bedrock), as well as publicly available FMs such as Falcon and MPT (powered by SageMaker JumpStart). The difference is that Amazon customers using SageMaker Canvas don't have to write code or be technically savvy to utilize those models in their work. That is because SageMaker Canvas provides a visual point-and-click interface with which business analysts can apply ML to business problems such as customer churn prediction, fraud detection, forecasting of financial metrics and sales, inventory optimization, content generation and more, without writing any code.

As to which model performs best, SageMaker Canvas natively provides model comparison. You can select up to three different models and send the same query to all of them at once. SageMaker Canvas then collects the response from each model and shows them in a side-by-side chat UI. In general you'll probably find Mistral performing better than Llama, but in any case you should evaluate them for your specific use case.

In this way, Amazon SageMaker Canvas enables business analysts to build, deploy and use a variety of ML models without writing a line of code, where previously deep technical and coding skills were required. It's a trend that will intensify in the very near future.

More Information

Announcing support for Llama 2 and Mistral models and streaming responses in Amazon SageMaker Canvas

Related Articles

CodeStar to Simplify Development On AWS


Last Updated (Tuesday, 12 March 2024)