| Chaff Bugs Make Your Code More Secure |

| Written by Mike James | |||

| Wednesday, 15 August 2018 | |||

|

The idea that bugs could be a good thing is not a natural one to most programmers - or it shouldn't be. Bugs not only crash your program, they are ways for bad people to get into your code. Perhaps more bugs could be used to hide the really dangerous bugs? Security by obscurity is a very attractive idea. If you can't see how something works, or if you are swamped by potential targets, then this approach makes attack all the more difficult. In World War II someone invented the idea of "chaff" - small strips of aluminum foil that were ejected in a cloud to fool radar. Now a group of researchers has suggested that a cloud of "chaff" bugs might protect your program from hackers.

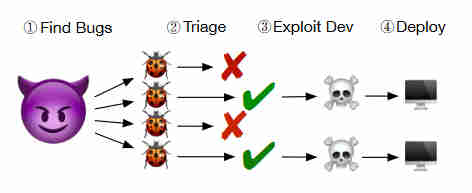

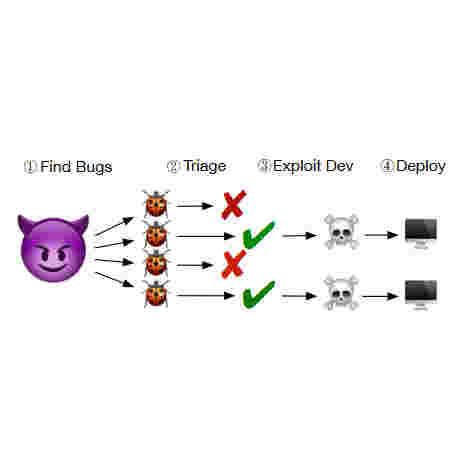

The key idea is that of a non-exploitable bug. After all, there wouldn't be a lot of advantage in inserting bugs that a hacker could make use of. The problem is that the bug still has to look like a potentially exploitable bug to keep the hacker's interest. It is hypothesized that the typical hacker's workflow is to find bugs and then spend a lot of time working out whether a bug is exploitable before spending even more time building an exploit around it. If you can make a non-exploitable bug look as if it might be exploitable, then you can waste a lot of the hacker's time and perhaps hold them up for so long that they give up.

The worst that a chaff bug can do is crash the program. Wait! Crash the program - this sounds like a real bug. To be believable the chaff bug has to seem dangerous, but if it only crashes part of an app that can easily restart, like the tab of a browser or a thread of a web server, then the crash isn't as bad as it could be and it might be acceptable.

However, all this sounds difficult. The researchers, Zhenghao Hu, Yu Hu and Brendan Dolan-Gavitt, all at New York University, had a bit of a head start by using LAVA, a program which scans a C/C++ program and finds places to inject overflow bugs. I have to say I didn't know this existed and probably wouldn't have guessed that such a thing was desirable or even possible. It was created to make test code for bug-detecting software.

There is one chilling little comment in the paper:

"To actually trigger the bug, LAVA also makes note of attack points along the trace - that is, places where the stored data from the DUA could be used to corrupt memory. In its original implementation, LAVA considers any pointer argument to a function call to be an attack point; in our work we instead create new attack points that cause controlled stack and heap overflows at arbitrary points in the program."

Notice "any pointer argument to a function call to be an attack point" - gulp... The researchers also reveal that LAVA was found to be able to add thousands of bugs to a program. We really are that close to a non-working program most of the time.

The bugs injected into the code were heap and stack overflow bugs, which are generally considered to be the main route into a program exploit. The problem of making the bugs non-exploitable wasn't an easy one to crack. One of the main ideas was to make the buffer overflow only overwrite variables that weren't actually used by the program. This meant controlling the layout of the stack and heap or simply making use of how the particular compiler laid out memory.
This approach only works for the stack. A second method is to constrain the values written by the overflow so that they cannot lead to code execution. This needs a lot of thought to be sure that the bug isn't exploitable.

OK, so assume that the chaff bugs are safe, how do we hide this from the attacker? The two methods described above for making a chaff bug safe are fairly easy to detect. The solution is obfuscation. The unused variables have to be incorporated into the program to make them look as if they are used, and the constraints on values have to be spread out in the program to make them hard to piece together.

So does it work? The paper asks three questions.
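The two ways of making an overflow harmless described above can be sketched in a few lines of C. This is only an illustration under stated assumptions, not the paper's implementation or anything LAVA generates: the names `struct frame`, `decoy`, `live`, `chaff_copy_unused` and `chaff_copy_constrained` are all hypothetical, and a struct stands in for a real stack frame so that the layout is fixed and the "overflow" stays inside one object, which keeps the example well-defined.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define BUF_LEN 8

/* Hypothetical stand-in for a stack frame. A real chaff bug would rely on
   the compiler's actual stack or heap layout instead. */
struct frame {
    char     buf[BUF_LEN];  /* buffer the attacker can overrun          */
    uint32_t decoy;         /* never read by the program's real logic   */
    uint32_t live;          /* value the program actually depends on    */
};

/* The sketch assumes there is no padding between the members. */
static_assert(offsetof(struct frame, decoy) == BUF_LEN,
              "decoy must sit directly after buf");
static_assert(offsetof(struct frame, live) == BUF_LEN + sizeof(uint32_t),
              "live must sit directly after decoy");

/* Method 1: the overrun can spill out of buf into decoy, but the copy is
   capped so it never reaches live. */
static void chaff_copy_unused(struct frame *f, const unsigned char *in, size_t n)
{
    const size_t limit = offsetof(struct frame, live);
    if (n > limit)
        n = limit;
    memcpy((unsigned char *)f, in, n);
}

/* Method 2: the overrun may reach live, but every byte that lands on it is
   forced to zero, so the attacker cannot choose the value written there. */
static void chaff_copy_constrained(struct frame *f, const unsigned char *in, size_t n)
{
    const size_t live_off = offsetof(struct frame, live);
    if (n > sizeof *f)
        n = sizeof *f;
    size_t unconstrained = n < live_off ? n : live_off;
    memcpy((unsigned char *)f, in, unconstrained);
    if (n > live_off)
        memset((unsigned char *)f + live_off, 0, n - live_off);
}
```

Feeding either function a 16-byte input of `0x41` bytes overruns `buf` and clobbers `decoy`. With `chaff_copy_unused` the value of `live` is left alone, while with `chaff_copy_constrained` it ends up as zero whatever the attacker supplied. In this bare form both tricks are obvious to anyone reading the code, which is why they would need to be obfuscated.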

As you might guess, the paper presents evidence that everything works as expected. The most important result is that well-known fuzzing tools found the bugs and they were rated as mostly exploitable or probably exploitable. Of course, there hasn't yet been an escalation to triage tools that explicitly take chaff into account.

The conclusion is:

"In this paper, we have presented a novel approach to software security that adds rather than removes bugs in order to drown attackers in a sea of enticing-looking but ultimately non-exploitable bugs. Our prototype, which is already capable of creating several kinds of non-exploitable bug and injecting them in the thousands into large, real-world software, represents a new type of deceptive defense that wastes skilled attackers’ most valuable resource: time. We believe that with further research, chaff bugs can be a valuable layer of defense that provides deterrence rather than simply mitigation."

So what do you think?

Personally, having just finished teaching a course on how to avoid overflow bugs in C, I think that elimination is better than adding decoys. The fact that we have reached the 21st century and still can't stop a buffer overflowing is a disgrace. And before you think I'm suggesting that we give up on C and use something else, what I'm saying is that if we have tools that can inject thousands of overflow bugs into code, why don't we have tools that can do a better job of removing them, and why don't we use the ones we do have? I guess it's because a significant number of our comrades are still using emacs and vi and claiming that an IDE is for sissies.

More Information

Chaff Bugs: Deterring Attackers by Making Software Buggier

Related Articles

Instabug Analyzes 100,000,000 Bugs

|

|||

| Last Updated ( Wednesday, 15 August 2018 ) |