Breaking Fitness Records Without Even Moving - Spoofing The Fitbit

Written by Alex Armstrong

Thursday, 29 June 2017

Some new results indicate that it might be easy to falsify the data gathered by even the best-protected biometric devices. Researchers have found two distinct ways of compromising a Fitbit.

Hacking a fitness device doesn't seem like a rewarding occupation: you already have access to your own data, and who else could be interested? However, this ignores the increasing role that such devices play in insurance and health plans. Biometric data is becoming increasingly valuable, and hence a target for hackers.

A team of researchers, Hossein Fereidooni, Jiska Classen, Tom Spink, Paul Patras, Markus Miettinen, Ahmad-Reza Sadeghi, Matthias Hollick and Mauro Conti, from the universities of Darmstadt, Padua and Edinburgh, must have spent a considerable amount of time reverse engineering the Fitbit Flex and One at both the software and the hardware level. Their new paper makes interesting reading, if only to find out how to go about this sort of task. What is surprising is that they succeeded in compromising both the software and the hardware of a fitness device that seems to take security fairly seriously.

The Fitbit software is not an open door waiting for someone to walk through. It makes use of end-to-end encryption. In earlier versions of the device, however, encryption was an option that had to be switched on, and the servers still accept unencrypted data packets. In this mode the only security is obfuscation. The format of the unencrypted packets was deduced by comparing the packets sent with the data presented to the user in the standard app. With the structure of the packets mostly worked out, it was possible to send a data frame obtained from one device under another device's ID, an impersonation attack. As the researchers put it:

The fact that we are able to inject a data report associated to any of the studied trackers’ IDs reveals both a severe DoS risk and the potential for a paid rogue service that would manipulate records on demand.
Specifically, an attacker could arbitrarily modify the activity records of random users, or manipulate the data recorded by the device of a target victim, as tracker IDs are listed on the packaging. Likewise, a selfish user may pay for a service that exploits this vulnerability to manipulate activity records on demand, and subsequently gain rewards.

One of the problems in creating new packets was that the CRC algorithm wasn't known. Fortunately, if a packet was sent with the wrong CRC, the server sent back an error message containing the CRC it was expecting. This value could then be inserted into the packet, which could be resent without a CRC error. The trick only worked a small number of times, because the server applied a back-off policy when too many CRC errors occurred.
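The CRC trick amounts to using the server as a checksum oracle. The Python sketch below models the impersonation-plus-oracle sequence under loudly stated assumptions: the frame layout (a 6-byte tracker ID at the start, a 16-bit big-endian CRC at the end), the mock `LeakyServer` class, its failure limit, and the use of `binascii.crc_hqx` (CRC-CCITT) as the server's checksum are all hypothetical stand-ins, not Fitbit's real protocol. The client never computes the CRC itself; it swaps in another tracker's ID, sends a dummy checksum and copies back whatever value the error reply reveals.

```python
import binascii
import struct

ID_LEN = 6                          # hypothetical: tracker ID is the first 6 bytes
CRC_FMT = ">H"                      # hypothetical: 16-bit big-endian CRC at the end
CRC_SIZE = struct.calcsize(CRC_FMT)


def server_crc(body: bytes) -> int:
    # Stand-in for the server's checksum. The attacker code below never calls
    # this, mirroring the researchers not knowing the algorithm.
    return binascii.crc_hqx(body, 0)


class LeakyServer:
    """Hypothetical mock of a server whose CRC errors leak the expected value."""

    def __init__(self, max_failures: int = 3):
        self.max_failures = max_failures
        self.failures = 0
        self.accepted = []

    def upload(self, frame: bytes) -> dict:
        if self.failures >= self.max_failures:
            # Back-off: after too many CRC errors the server stops answering.
            return {"status": "backoff"}
        body = frame[:-CRC_SIZE]
        (crc,) = struct.unpack(CRC_FMT, frame[-CRC_SIZE:])
        expected = server_crc(body)
        if crc != expected:
            self.failures += 1
            # The design flaw: the error reveals the checksum the server wanted.
            return {"status": "crc_error", "expected_crc": expected}
        self.accepted.append(body)
        return {"status": "ok"}


def retarget(body: bytes, victim_id: bytes) -> bytes:
    # Impersonation: keep the captured payload, swap in another tracker's ID.
    if len(victim_id) != ID_LEN:
        raise ValueError("victim ID has the wrong length")
    return victim_id + body[ID_LEN:]


def forge_upload(server: LeakyServer, body: bytes) -> dict:
    # Try once with a dummy CRC of 0, then resend using the leaked value.
    reply = server.upload(body + struct.pack(CRC_FMT, 0))
    if reply["status"] == "crc_error":
        reply = server.upload(body + struct.pack(CRC_FMT, reply["expected_crc"]))
    return reply


captured = bytes.fromhex("a1b2c3d4e5f6") + (10_000).to_bytes(4, "little")
server = LeakyServer()
print(forge_upload(server, retarget(captured, bytes.fromhex("010203040506"))))
# prints {'status': 'ok'}
```

Each forged upload typically burns one failed attempt, so a back-off limit only caps how many leaked checksums an attacker can collect before being cut off; it does not remove the leak.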

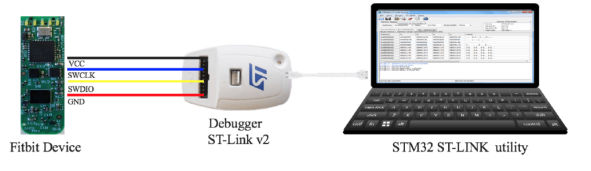

After trying the software attack, and anticipating that the vulnerabilities found would be closed, the team turned its attention to the hardware. If you are a software person, this part of the reverse engineering will seem all the more amazing. After taking the device apart, they found that a set of concealed pins formed a standard debug port. This allowed the firmware to be dumped and, after a lot more work, the encryption keys to be located. Although this sounds promising, the keys are device-specific and so could only be found by taking a device apart and reading them out of its firmware dump. It was, however, possible to find a byte that, if flipped, would turn the encryption off. This is a good find, but again it relies on the server accepting plaintext packets. The real breakthrough was discovering that the packets were stored in unencrypted form in the EEPROM before being encrypted. This allows any data to be injected and then sent to the server fully encrypted.

So what have we learned? In the paper's own words:

The examined trackers support full end-to-end encryption, but do not enforce its use consistently. This allows us to perform an in-depth analysis of the data synchronization protocol and ultimately fabricate messages with false activity data, which were accepted as genuine by the Fitbit servers.

Suggestion 1: End-to-end encryption between trackers and remote servers should be consistently enforced, if supported by device firmware.

Fitbit say that all models after 2015 consistently enforce encryption.

Protocol message design: Generating valid protocol messages (without a clear understanding of the CRC in use) is enabled by the fact that the server responds to invalid messages with information about the expected CRC values, instead of a simple “invalid CRC”, or a more general “invalid message” response.

Suggestion 2: Error and status notifications should not include additional information related to the contents of actual protocol messages.

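Suggestion 2 is straightforward to act on in server code. The following is a minimal Python sketch, assuming a hypothetical frame with a trailing 16-bit big-endian CRC and using `binascii.crc_hqx` purely as a stand-in checksum; the function name `check_frame` and its `debug` flag are illustrative, not taken from Fitbit's code. The detailed mismatch goes to a server-side log, while the client only ever receives a generic reply unless a debug mode is switched on.

```python
import binascii
import logging
import struct

log = logging.getLogger("uploads")
CRC_FMT = ">H"                      # hypothetical: 16-bit big-endian CRC trailer
CRC_SIZE = struct.calcsize(CRC_FMT)
GENERIC = {"status": "error", "reason": "invalid message"}


def check_frame(frame: bytes, debug: bool = False) -> dict:
    """Validate a frame and return a reply that is safe to send to the client."""
    if len(frame) <= CRC_SIZE:
        return dict(GENERIC)
    body = frame[:-CRC_SIZE]
    (received,) = struct.unpack(CRC_FMT, frame[-CRC_SIZE:])
    expected = binascii.crc_hqx(body, 0)  # stand-in checksum
    if received == expected:
        return {"status": "ok"}
    # Full detail stays in the server's own logs...
    log.debug("CRC mismatch: got %04x, expected %04x", received, expected)
    if debug:
        # ...and only a debug mode echoes it back. Shipping this path is the
        # kind of leak that turns an error message into a CRC oracle.
        return {"status": "error", "reason": "crc", "expected_crc": expected}
    return dict(GENERIC)


body = b"\x01\x02\x03"
good = body + struct.pack(CRC_FMT, binascii.crc_hqx(body, 0))
bad = good[:-1] + bytes([good[-1] ^ 1])  # corrupt the CRC by one bit
print(check_frame(good))  # {'status': 'ok'}
print(check_frame(bad))   # {'status': 'error', 'reason': 'invalid message'}
```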
This seems almost obvious, but I can imagine writing an error message that supplies more information than it needs to during a debugging phase.

CRCs do not protect against message forgery, once the scheme is known. For authentication, there is already a scheme in place to generate subkeys from the device key. Such a key could also be used for message protection.

Suggestion 3: Messages should be signed with an individual signature subkey which is derived from the device key.

To turn to the hardware:

The microcontroller hardware used by both analyzed trackers provides memory readout protection mechanisms, but were not enabled in the analyzed devices. This opens an attack vector for gaining access to tracker memory and allows us to circumvent even the relatively robust protection provided by end-to-end message encryption as we were able to modify activity data directly in the tracker memory. Since reproducing such hardware attacks given the necessary background information is not particularly expensive, the available hardware-supported memory protection measures should be applied by default.

Suggestion 4: Hardware-supported memory readout protection should be applied. Specifically, on the MCUs of the investigated tracking devices, the memory of the hardware should be protected by enabling chip readout protection level 2.

Finally, given that no system is perfect, abnormal activity detection seems like a good idea:

In our experiments we were able to inject fabricated activity data with clearly unreasonably high performance values (e.g. more than 16 million steps during a single day). This suggests that data should be monitored more closely by the servers before accepting activity updates.

Suggestion 5: Fraud detection measures should be applied in order to screen for data resulting from malicious modifications or malfunctioning hardware.
For example, accounts with unusual or abnormal activity profiles should be flagged and potentially disqualified, if obvious irregularities are detected.

Sounds like a job for a neural network to me. Meanwhile, if you want to reach your fitness targets without all that tedious effort, you now know how to do it.
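Suggestions 3 and 5 both describe checks a server could run before accepting an activity upload. The Python sketch below combines them under stated assumptions: the subkey is derived with a single HMAC-SHA256 step over a hypothetical label (the article says only that a subkey scheme exists, not how it works), the step count is assumed to sit in the last four bytes of the message, and the thresholds `MAX_DAILY_STEPS` and `Z_LIMIT` are invented for illustration. It is a simple rule-based screen rather than a neural network, but it would already catch a 16-million-step day. Unlike a CRC, the HMAC tag cannot be recomputed by someone who knows the algorithm but not the device key.

```python
import hashlib
import hmac
import struct
from statistics import mean, stdev

MAX_DAILY_STEPS = 100_000  # hypothetical physical ceiling for one day
Z_LIMIT = 4.0              # hypothetical: std devs above the user's own norm


def derive_subkey(device_key: bytes, label: bytes = b"upload-signing") -> bytes:
    # Hypothetical derivation; Fitbit's real subkey scheme is not described.
    return hmac.new(device_key, label, hashlib.sha256).digest()


def sign(device_key: bytes, body: bytes) -> bytes:
    # Suggestion 3: a MAC keyed by a per-device subkey instead of a bare CRC.
    return hmac.new(derive_subkey(device_key), body, hashlib.sha256).digest()


def plausibility_flags(history: list[int], steps: int) -> list[str]:
    # Suggestion 5: a rule-based screen for impossible or unusual days.
    flags = []
    if not 0 <= steps <= MAX_DAILY_STEPS:
        flags.append("outside plausible range")
    if len(history) >= 7:  # need some history before judging a baseline
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and (steps - mu) / sigma > Z_LIMIT:
            flags.append("far above personal baseline")
    return flags


def accept_upload(device_key: bytes, body: bytes, tag: bytes,
                  history: list[int]) -> str:
    # Constant-time comparison avoids leaking how much of the tag matched.
    if not hmac.compare_digest(sign(device_key, body), tag):
        return "rejected: bad signature"
    (steps,) = struct.unpack("<I", body[-4:])  # hypothetical field position
    flags = plausibility_flags(history, steps)
    return ("flagged: " + ", ".join(flags)) if flags else "accepted"


key = bytes(16)  # placeholder device key
week = [8000, 9500, 7200, 10100, 8800, 9100, 7600]
normal = b"day" + struct.pack("<I", 9000)
huge = b"day" + struct.pack("<I", 16_000_001)
print(accept_upload(key, normal, sign(key, normal), week))  # accepted
print(accept_upload(key, huge, sign(key, huge), week))
# flagged: outside plausible range, far above personal baseline
print(accept_upload(key, huge, b"\x00" * 32, week))  # rejected: bad signature
```

Note that the signature check only helps against forged network messages; as the hardware attack shows, data altered in tracker memory before signing would still need the plausibility screen.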

More Information

Breaking Fitness Records without Moving: Reverse Engineering and Spoofing Fitbit

Related Articles

Pebble Taken Over By FitBit - Developers & Users Abandoned

Your Android Could Leak Data Via USB Charging

Your Phone's Battery Leaks - Your Id That Is

Last Updated (Thursday, 29 June 2017)