The Elephant In The Room - AI Vision Systems Are Over Sold?

Written by Mike James

Wednesday, 26 September 2018

There is currently a lot of talk about a new "AI winter" brought on by the overselling of what deep learning can do. A new study that literally addresses the problem of the elephant in the room is being used to drive the negative hype. Are deep learning vision systems really deeply flawed? Are we on the wrong track entirely?

The latest research on how convolutional neural networks behave is focused on object trackers or detectors, which are a slightly more specialized version of the more general convolutional network. Instead of just having to classify a photo as a dog or a cat, an object detector has to place bounding boxes around any dogs or cats it detects in the photo. Clearly being able to recognize a cat or a dog is the same sort of skill, but the object detector has to process the entire image and reject most of the sub-areas as being nothing of interest.

The big and currently unsolved problem with all neural networks is the existence of adversarial images. If a network correctly classifies a photo as a cat, say, then it is possible to work out a very small change to the pixel values in the image that causes it to be classified as something else - a dog, say - and yet the changes are so small that a human simply doesn't see them. That is, an adversarial image looks like a cat to a human, but it is classified as a dog or something else by the network. This is a strange enough idea, but you can take it a step further and create so-called universal adversarial images which have a high probability of fooling a range of networks, irrespective of their exact architecture or training.

The latest work, "The Elephant in the Room" by Amir Rosenfeld, Richard Zemel and John K. Tsotsos, looks into the fragility of object detection using deep learning. They created an alternative sort of adversarial image by putting an elephant in the room. Take a trained object detector and show it a scene that it labels correctly. Now put an unexpected object into the scene - an elephant, say - and the object detector not only labels the elephant incorrectly, it also changes its labels for other objects in the scene to incorrect classifications.
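To make the before-and-after comparison concrete, here is a minimal sketch of how you might check which detections change when an object is pasted into a scene. It is not the method used in the paper. The `Detection` type, the `compare_detections` helper, the 0.5 overlap threshold and all of the boxes and labels are made up for illustration, and a real experiment would take both lists from an actual detector.

```python
from typing import NamedTuple


class Detection(NamedTuple):
    label: str
    box: tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a, b):
    """Intersection over union of two axis-aligned boxes."""
    ax0, ay0, ax1, ay1 = a
    bx0, by0, bx1, by1 = b
    inter_w = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    inter_h = max(0.0, min(ay1, by1) - max(ay0, by0))
    inter = inter_w * inter_h
    union = (ax1 - ax0) * (ay1 - ay0) + (bx1 - bx0) * (by1 - by0) - inter
    return inter / union if union > 0 else 0.0


def compare_detections(before, after, threshold=0.5):
    """Report what happened to each original detection after the edit."""
    report = []
    for det in before:
        # Best-overlapping detection in the edited image, if there is one.
        best = max(after, key=lambda d: iou(det.box, d.box), default=None)
        if best is None or iou(det.box, best.box) < threshold:
            report.append((det.label, "lost"))
        elif best.label != det.label:
            report.append((det.label, f"relabelled as {best.label}"))
        else:
            report.append((det.label, "unchanged"))
    return report


# Hypothetical detector output, invented for this example.
before = [
    Detection("person", (10, 20, 110, 220)),
    Detection("cup", (150, 180, 170, 200)),
    Detection("chair", (200, 100, 300, 250)),
]
after = [
    Detection("person", (12, 22, 108, 218)),
    Detection("couch", (198, 102, 302, 248)),
]

report = compare_detections(before, after)
for label, outcome in report:
    print(label, "->", outcome)
```

Matching by overlap rather than by list position matters because a detector is free to return its boxes in a different order, or with slightly different coordinates, once the image has been edited.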

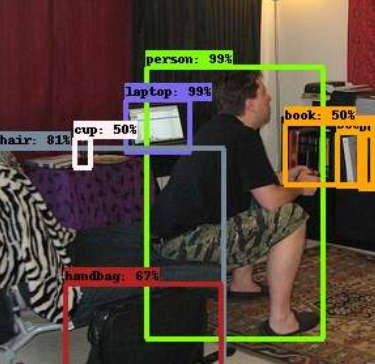

Object detection with not an elephant to be seen - personally I haven't yet got over the fact that this works so well.

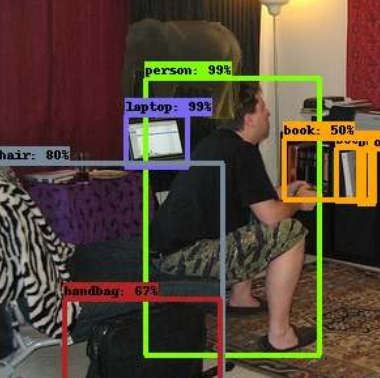

Yes, there is an elephant in the room and the object detector is doing the human thing and not talking about it. Also notice that the cup is no longer detected - a small change, I say, considering there is an elephant in the room.

This, of course, is jumped on as evidence that current AI just isn't working. To quote a recent article in Quanta:

“It’s a clever and important study that reminds us that ‘deep learning’ isn’t really that deep,” said Gary Marcus, a neuroscientist at New York University who was not affiliated with the work.

Well, yes, deep learning isn't that deep, but that isn't the meaning most readers will take.

There is a misplaced reaction to this study due to an overestimation of what AI, and deep learning in particular, is claiming. We don't have an artificial brain yet and our deep neural networks are tiny compared to the brain. However, they are remarkable in the way that they organize and generalize data and we need to study what is going on even at this basic level.

Yes, deep neural networks aren't deep when compared to the brain, and this study is the equivalent of showing the eye and the optic nerve some pictures and being surprised when showing it an elephant makes it go wrong. Our neural networks are the building blocks of future AI.

There is no sense in which a deep neural network reasons about the world. It doesn't have an internal linguistic representation that allows it to reason that an elephant shouldn't be there, and it doesn't take a second look as a result. Currently our deep neural networks are statistical analyzers and if you throw them a statistical anomaly then they will get it wrong. This is not a reason to bring out the heavy criticism and say that a 3 year old child could do better. This is not an artificial child as opposed to a real child, but a small isolated chunk of "artificial brain" with a pitifully small number of neural analogs - we need to remain amazed that they achieve even what they do.
This said, there are some very real questions raised by the study. After all, from a statistical point of view it isn't surprising that an elephant, or other out of place item, would be misclassified. It is a statistical anomaly. What is more surprising is that the presence of an elephant causes the classifier to get objects in other areas of the image wrong. Why is there an interaction?

This brings us back to the other sort of adversarial image. It seems that adversarial images are outside the statistical distribution of the network's training set - they are not like natural images. If you look at the perturbations that are added to images to make them misclassified, they are usually regular bands or regular noise patterns, quite unlike anything that occurs in a natural image. This noise places the image outside of the set of images that the network has been trained to work with, and as such misclassification might not be so unexpected.

How does this apply to the elephant in the room? Same argument. The elephant has been cut and pasted into the image. The presence of editing edges and background changes probably moves the image from the set of natural images into the set of manipulated images and hence we have the same problem.

The authors of the paper speculate that this is indeed part of the problem - see "Out of Distribution Examples" - however, they also suggest some other possibilities. In particular, you might think that object detection would not suffer from non-localities caused by placing an elephant some distance away from an object that becomes misclassified. Unfortunately this expectation doesn't take into account the fact that, at the moment, neural networks are bad at coping with changes of scale. They have to be trained to detect objects at different sizes within an image and this introduces non-local effects.

The study does not highlight the fact that deep neural networks are over-claiming what they can do. What it highlights is that, without an understanding of what is happening, an innocent can read too much into the exceptional performance of object detectors. We need to carry on investigating exactly how adversarial inputs reveal how neural networks actually work and we need to understand that true AI isn't going to involve a single network, no matter how deep it is.
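For readers who want to see how a change too small to notice can still flip a classification, here is a toy sketch using a linear "cat versus dog" scorer. It is in the spirit of the fast gradient sign method and is not taken from the paper. The weights, bias, image and `epsilon` are all invented for illustration. A real network is not linear, so there the direction of the change would come from the gradient of its loss rather than from the weights directly.

```python
def score(weights, bias, pixels):
    """Linear classifier score: positive means "cat", otherwise "dog"."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias


def classify(weights, bias, pixels):
    return "cat" if score(weights, bias, pixels) > 0 else "dog"


def sign(x):
    return (x > 0) - (x < 0)


def perturb(weights, pixels, epsilon):
    """Move each pixel by at most epsilon in the direction that lowers
    the score, keeping values inside the valid range [0, 1]."""
    return [
        min(1.0, max(0.0, p - epsilon * sign(w)))
        for w, p in zip(weights, pixels)
    ]


# Hypothetical toy model and image, made up for this example.
n = 1000
weights = [0.01 if i % 2 == 0 else -0.01 for i in range(n)]
bias = 0.05
image = [0.5] * n  # a flat grey "photo" with pixel values in [0, 1]

adversarial = perturb(weights, image, epsilon=0.01)

original_label = classify(weights, bias, image)
adversarial_label = classify(weights, bias, adversarial)
largest_change = max(abs(a - p) for a, p in zip(adversarial, image))

print(original_label, adversarial_label, largest_change)
```

No pixel moves by more than about one percent of its range, but because every one of the thousand pixels is nudged in the direction that hurts the score, the small changes add up to enough to cross the decision boundary.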

More Information

Machine Learning Confronts the Elephant in the Room

Related Articles

Adversarial Attacks On Voice Input

Self Driving Cars Can Be Easily Made To Not See Pedestrians

Detecting When A Neural Network Is Being Fooled

Neural Networks Have A Universal Flaw

The Flaw In Every Neural Network Just Got A Little Worse

The Deep Flaw In All Neural Networks


Last Updated ( Wednesday, 26 September 2018 )