We Still Beat AI At Angry Birds

Written by Alex Armstrong

Sunday, 13 October 2019

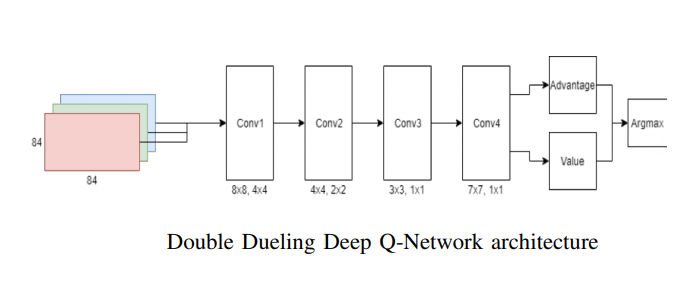

Humans! Rest easy, we still beat the evil AI at the all-important game of Angry Birds. Recent research by Ekaterina Nikonova and Jakub Gemrot of Charles University (Czech Republic) indicates why this is so. Angry Birds is a fun and seemingly simple game, but it is harder than it looks, for AI at least. Unlike many of the games at which AI has proved to be best, it involves a physics simulation, courtesy of Box2D. You must know the game, but just in case you don't, it involves firing the angry birds from a slingshot to hit various structures. Basically, it's fun to destroy things.

So why is it so difficult for AI? The researchers' paper suggests the following:

"Firstly, this game has a large number of possibilities of actions and nearly infinite amount of possible levels, which makes it difficult to use simple state space search algorithms for this task. Secondly, the game requires a planning of sequence of actions, which are related to each other or finding single precise shot. For example, a poorly chosen first action can make a level unsolvable by blocking a pig with a pile of objects. Therefore, to successfully solve the task, a game agent should be able to predict or simulate the outcome of it is own actions a few steps ahead."

There have been a number of AIBirds competitions since 2012, and the latest saw the entry of a Deep Q-learning network of the sort DeepMind used to master Atari games. The task is particularly interesting because there are many levels and each introduces new features. Trained on a set of 21 levels, the network was able to solve levels it had not seen, showing that it can generalize. A big training problem is that the researchers don't have access to the official level generator, so they cannot train on an extended set of levels.
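For readers curious about the technique, here is a minimal sketch of the core Deep Q-learning ingredients in Python: an experience replay buffer, epsilon-greedy action selection and the standard temporal-difference target. It is not the researchers' code. The discretized slingshot angles in `ANGLES_DEG`, the function names and the plain list of Q-values (standing in for the outputs a real DQN's neural network would produce) are all assumptions made for illustration.

```python
import random
from collections import deque

# Hypothetical action space: the slingshot release angle, discretized into a
# fixed set of choices. The paper's actual action encoding may differ.
ANGLES_DEG = [10 * i for i in range(1, 9)]  # 10, 20, ..., 80 degrees


class ReplayBuffer:
    """Fixed-size store of (state, action, reward, next_state, done) transitions.

    Once full, the oldest transitions are discarded automatically.
    """

    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)

    def push(self, transition):
        self.buffer.append(transition)

    def sample(self, batch_size, rng=random):
        # Random minibatches break the correlation between consecutive shots.
        return rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def epsilon_greedy(q_values, epsilon, rng=random):
    """Explore with probability epsilon, otherwise pick the highest-valued action."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


def td_target(reward, next_q_values, done, gamma=0.99):
    """DQN target: r + gamma * max_a' Q(s', a'), or just r when the level ends."""
    if done:
        return reward
    return reward + gamma * max(next_q_values)


def squared_td_error(q_value, target):
    """Loss the network would minimize for the action actually taken."""
    return (target - q_value) ** 2


# Usage with made-up numbers (a real agent would get these from the network).
q_now = [0.1, 0.25, 0.5, 0.25, 0.0, 0.2, 0.1, 0.05]
action = epsilon_greedy(q_now, epsilon=0.0)    # greedy: index 2, i.e. 30 degrees
target = td_target(reward=1.0, next_q_values=[0.5, 2.0], done=False, gamma=0.5)
loss = squared_td_error(q_now[action], target)  # (2.0 - 0.5) ** 2 = 2.25
```

The discount factor `gamma` is what lets the agent value a shot for the damage it enables later, which matters in a game where, as the researchers note, a poor first shot can make a level unsolvable.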

So how did it match up to humans? Not quite well enough, as the researchers admit: "One of the goals that we did not quite achieve in this work, is to outperform humans in Angry Birds game. Despite the fact we have come close to a human-level performance on selected 21 levels, we still lost to 3 out of 4 humans in obtaining a maximum possible total score."

On the whole, though, the AI is getting better: "Overall, while our agent had outperformed some of the previous and current participants of AIBirds competition and set new high-score for one of levels, there are still a lot of room for improvements."

The major problem seems to be a lack of training data, yet humans manage to crack new, unseen levels with hardly any data at all. It might be that we just like throwing things and notice when something happens to our advantage.

More Information

Deep Q-Network for Angry Birds

Related Articles

OpenAI Five Defeated But Undaunted

OpenAI Five Dota 2 Bots Beat Top Human Players

Getting Started with Box2D in JavaScript

AI Learns To Solve Rubik's Cube - Fast!


Last Updated: Sunday, 13 October 2019