| AI Learns To Solve Rubik's Cube - Fast! |

| Written by Lucy Black | |||

| Saturday, 31 August 2019 | |||

|

Yes, this is another "AI masters a game or puzzle" piece of news, but this one is surprising. Having learned to solve a logical problem, AI turns in a performance that typically not only beats a human, but is as good as hand-crafted methods. There is a lot of hype surrounding AI, but don't let this, and its criticism, blind you to how far we have come. A lot of this progress is simply due to the increasing computer power we can throw at the problem, but this is not the point. Neural networks are unreasonably effective because they are so simple, even if they need so much computing power.

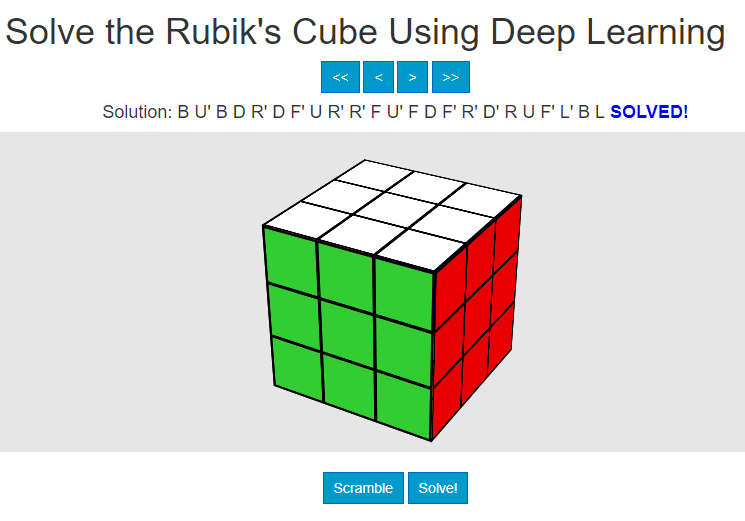

The latest neural network to impress is DeepCubeA from Forest Agostinelli, Stephen McAleer, Alexander Shmakov and Pierre Baldi of the University of California, Irvine. This is a deep neural network that learns a range of combinatorial puzzles - sliding block15, 24, 35, 48 puzzles, Lights Out, Sokoban and, of course, Rubik's cube. The network learns a reinforcment value function, but it does this "backwards". That is, it starts from a solution and randomly takes moves away from the goal. As it steps away from the goal, the moves and configurations become increasingly low in value, - i.e. they are moving away from the goal. This gives you a value function for each configuration and this is then used in an A* search as the heuristic on real puzzles. This is simple, direct and clever at the same time. DeepCubeA has been developed from an earlier attempt that modeled itself on DeepMind's Alpha Go. The difference seems to be that the guided A* algorithm tends to find the shortest solution. "DeepCubeA finds a shortest path to the goal for puzzles for which a shortest path is computationally verifiable: 60.3% of the time for the Rubik’s cube and over 90% of the time for the 15 puzzle, 24 puzzle and Lights Out." So how well does it do? The first thing to say is that DeepCubeA isn't guarenteed to find an optimal solution and there is an algorithm that is - PDB. In tests against this algorithm DeepCubeA managed to get 60% optimal solutions, but 97% are within 2 operations of optimal. Not only does if find good solutions, it does it fast - PDB took nearly 4 hours where DeepCubeA took 18 seconds. Similar good performance was returned on the other problems, but it is Rubik's cube that is likely to capture the imagination. Rubik's cube champions solve the cube in around 50 moves, the AI needs only 20 moves. The AI is fast, but the humans take at best only 5 to 6 seconds and that includes moving a physical cube. You can try the program out and see what you think of its solution - it's fast and can run on modest hardware. It is fairly obvious that this is not using any of the "human" algorithms, but it is impressive and it seems to be generalizable to a range of planning problems: "The generality of the core algorithm suggests that it may have applications beyond combinatorial puzzles, as problems with large state spaces and few goal states are not rare in planning, robotics and the natural sciences." One interesting thought - DeepCubeA learned the value of a move by working backwards. It also learned the value of moves close to the goal before extending its knowlege to more distance states. Could humans learn something in the same way?

More InformationSolving the Rubik's Cube with Deep Reinforcement Learning and Search Related ArticlesRubik's Cube Is Hard - NP Hard Rubik's cube - the order of God's Number 17x17x17 Rubik Cube Solved In 7.5 Hours To be informed about new articles on I Programmer, sign up for our weekly newsletter, subscribe to the RSS feed and follow us on Twitter, Facebook or Linkedin.

Comments

or email your comment to: comments@i-programmer.info |

|||

| Last Updated ( Saturday, 31 August 2019 ) |