| Fractal Image Compression |

| Written by Mike James | ||||||

| Friday, 08 April 2022 | ||||||

Page 3 of 5

Iterating the IFSIf you feel that you are beginning to get lost in all this detail - don't give up we are nearly home. The key ideas are a set of contractive affine transformations and a novel way of applying them. OK, so you understand this much - but what exactly is interesting about an IFS? The answer is that like simple contractive transformations if you keep on applying an IFS, i.e. iterating it, the result slowly settles down to a final result. In other words, the output of an IFS converges to a particular set of points. This set of points is called the attractor of the IFS. In mathematical terms:

where Cn is the IFS applied n times, B is any set of points and A is the attractor. It also isn't difficult to see that if A is the attractor of the IFS then:

That is the attractor is also a "fixed point" of the transformation - applying the IFS doesn't change it. An attractorSo what!? An IFS converges to a particular result if you iterate it enough times on any starting set - how can this be useful? Well it would be little more than a theoretical curiosity except for the fact that the sets that an IFS can be made to converge to can be very complex - fractals even. An example will soon convince you that this is true. First, it is worth introducing a shorthand way of writing an IFS. Instead of writing out the values in matrix form it is simpler to list them in a table. If the contractive affine transformation is:

then the IFS can be listed in a table of values with columns a b c d e f. For example, an IFS consisting of four affine transformations can be written:

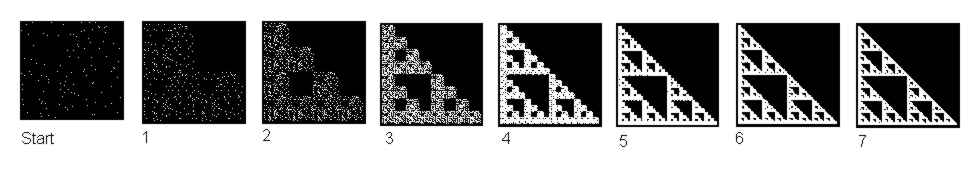

which you have to admit is shorter than writing out the four sets of equations. The question is what do you think the attractor of this simple IFS is? To find out all you have to do is start from any old set of points and apply the IFS. Next you apply the IFS to the result and carry on until there is no change in the output. For example, starting from a random set of dots you can see that the image slowly converges to something very surprising, a Sierpinski triangle.

The random set of points converges to the Sierpinski triangle, a classical fractal, with each application of the IFS. It doesn't matter what the starting set of points is, the end product is a Sierpinski triangle which is the attractor for this IFS.

The collage theoremAt last we are very close to seeing how all of this might be used as a method of compressing images. Notice that the Sierpinski triangle is a very complex image that is generated using just the data in the table specifying the IFS that it is the attractor for. Also notice that the IFS method of generating it is resolution independent. If you start off with an array of 100x 100 points or 1000x1000 or whatever the transformation can be applied and you will see more detail the more points you have. Only18 coefficients generate this image. In the case of a 100x100 points a 1.22KByte binary image can be compressed to 18 Bytes - a compression ratio of just less than 70:1. Of course by increasing the size of the image generated the ratio can be made even higher because 18 bytes serve to generate the Sierpinski triangle at any resolution! So the basic idea is that we try to find an IFS that will generate a given image and store the IFS coefficients in place of the original. When the original is required all we have to do is iterate the IFS on an arbitrary starting image of the desired resolution. The problem is how to find an IFS that will generate a given image? The answer to this question is given by the collage theorem, although it isn't immediately obvious that this theorem helps at all! The collage theorem is at first difficult to follow. It says that if you have an image T and an IFS with contractivity s such that:

then if A is the attractor of the IFS then

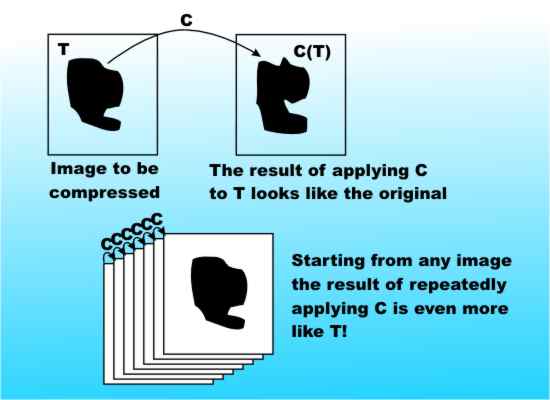

This is a bit confusing. The first line says that if the distance between C(T) and T is small then the second line tells you that the distance between T and A is even smaller. In other words, if you can find an IFS which when applied once to T gives an image that looks like T then iterating the IFS on any starting image gives a result that is even more like T. This is an amazing result and it is the key to finding an IFS that can be used to compress an image.

That is the collage theorem gives us a way to find an IFS that has an attractor that is close to the image we want to compress. Find an IFS that that in one step applied to the image we want to compress gives a close approximation to the image then the attractor of the IFS is even closer to the target image. You might at this point ask why not use use the identity transformation i.e. leave T alone? Surely this would work? The point is that we need a contractive map and the identity isn't a contractive map. Also the more contractive the map is the faster the iteration converges to its attractor. There isn't a lot of value in having a contractive map that reduces an image to a few bytes but takes thousands of millions of iterations to converge to something even slightly close to the original image. This is almost a practical approach to fractal image compression but we are still not quite there.

<ASIN:1846286859> <ASIN:0486432785> |

||||||

| Last Updated ( Monday, 27 May 2024 ) |