Virtual Memory

Written by Harry Fairhead

Sunday, 20 November 2022

Page 2 of 2

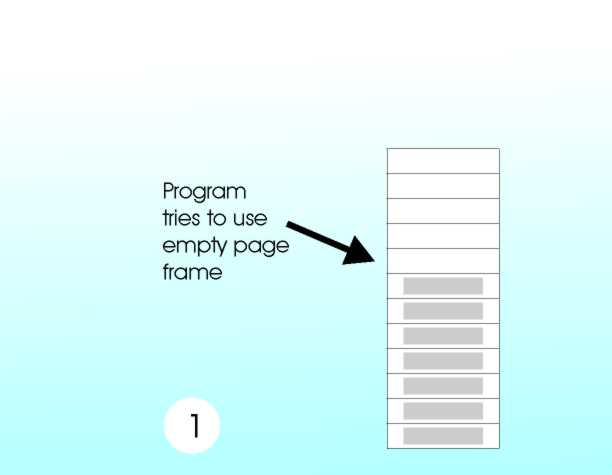

So what has all this moving around of physical memory got to do with virtual memory? When you first start running an application you might be lucky enough to have plenty of unused RAM. Every time the application tries to use a new logical page the operating system maps a physical page into the space - the space is usually called a page frame. But sooner or later comes the terrible moment when there is no more physical RAM to be mapped into an additional page frame.

Step one in a page fault

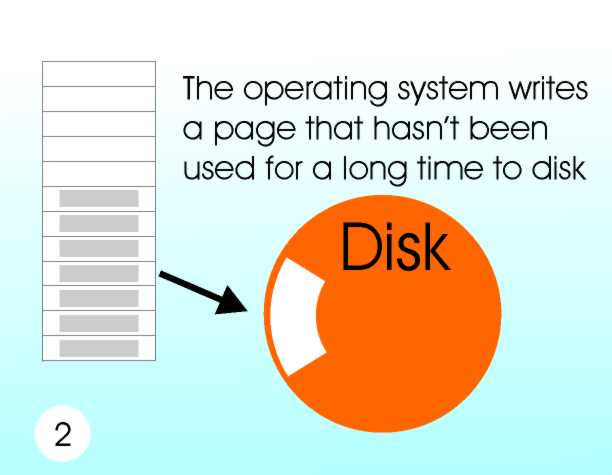

What happens next is the core of the mechanism of virtual memory implementation. The operating system has a look through the page tables to see if there is a page that hasn’t been used for a while – milliseconds in processor terms! It selects a suitable page and writes the current contents of the physical memory mapped there out to disk.

Step two in a page fault

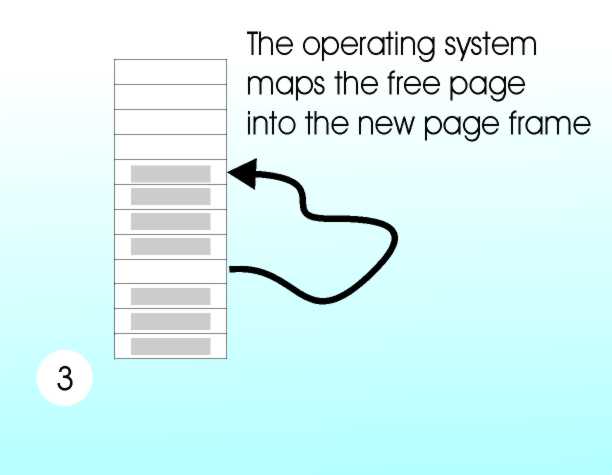

It then maps the now free physical page of memory to the new page frame. It looks as if an additional 4Kbytes of memory has just been created – but it’s all a matter of moving physical memory around.

Step three in a page fault
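The three steps above can be sketched as a toy simulation. This is only an illustration under stated assumptions, not how any real operating system is coded: the `Pager` class, its method names and the use of a Python dictionary to stand in for the disk are all invented for the example, and "hasn't been used for a while" is approximated by evicting the least recently used page. It also reads a page back in if it was written out earlier.

```python
from collections import OrderedDict

PAGE_SIZE = 4096  # bytes per page, matching the 4 KB pages in the text


class Pager:
    """Toy demand pager (hypothetical): a small pool of physical pages
    shared between however many logical pages the program touches."""

    def __init__(self, num_physical_pages):
        self.free = list(range(num_physical_pages))  # unused physical pages
        self.ram = {p: bytearray(PAGE_SIZE) for p in self.free}
        self.table = OrderedDict()  # logical page -> physical page, oldest use first
        self.disk = {}              # logical page -> contents held in the "page file"
        self.faults = 0

    def _physical_page_for(self, logical):
        if logical in self.table:
            self.table.move_to_end(logical)  # note that it has just been used
            return self.table[logical]
        self.faults += 1
        if self.free:
            phys = self.free.pop()  # still some unused RAM to hand out
        else:
            # Steps one and two: pick the page unused for longest, save it to disk.
            victim, phys = self.table.popitem(last=False)
            self.disk[victim] = bytes(self.ram[phys])
        # Step three: remap the freed physical page. If the wanted page was
        # swapped out earlier its contents are read back, else it starts zeroed.
        self.ram[phys][:] = self.disk.pop(logical, bytes(PAGE_SIZE))
        self.table[logical] = phys
        return phys

    def write(self, address, value):
        logical, offset = divmod(address, PAGE_SIZE)
        self.ram[self._physical_page_for(logical)][offset] = value

    def read(self, address):
        logical, offset = divmod(address, PAGE_SIZE)
        return self.ram[self._physical_page_for(logical)][offset]


pager = Pager(num_physical_pages=2)
pager.write(0 * PAGE_SIZE, 11)     # page 0 takes a free physical page
pager.write(1 * PAGE_SIZE, 22)     # page 1 takes the last free one
pager.write(2 * PAGE_SIZE, 33)     # no RAM left: page 0 is written out to disk
print(pager.read(0 * PAGE_SIZE))   # prints 11: page 0 comes back, page 1 goes out
print(pager.faults)                # prints 4
```

With only two physical pages the program happily uses three logical ones, and the value stored in page 0 survives its trip to disk and back.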

If the program discovers it wants to use a logical page that has been swapped out to disk then the whole process occurs again. Once more a page is selected and written out to disk, but this time the RAM that is freed up is remapped to the required page frame and the contents of the original page are read back in. The application has the page back and it didn’t even know it was gone! This is page swapping.

Page faults and working sets

When an application tries to use a page that has been swapped out to disk this is called a “page fault” and it slows things down. You might think that it would slow things down to a point where your expensive machine would work at the speed of the disk drive. However, it's an observable statistical fact that programs don't jump about all over the place at random. A program tends to use pages in clusters. If a page has just been used then it is likely that it will be used again soon. If a page hasn’t been used for a while then the chances are it will not be needed for a while longer.

At any given time a program has a “working set” of pages that it needs, and as long as you have enough physical memory to hold this number of pages the slowdown isn’t significant because page hits are much more frequent than page misses. So the rule is: always have enough memory to store the total working set of any programs in use. An easy principle in theory but very tough to meet in practice.

Notice that this is starting to sound a bit like the original idea of overlay programming. The working set represents the part of the program that is currently active and this is the part that would have been manually loaded by an overlay specification. You can think of paging as an automatic approach to finding how to divide a program into overlays.

Practical page faults

The implementation details of virtual memory in any modern operating system are more or less the same. An area of the disk is set aside to act as the area where pages are stored – the page or swap file.
This usually has to be in an area of the disk that is fast to get at. You should choose a page file size that is right for the hardware configuration and the mix of programs you intend to run, but most operating systems do this automatically for you. As already discussed, the page file should be just the size of the working set, no bigger and no smaller - of course this is easier said than done.

There are some optimizations applied in practice that can sometimes be counterproductive. You might have noticed that your machine sometimes starts to use the hard disk if you leave it alone for a while. It is simply using a policy of “aggressive paging”. This means it writes pages that haven’t been used for a while out to disk but leaves them in place in RAM. Then, if they do have to be swapped out, they have already been written to disk, which speeds things up.

Although what has been described is the general outline of virtual memory, be warned that there are a great many hardware features that have been introduced to make it all work faster. For example, the Pentium uses not one page table but a set of them, and they too can be paged out to disk. It also uses a special page table cache called a “Translation Lookaside Buffer” or TLB. When you actually look at the design of the Pentium it can look very complicated but it is doing exactly the job described above.

The idea of virtual memory is simple, but the practical details can make it seem more complicated.

Related Articles

Computer Memory and Pigeonholes


Last Updated ( Sunday, 20 November 2022 )