| Open Source Kinect Fusion - Instant Interactive 3D Models |

| Written by Harry Fairhead |

| Sunday, 15 January 2012 |

|

Kinect is an amazing piece of hardware, but without software it is nothing. KinectFusion is the most exciting Kinect application yet. Now, with an implementation released as part of the Point Cloud Library, an open source project, we can all make use of this realtime 3D model builder. Here's a video that explains how it all works.

Back in August 2011, at SIGGRAPH, Microsoft demonstrated KinectFusion, which uses the Kinect as a hand-held 3D scanner. As the Kinect was moved around the room it seemed to "paint" a 3D model, in the manner of a flashlight illuminating different parts of the scene. It was clear from the demonstration and the videos that it was fast, and that repeated scanning improved the accuracy and removed noise points.

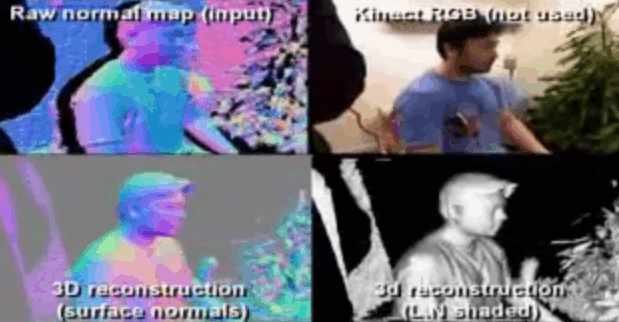

The 3D model that was created could be used in all sorts of creative ways, including virtual reality and augmented reality. With a 3D model it is possible to simulate how other objects and fluids would move within the scene and then overlay this on the video. So you could throw a virtual bucket of paint onto the scene and it would flow as determined by the 3D model.

All in all this is remarkable stuff and it is cheap, needing only a Kinect and a GPU to do the computation. The only problem is that Microsoft hasn't released the code, and may never do so.

The good news is that there is now an open source implementation of KinectFusion. The Point Cloud Library (PCL) is an open source project for 3D processing in general. The PCL framework has routines that implement filtering, feature estimation, surface reconstruction, registration, model fitting and segmentation. It also has higher level operations such as mapping and object recognition. Now it also has an implementation of KinectFusion, based on the scientific paper that describes the algorithm in some detail. It is still being developed, but you can see that it works in the video (the top right-hand panel is the 3D model):

Notice how objects seem to "melt" and "freeze" into the model as they are added and removed. This is all part of the averaging process that removes noise: each new depth frame only nudges the stored model, so a change in the scene takes a number of frames to show up fully. If you want to know how it works in detail then you can read the paper by Microsoft Research. To help you get started, we have a video that uses extracts from existing videos to explain how the Fusion algorithm works.
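The "melt" and "freeze" effect can be illustrated with a toy model of the averaging. This is only a minimal sketch, assuming the model stores, for each small cell of space, a running weighted average of distance-to-surface measurements with a cap on the accumulated weight, which is how the Microsoft Research paper describes the fusion step. The function names, the weight cap and the sample values are all hypothetical, and none of this is taken from the PCL code.

```python
def fuse(value, weight, measurement, meas_weight=1.0, max_weight=64.0):
    """Fold one new distance measurement into a cell's running weighted average.

    value, weight : the average stored so far and its accumulated weight
    measurement   : the new (truncated) distance seen in the current frame
    max_weight    : hypothetical cap, so that old data can eventually be replaced
    """
    new_value = (weight * value + meas_weight * measurement) / (weight + meas_weight)
    new_weight = min(weight + meas_weight, max_weight)
    return new_value, new_weight


def simulate(measurements, max_weight=64.0):
    """Feed a sequence of per-frame measurements into one cell, returning its history."""
    value, weight = 0.0, 0.0  # an empty cell: nothing observed yet
    history = []
    for m in measurements:
        value, weight = fuse(value, weight, m, max_weight=max_weight)
        history.append(value)
    return history


if __name__ == "__main__":
    # Illustrative data: 20 frames of empty space (distance 1.0), then an object
    # is placed so that its surface passes through the cell (distance 0.0).
    frames = [1.0] * 20 + [0.0] * 20
    for i, v in enumerate(simulate(frames, max_weight=8.0)):
        print(i, round(v, 3))
```

Run as a script, this prints a stored value that stays at 1.0 while the space is empty and then drifts towards 0.0 over many frames rather than jumping there, which is the object "freezing" into the model. The same averaging is what smooths out noisy readings, and a smaller weight cap makes the model respond to changes more quickly at the cost of less smoothing.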

If you are looking for an exciting area to get involved in then this is it. The ability to build a 3D model in realtime, and at a realistic cost, is a step change in technology, and it will make it possible to do things that only a few months ago were unthinkable. You can now scan an entire house interior and let a robot use the model to navigate while it collects data to refine the model. As they say, "this is limited only by your imagination".

More Information

KinectFusion: Real-Time Dense Surface Mapping and Tracking (pdf)

Further reading: KinectFusion - instant 3D models; KinectFusion - realtime 3D models; Getting started with Microsoft Kinect SDK; Kinect goes self aware (a warning: we think it's funny, and something every Kinect programmer should view before proceeding to create something they regret!); Avatar Kinect - a holodeck replacement; Kinect augmented reality x-ray (video)


|

| Last Updated ( Sunday, 14 October 2012 ) |