KinectFusion - realtime 3D models

Written by Harry Fairhead

Friday, 12 August 2011

We have a must-see video to show you. But it is also how it is done, rather than just what it does, that is so fascinating. Watch as a Kinect scans a room and builds a 3D model. Then watch as the GPU provides the power to add virtual reality goo...

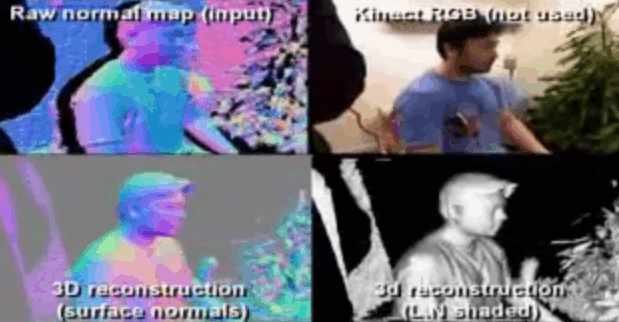

As they say in adverts to get you to go and see a movie - if there is one movie to see this summer then go and see X. In our case X is a video from Microsoft Research. If you watch it you will probably think that there are some amazing things going on, but once you realize exactly what is going on you can't help but be even more amazed.

Yes, this is another Kinect-doing-amazing-things video, but it is also the result of a convergence of GPU computing power and some clever algorithms implemented by Microsoft Research and presented at this year's SIGGRAPH. The exact details of how it all works haven't been released yet as a paper so we will have to wait for more information.

What is going on in the video is that a 3D model is being built in real time from the Kinect depth stream. A whole room can be scanned in a few seconds and new sections are added to the model as the Kinect is pointed at new areas of the room. At the start of the video you can see the view cone of the Kinect being projected on the video and this is used to deduce the 3D structure of the scene. Slowly but surely a complete 3D model is built up. Notice that this isn't trivial: each scan is measured relative to the camera, so to unify the scans you have to know where the camera is pointing. As you watch later on, notice how objects can be moved, added or removed and the model is adjusted to keep the representation accurate.

The model that is built up is described as volumetric rather than a wireframe model. This is also said to have advantages because it contains predictions of the geometry. Exactly what this means is difficult to determine without the paper describing the method in detail. From the video you can see that the algorithm estimates the surface normals and these are used as part of the familiar rendering algorithms.
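Until the paper appears we can only guess at the method, but the two basic ingredients mentioned above, turning depth pixels into 3D points and estimating surface normals, can be sketched in a few lines. What follows is a minimal illustration and not Microsoft Research's algorithm. It assumes a simple pinhole camera, and the intrinsics `FX`, `FY`, `CX`, `CY` and the function names are made up for the example rather than taken from any Kinect SDK.

```python
import math

# Hypothetical pinhole intrinsics in pixels (assumed for illustration,
# not the Kinect's calibrated values).
FX, FY, CX, CY = 525.0, 525.0, 1.5, 1.5


def back_project(u, v, depth):
    """Map pixel (u, v) with a depth in metres to a 3D point in camera space."""
    x = (u - CX) * depth / FX
    y = (v - CY) * depth / FY
    return (x, y, depth)


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a):
    length = math.sqrt(sum(c * c for c in a))
    return tuple(c / length for c in a)


def estimate_normal(depth_map, u, v):
    """Estimate the surface normal at pixel (u, v) from its right and lower
    neighbours. depth_map is a list of rows, indexed as depth_map[v][u]."""
    p = back_project(u, v, depth_map[v][u])
    right = back_project(u + 1, v, depth_map[v][u + 1])
    down = back_project(u, v + 1, depth_map[v + 1][u])
    # The cross product of two vectors lying in the surface is perpendicular
    # to it; this argument order makes the normal face back towards the camera.
    return normalize(cross(sub(down, p), sub(right, p)))


# A flat wall two metres away, seen as a tiny 4x4 depth image.
flat_wall = [[2.0] * 4 for _ in range(4)]
normal = estimate_normal(flat_wall, 1, 1)
print(normal)
```

For the flat wall the estimated normal points straight back along the camera's viewing axis, which is what you would expect for a surface facing the Kinect. A real system would also have to cope with noise and missing depth readings, which this sketch ignores.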

What is clear from the video is that the model seems to work well enough to allow interaction between it and particle systems and textured rendering. The key to the speed of the process is to use the computational capabilities of the GPU.

Without the video you might think that a real-time model was a solution in search of a problem, but as you can see it already has lots of exciting applications. The demonstration of drawing on any surface wouldn't be possible without a model of where those surfaces are, and the same is true of the multi-touch interface, the augmented reality and the real-time physics in the particle systems.

Which one of these applications you find most impressive depends on what your interests are, but I was particularly intrigued by the ability to throw virtual goo over someone - why I'm not sure...

Watch the video and dream of what you could do with a real-time 3D model...
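To see why particle physics needs a model of the surfaces, consider the simplest possible collision: a particle bouncing off a locally flat patch of the scene. The sketch below is a guess at the general idea and not the method used in the video. It assumes the model can supply a point on the surface and its normal, and the names `reflect` and `step` are invented for the example.

```python
def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def reflect(velocity, normal, restitution=1.0):
    """Bounce a velocity off a surface with unit normal `normal`.
    restitution=1.0 is a perfect bounce, 0.0 removes the normal component."""
    factor = (1.0 + restitution) * dot(velocity, normal)
    return tuple(v - factor * n for v, n in zip(velocity, normal))


def step(position, velocity, surface_point, normal, dt):
    """Advance one particle by dt seconds. If the move would carry it behind
    the surface, keep the old position and bounce the velocity instead."""
    new_pos = tuple(p + v * dt for p, v in zip(position, velocity))
    offset = tuple(p - s for p, s in zip(new_pos, surface_point))
    if dot(offset, normal) < 0.0:
        return position, reflect(velocity, normal)
    return new_pos, velocity


# A particle falling towards a floor at y = 0 whose normal points up.
pos, vel = (0.0, 0.05, 0.0), (0.0, -1.0, 0.0)
pos, vel = step(pos, vel, (0.0, 0.0, 0.0), (0.0, 1.0, 0.0), dt=0.1)
print(pos, vel)
```

Without the surface point and normal there is nothing to test the particle against. The demo presumably runs this kind of test for thousands of particles at once, which is the sort of work that suits a GPU.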

Further reading:

Getting started with Microsoft Kinect SDK

Kinect goes self aware - a warning: we think it's funny and something every Kinect programmer should view before proceeding to create something they regret!

Avatar Kinect - a holodeck replacement

Kinect augmented reality x-ray (video)

Last Updated ( Friday, 12 August 2011 )