
The asyncio module may be the hottest topic in town, but it isn't the only asynchronous feature in Python worth knowing about. Find out about process-based parallelism in this extract from my new book Programmer's Python: Async. It has the advantage of no GIL problems.


Most books on asynchronous programming start by looking at threads, as these are generally regarded as the building blocks of execution. However, for Python there are advantages in starting with processes, which come with a default single thread of execution. The reasons are both general and specific. Processes are isolated from one another and this makes locking less of an issue.

There is also the fact that Python restricts the way threads are run by way of the Global Interpreter Lock, or GIL, which allows only one thread to run the Python interpreter at any one time. This means that, without a lot of effort, multiple threads cannot make use of multiple cores and so do not provide a way of speeding things up. There is much more on threads and the GIL in the next chapter, but for the moment all you need to know is that processes can speed up your program by using multiple cores. Using Python threads you cannot get true parallelism, but using processes you can.

What this means is that if you have an I/O-bound task then using threads will speed things up, as the processor can get on with another thread while the I/O-bound thread is stalled. If you have CPU-bound tasks then nothing but process parallelism will help.
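As a rough illustration of the CPU-bound case, the following sketch runs the same piece of work twice, first one call after the other and then in two child processes. The names count_primes and report and the workload size are made up for the example, and the timings you see will depend entirely on your machine and how many cores it has free:

```python
import multiprocessing
import time


def count_primes(limit):
    """CPU-bound work: count the primes below limit by trial division."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def report(limit):
    # Used as the process target; the result is simply printed.
    print(f"primes below {limit}: {count_primes(limit)}")


if __name__ == '__main__':
    limit = 100_000  # hypothetical workload size

    start = time.perf_counter()
    report(limit)
    report(limit)
    print(f"one after the other: {time.perf_counter() - start:.2f}s")

    start = time.perf_counter()
    workers = [multiprocessing.Process(target=report, args=(limit,))
               for _ in range(2)]
    for w in workers:
        w.start()
    for w in workers:
        w.join()  # wait for each child to finish
    print(f"two processes: {time.perf_counter() - start:.2f}s")
```

On a machine with at least two free cores the second timing should usually come out lower than the first, but starting a process has a cost of its own, so for small workloads the gain can disappear.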

The Process Class

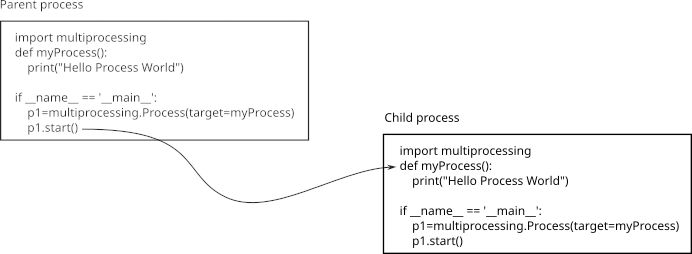

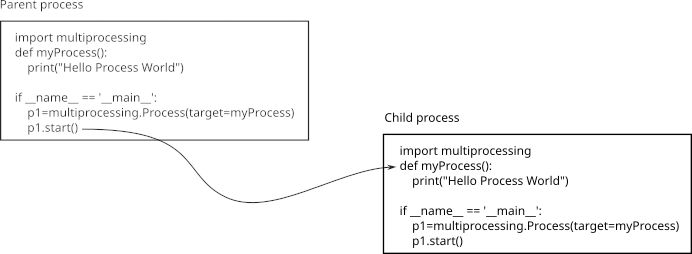

The key idea in working with processes is that your initial Python program starts out in its own process and you can use the Process class from the multiprocessing module to create sub-processes. A child process, in Python terminology, is created and controlled by its parent process. Let’s look at the simplest possible example:

    import multiprocessing

    # The function the child process will run
    def myProcess():
        print("Hello Process World")

    # Setup code: runs only in the parent process
    if __name__ == '__main__':
        p1=multiprocessing.Process(target=myProcess)
        p1.start()

In this case all we do is create a Process object with its target set to the myProcess function object. Calling the start method creates the new process and starts the code running by calling myProcess. You simply see Hello Process World displayed.

There seems to be little that is new here, but a lot is going on that isn’t obvious. When you call the start method a whole new process is created, complete with a new copy of the Python interpreter and the Python program. Exactly how the Python program is provided to the new process depends on the system you are running it on and this is a subtle point that is discussed later.

For the moment you need to follow the three simple rules:

- Always use if __name__ == '__main__': to ensure that the setup code doesn’t get run in the child process.

- Do not define or modify any global resources in the setup code as these will not always be available in the child process.

- Prefer to use parameters to pass initial data to the child process rather than global constants.

The reasons for these rules are explained in detail later.
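A minimal sketch of the three rules in action might look like the following. The names GREETING, describe and worker are invented for the illustration, and join is used only to make the parent wait for the child:

```python
import multiprocessing

GREETING = "default"  # module-level value, defined outside the guard


def describe(greeting):
    # Build the message from the parameter and whatever the global holds here.
    return f"parameter: {greeting!r}, global: {GREETING!r}"


def worker(greeting):
    print(describe(greeting))


if __name__ == '__main__':
    # Setup code: this runs only in the parent process.
    GREETING = "changed in setup"  # breaks rule 2, to show why it matters
    p = multiprocessing.Process(target=worker, args=("passed as argument",))
    p.start()
    p.join()
```

The parameter always arrives in the child. What the child sees in GREETING depends on how the platform starts processes: with the fork start method it sees the value changed in the setup code, while with spawn or forkserver it sees the module-level default. This is why passing data as parameters is the safer habit.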

In general to create and run a new process you have to create a Process object using:

    class multiprocessing.Process(group=None, target=None, name=None,
                                  args=(), kwargs={}, *, daemon=None)

You can ignore the group parameter, as it is included only to make the call match the one that creates threads in the next chapter. The target is the callable you want the new process to run and name is the name given to the new process. If you don’t supply a name then a unique name is constructed for you. The most important parameters are args and kwargs, which specify the positional and keyword arguments to pass to the callable.

For example:

    p1=multiprocessing.Process(target=myProcess, args=(42,43),
                               kwargs={"myParam1":44})

will call the target as:

    myProcess(42,43,myParam1=44)
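To try this out you need a target that accepts these arguments. The following is one possible complete program. The body of myProcess and the helper format_call are assumptions made for the sake of the example:

```python
import multiprocessing


def format_call(a, b, myParam1=None):
    # Hypothetical helper: show which values arrived.
    return f"a={a} b={b} myParam1={myParam1}"


def myProcess(a, b, myParam1=None):
    # a and b come from args, myParam1 comes from kwargs.
    print(format_call(a, b, myParam1=myParam1))


if __name__ == '__main__':
    p1 = multiprocessing.Process(target=myProcess,
                                 args=(42, 43),
                                 kwargs={"myParam1": 44})
    p1.start()
    p1.join()  # wait for the child to finish
```

Note that args must be a tuple, so a single positional argument has to be written with a trailing comma, as in args=(42,).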

The Process object has a number of useful methods and attributes in addition to the start method. The simplest of these are name and pid.

The name attribute can be used to find the assigned name of the child process. It has no larger meaning in the sense that the operating system knows nothing about it. The pid attribute, on the other hand, is the process identity number which is assigned by the operating system and it is the pid that you use to deal with child processes via operating system commands such as kill.
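A short sketch shows both attributes at work. The name "worker-1" and the function show_identity are made up for the example:

```python
import multiprocessing
import os


def show_identity():
    me = multiprocessing.current_process()
    # os.getpid() here reports the same number the parent sees as p.pid.
    print(f"child: name={me.name} pid={os.getpid()}")


if __name__ == '__main__':
    p = multiprocessing.Process(target=show_identity, name="worker-1")
    # No operating system process exists yet, so pid is None.
    print(f"before start: name={p.name} pid={p.pid}")
    p.start()
    print(f"after start: name={p.name} pid={p.pid}")
    p.join()
```

The name is available as soon as the Process object exists, but the pid is only assigned once start has been called and the operating system has created the process.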

Both the name and pid attributes help identify a process, but for security purposes you need to use the authkey attribute. This is set to a random byte string when the multiprocessing module is loaded and it is intended to act as a secure identifier for child processes. Each Process object is given the authkey value of the parent process and this can be used to prove that the process is indeed a child of the parent. There is more about this later.
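You can check that a Process object carries its parent's key without even starting it. In this sketch noop is a placeholder target, and the key itself is deliberately not printed as it is meant to be kept secret:

```python
import multiprocessing


def noop():
    # Placeholder target; the process is never started in this sketch.
    pass


if __name__ == '__main__':
    parent_key = multiprocessing.current_process().authkey
    child = multiprocessing.Process(target=noop)
    # The key is a bytes value, so report only its type and length.
    print(type(parent_key).__name__, len(parent_key))
    print("child carries the parent's key:", child.authkey == parent_key)
```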

The multiprocessing module also contains some functions that can be used to find out about, and control, the environment in which the child processes are running.

- multiprocessing.cpu_count() gives the number of CPUs, i.e. cores, in the system. This is the theoretical maximum number of processes that can run in parallel. In practice the number available to your program is often lower.

- multiprocessing.set_executable(executable) sets the location of the Python interpreter to use for child processes.
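The following sketch reports the CPU count and, where the platform allows it, the number of CPUs the current process is actually permitted to use. The function usable_cpus is invented for the example and os.sched_getaffinity is only available on some Unix systems, hence the check. The standard library function for choosing the interpreter is multiprocessing.set_executable, which takes the path as its argument:

```python
import multiprocessing
import os
import sys


def usable_cpus():
    """Best-effort count of the CPUs this process may actually use."""
    if hasattr(os, "sched_getaffinity"):  # not available on every platform
        return len(os.sched_getaffinity(0))
    return multiprocessing.cpu_count()  # fall back to the system total


if __name__ == '__main__':
    print("CPUs in the system:", multiprocessing.cpu_count())
    print("CPUs usable by this process:", usable_cpus())
    # Passing sys.executable simply restates the default interpreter.
    multiprocessing.set_executable(sys.executable)
```

The gap between the two numbers, if there is one, is usually the result of the process being restricted to a subset of the cores, for example when running in a container.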

A Python process isn’t a basic native process. It has a Python interpreter loaded along with the Python code defined in the parent process and it is ready to run the function that has been passed as the target.
