Creating Web Apps - The Touch API

Written by Mike James

Monday, 21 January 2013

Touch interfaces are spreading and web pages can no longer ignore touch, even on the desktop. The HTML5 touch API is fairly well supported, so let's find out how it works. This article is one in a series on creating web apps.

To make your web app behave like a native app, one of the first requirements is that it makes use of touch interfaces where available. The low-level Touch API is well supported by both Chrome and Firefox and is one of the least troublesome of the APIs to work with. It is very easy to use, especially if you have worked with the standard mouse events.

The only real complication is that the interface supports multitouch. A mouse can only be in one place at a time, but a touch screen can be touched in several places at once. This makes things more complicated because touch events have to work with a list of touch locations rather than a single position.

There are three basic touch events:

- touchstart: fired when a point of contact is placed on the touch surface
- touchmove: fired when a point of contact moves along the touch surface
- touchend: fired when a point of contact is removed from the touch surface
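As a rough illustration of the three basic events and the list of touch locations, the sketch below attaches one handler to all three and reports how many points of contact each event carries. The helper name `describeTouchEvent` and the use of `document.body` as the target are assumptions for illustration (the script is assumed to run after the body exists); the DOM wiring only runs when a `document` is present.

```javascript
// Summarize a touch event: its type, how many points of contact are
// currently on the surface, and where they are (event.touches is list-like).
// describeTouchEvent is a hypothetical helper name.
function describeTouchEvent(event) {
  const points = [];
  for (let i = 0; i < event.touches.length; i++) {
    const t = event.touches[i];
    points.push(`(${t.pageX}, ${t.pageY})`);
  }
  return `${event.type}: ${event.touches.length} point(s) ${points.join(" ")}`.trim();
}

// Attach the same logger to the three basic events, browser only.
if (typeof document !== "undefined") {
  for (const type of ["touchstart", "touchmove", "touchend"]) {
    document.body.addEventListener(type, (event) => {
      console.log(describeTouchEvent(event));
    });
  }
}
```

Note that on a touchend for the last finger the `touches` list is empty, so the summary reports zero points.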

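Notice that the three basic events are linked in the sense that you can't get a touchmove until a touchstart has occurred for that particular touch point. In general the events occur in the order touchstart -> touchmove -> touchend. You also have to take account of the case where there are multiple touch points. Here a touchend doesn't necessarily stop the touchmove events, because other touch points may still be active.

There are two element-oriented events:

- touchenter: fired when a touch point moves onto the interactive area of an element
- touchleave: fired when a touch point moves off the interactive area of an element

These two appeared in the draft W3C Touch Events specification but were dropped from the final version, so don't rely on them being available.

There is also one general-purpose cancel event:

- touchcancel: fired when a touch sequence is interrupted in a device- or implementation-specific way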
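Because a touchend for one finger does not mean every finger has lifted, it can help to track the active points of contact by their `identifier`. The sketch below relies on the standard `changedTouches` property, which lists only the points of contact that changed in this event: the new ones for touchstart, the moved ones for touchmove, and the removed ones for touchend and touchcancel. The `ActiveTouches` class is a hypothetical helper, not part of the API.

```javascript
// Hypothetical helper: a Map of active touch points keyed by identifier.
class ActiveTouches {
  constructor() {
    this.points = new Map();
  }

  // Call from touchstart, touchmove, touchend and touchcancel handlers.
  // Returns the number of points of contact still on the surface.
  update(event) {
    for (let i = 0; i < event.changedTouches.length; i++) {
      const t = event.changedTouches[i];
      if (event.type === "touchend" || event.type === "touchcancel") {
        this.points.delete(t.identifier); // this finger has gone
      } else {
        this.points.set(t.identifier, { x: t.pageX, y: t.pageY }); // new or moved
      }
    }
    return this.points.size;
  }
}

if (typeof document !== "undefined") {
  const active = new ActiveTouches();
  for (const type of ["touchstart", "touchmove", "touchend", "touchcancel"]) {
    document.addEventListener(type, (event) => {
      console.log(`${type}: ${active.update(event)} active point(s)`);
    });
  }
}
```

A touchmove-driven operation can then keep going for as long as the count stays above zero, and a touchcancel simply removes the interrupted points.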

The touchcancel event is difficult to characterize because there are lots of device-dependent things that can abort a touch event sequence. For example, a modal dialog box can stop the user touching the app's UI, and so can moving off the document to work with the browser's UI. You can easily write a general touchcancel event handler that simply stops the current operation in the most appropriate way.

We will mostly ignore the touchenter, touchleave and touchcancel events because they are fairly easy to use once you have seen how the three main events operate. Now it's time to take a look at each of the events, starting with touchstart.

Touchstart

Touchstart is a good event to examine first because it has all of the characteristics of the other touch events. It is fired when the user touches the screen with one or more fingers. Notice that another touchstart event can occur if the user adds a point of contact to the screen. In this case the new touchstart lists all of the current points of contact, not just the new one.

The information about the event is, as always, stored in an event object. The TouchEvent object has a number of useful properties, but the most useful is the touches property. This returns a list of Touch objects, each of which holds the details of one point of contact on the screen. The most important of these properties give the position of the point of contact:

- screenX, screenY: the position relative to the screen
- clientX, clientY: the position relative to the browser's viewport, ignoring scrolling
- pageX, pageY: the position relative to the page, including any scrolling
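To see the coordinate pairs side by side, the following sketch copies them out of every Touch object in `event.touches` into plain objects. The helper name `touchPositions` is an assumption for illustration; the properties it reads (`identifier`, `screenX`, `clientX`, `pageX` and their Y partners) are standard Touch properties.

```javascript
// Copy the position properties of every current point of contact
// into plain objects (touchPositions is a hypothetical helper name).
function touchPositions(event) {
  return Array.from(event.touches, (t) => ({
    id: t.identifier,
    screen: [t.screenX, t.screenY],
    client: [t.clientX, t.clientY], // relative to the viewport
    page: [t.pageX, t.pageY],       // relative to the page, includes scrolling
  }));
}

if (typeof document !== "undefined") {
  document.addEventListener("touchstart", (event) => {
    console.table(touchPositions(event));
  });
}
```

If the page has not been scrolled, the page and client values agree; once it scrolls, they differ by the scroll offset.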

These are all we need to write a simple example that shows how everything fits together. Let's write a small program that plots a circle at each point the user touches; to keep it simple, we will only plot one point of contact. First we need a canvas element:
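The canvas could simply be written into the HTML. A minimal stand-in that creates it from script instead might look like this; the id `touchCanvas`, the 400 by 400 default size and the helper name `createTouchCanvas` are hypothetical choices, not taken from the original listing.

```javascript
// Create a canvas and add it to the page. The id and size are
// illustrative assumptions.
function createTouchCanvas(doc, width = 400, height = 400) {
  const canvas = doc.createElement("canvas");
  canvas.id = "touchCanvas";
  canvas.width = width;   // drawing-surface size in canvas pixels
  canvas.height = height;
  doc.body.appendChild(canvas);
  return canvas;
}

if (typeof document !== "undefined") {
  createTouchCanvas(document);
}
```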

A subroutine to draw a colored circle on the canvas also makes things easier:
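A minimal version of such a subroutine, assuming it is passed the canvas's 2D context; the name `drawCircle`, its parameter order and the default radius of 20 are illustrative assumptions.

```javascript
// Draw a filled circle of the given color centered at (x, y).
function drawCircle(ctx, x, y, color = "red", radius = 20) {
  ctx.beginPath();
  ctx.arc(x, y, radius, 0, 2 * Math.PI); // full circle
  ctx.fillStyle = color;
  ctx.fill();
}
```

For example, `drawCircle(canvas.getContext("2d"), 100, 100)` would fill a red circle of radius 20 centered at (100, 100).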

The real work of the program is done by the event handler:

This attaches handleStart to the canvas element. All the event handler has to do is draw a circle at the location of the point of contact:
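A sketch of a handler along these lines, assuming a `drawCircle(ctx, x, y, color)` helper (repeated so the block is self-contained) and a canvas at the top left of the page. The factory `makeHandleStart`, which closes over the drawing context, and the lookup with `querySelector("canvas")` are assumptions. Calling `preventDefault()` stops the browser from also scrolling or generating emulated mouse events for the touch, and the explicit `{ passive: false }` option makes sure that call is honored.

```javascript
// Filled circle helper.
function drawCircle(ctx, x, y, color = "red", radius = 20) {
  ctx.beginPath();
  ctx.arc(x, y, radius, 0, 2 * Math.PI);
  ctx.fillStyle = color;
  ctx.fill();
}

// Build a touchstart handler bound to a 2D drawing context.
function makeHandleStart(ctx) {
  return function handleStart(event) {
    event.preventDefault();         // no scrolling or emulated mouse events
    const touch = event.touches[0]; // only the first point of contact
    drawCircle(ctx, touch.pageX, touch.pageY, "red");
  };
}

if (typeof document !== "undefined") {
  const canvas = document.querySelector("canvas"); // assumes one canvas on the page
  if (canvas) {
    canvas.addEventListener("touchstart", makeHandleStart(canvas.getContext("2d")), { passive: false });
  }
}
```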

Notice that we draw the circle centered at pageX and pageY, which is fine as long as the canvas is at the top left of the page. If it isn't, we need to subtract the canvas's offset from the page coordinates. Also notice that we only retrieve the first point of contact, with touches[0].
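One way to handle a canvas that is not at the top left of the page is to measure it with `getBoundingClientRect()`, which reports its position relative to the viewport, and work from `clientX` and `clientY`, which use the same origin. The sketch also rescales in case the canvas's CSS size differs from its `width` and `height` attributes; the helper name `touchToCanvas` is an assumption.

```javascript
// Convert a Touch's viewport coordinates into canvas drawing coordinates.
function touchToCanvas(touch, canvas) {
  const rect = canvas.getBoundingClientRect(); // viewport-relative, like clientX/Y
  return {
    x: (touch.clientX - rect.left) * (canvas.width / rect.width),
    y: (touch.clientY - rect.top) * (canvas.height / rect.height),
  };
}
```

Inside the handler, `const p = touchToCanvas(event.touches[0], canvas);` followed by `drawCircle(ctx, p.x, p.y)` would then place the circle correctly wherever the canvas sits.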

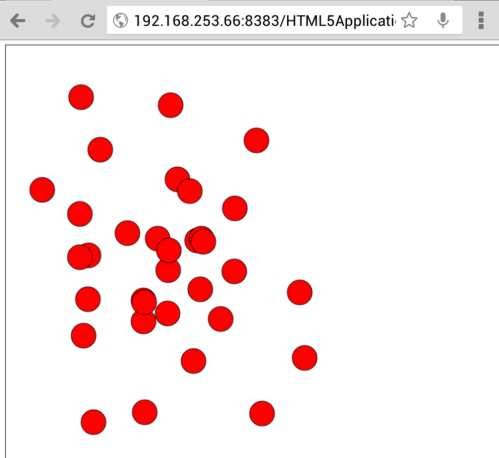

If you put all this together and run it, you will find that a red circle appears wherever you touch the screen. If you touch with two or more fingers, you will only see a circle at the location of one of the points of contact.
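If you want a circle for every finger, a small variation is to loop over the whole `touches` list instead of reading only `touches[0]`. This sketch goes slightly beyond the single-point example; `makeHandleStartAll` is a hypothetical name, and the coordinates still assume a canvas at the top left of the page.

```javascript
// Filled circle helper.
function drawCircle(ctx, x, y, color = "red", radius = 20) {
  ctx.beginPath();
  ctx.arc(x, y, radius, 0, 2 * Math.PI);
  ctx.fillStyle = color;
  ctx.fill();
}

// touchstart handler that marks every current point of contact.
function makeHandleStartAll(ctx) {
  return function handleStartAll(event) {
    event.preventDefault();
    for (let i = 0; i < event.touches.length; i++) {
      const t = event.touches[i];
      drawCircle(ctx, t.pageX, t.pageY, "red");
    }
  };
}
```

It is attached in the same way as handleStart. Reading `event.changedTouches` instead of `event.touches` would draw only the fingers that have just landed.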


Last Updated (Monday, 21 January 2013)