Rule-Based Matching In Natural Language Processing

Written by Jannes Klaas

Monday, 20 May 2019


Add custom functions to matchers

Let's move on to a more complex case. We know that the iPhone is a product. However, the neural-network-based named entity recognizer often classifies it as an organization. This happens because the word "iPhone" is often used in contexts similar to those of organizations, as in "The iPhone offers..." or "The iPhone sold...". Let's build a rule-based matcher that always classifies the word "iPhone" as a product entity.

First, we have to get the hash of the word PRODUCT. Words in spaCy can be uniquely identified by their hash, and so can entity types. To set an entity of the product type, we have to be able to provide the hash for the entity name. We can get it from the language model's vocabulary by running:

```python
PRODUCT = nlp.vocab.strings['PRODUCT']
```

Next, we need to define an on_match rule. This function will be called every time the matcher finds a match. on_match rules receive four arguments:

- matcher: the matcher that found the match.
- doc: the document the matcher was run on.
- i: the index of the current match in matches.
- matches: the list of all matches, each a (match_id, start, end) tuple.

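There are two things happening in our on_match rule:

```python
def add_product_ent(matcher, doc, i, matches):
    # Unpack the match that triggered this call
    match_id, start, end = matches[i]
    # Append a new entity: (label hash, start token, end token)
    doc.ents += ((PRODUCT, start, end),)
```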

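Let's break them down:

1. match_id, start, end = matches[i] looks up the match at index i. Each match is a tuple of the match ID, the index of the first token, and the index just past the last token.
2. doc.ents += ((PRODUCT, start, end),) adds a new entity to the document's named entities. An entity here is a tuple of the hash of the entity type (the hash of the word PRODUCT), the start of the entity, and its end.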
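To make the callback protocol concrete, here is a minimal pure-Python sketch that imitates what the matcher does: it collects matches as (match_id, start, end) tuples and then calls on_match(matcher, doc, i, matches) once per match. ToyDoc, toy_hash, and run_matcher are hypothetical stand-ins, not spaCy classes; spaCy uses its own 64-bit hash function and its own Doc and Matcher types, so treat this only as a model of the control flow.

```python
import hashlib
from dataclasses import dataclass


def toy_hash(text: str) -> int:
    """Hypothetical stand-in for spaCy's string hashing: a stable 64-bit int."""
    return int.from_bytes(hashlib.sha1(text.encode("utf8")).digest()[:8], "little")


@dataclass
class ToyDoc:
    """Hypothetical document: token strings plus (label, start, end) entities."""
    words: list
    ents: tuple = ()


PRODUCT = toy_hash("PRODUCT")
MATCH_ID = toy_hash("iPhone")


def add_product_ent(matcher, doc, i, matches):
    # Same body as the article's callback: unpack match i, append an entity
    match_id, start, end = matches[i]
    doc.ents += ((PRODUCT, start, end),)


def run_matcher(doc, on_match):
    """Toy matcher: match every token equal to 'iphone', ignoring case."""
    matches = [(MATCH_ID, k, k + 1)
               for k, w in enumerate(doc.words) if w.lower() == "iphone"]
    # Call the callback once per match, passing the index of that match.
    # The toy has no matcher object to pass, so it passes None.
    for i in range(len(matches)):
        on_match(None, doc, i, matches)
    return matches


doc = ToyDoc("The iPhone sold better than the old IPHONE".split())
run_matcher(doc, add_product_ent)
print(doc.ents == ((PRODUCT, 1, 2), (PRODUCT, 7, 8)))  # True
```

Passing the whole matches list plus an index, rather than just the current match, lets a callback inspect neighbouring matches if it needs to.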

Now that we have an on_match rule, we can define our matcher. Matchers allow us to add multiple patterns, so we can add one pattern for just the word "iPhone" and another for the word "iPhone" followed by a version number, as in "iPhone 5":

```python
from spacy.matcher import Matcher

pattern1 = [{'LOWER': 'iphone'}]                     # "iphone" in any capitalization
pattern2 = [{'ORTH': 'iPhone'}, {'IS_DIGIT': True}]  # exactly "iPhone", then a number

matcher = Matcher(nlp.vocab)
# spaCy v2 signature: matcher.add(key, on_match, *patterns)
matcher.add('iPhone', add_product_ent, pattern1, pattern2)
```

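So, what makes these commands work?

1. pattern1 matches any token whose lowercase form is "iphone", so it catches "iPhone", "iphone", and "IPHONE". Because LOWER compares against the lowercased token text, the value in the pattern must itself be lowercase; a pattern like {'LOWER': 'iPhone'} would never match.
2. pattern2 matches the exact token "iPhone" (ORTH is case-sensitive) followed by a token made up of digits, such as "iPhone 5".
3. Matcher(nlp.vocab) creates a matcher that shares the vocabulary of our language model.
4. matcher.add registers both patterns under the key 'iPhone', with add_product_ent as the on_match callback. This is the spaCy v2 signature; spaCy v3 changed it to matcher.add('iPhone', [pattern1, pattern2], on_match=add_product_ent).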
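The difference between LOWER and ORTH is easy to get wrong. The sketch below is a simplified, pure-Python model of how a token pattern is checked, not spaCy's Matcher: each pattern is a list of attribute dictionaries, and a match is a run of consecutive tokens in which every token satisfies its dictionary. Only the three attributes used above are modeled, through a hypothetical ATTR_GETTERS table.

```python
# Hypothetical model of the three token attributes used in the patterns above
ATTR_GETTERS = {
    "ORTH": lambda tok: tok,                # exact, case-sensitive text
    "LOWER": lambda tok: tok.lower(),       # lowercased text
    "IS_DIGIT": lambda tok: tok.isdigit(),  # True if the token is all digits
}


def token_matches(tok, spec):
    """True if the token satisfies every attribute in one pattern element."""
    return all(ATTR_GETTERS[attr](tok) == value for attr, value in spec.items())


def find_pattern(words, pattern):
    """Return (start, end) for every position where the whole pattern matches."""
    n = len(pattern)
    return [
        (start, start + n)
        for start in range(len(words) - n + 1)
        if all(token_matches(words[start + j], pattern[j]) for j in range(n))
    ]


words = "Apple sold the iPhone 5 and the IPHONE".split()
print(find_pattern(words, [{"LOWER": "iPhone"}]))  # [] (never matches)
print(find_pattern(words, [{"LOWER": "iphone"}]))  # [(3, 4), (7, 8)]
print(find_pattern(words, [{"ORTH": "iPhone"}, {"IS_DIGIT": True}]))  # [(3, 5)]
```

The first call returns nothing because a lowercased token can never contain the capital "P" in "iPhone"; the second shows that a lowercase LOWER value matches every capitalization.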

We will now pass one of the news articles through the matcher:

```python
doc = nlp(df.content.iloc[14])  # annotate the 15th article
matches = matcher(doc)          # run the matcher; this triggers add_product_ent
```

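This code is relatively simple, with only two steps:

1. We run the article at index 14 of the content column through the spaCy pipeline to get an annotated document.
2. We run the matcher on that document. It returns the list of matches and calls add_product_ent for each one, which adds the product entities to doc.ents.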
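One practical wrinkle: when both patterns match the same text, as they can for "iPhone 5", the matcher returns two overlapping matches and the callback runs twice. The named entity recognizer may also have already tagged "iPhone" as an organization. A token can belong to only one entity, and spaCy 2.1 and later reject overlapping spans assigned to doc.ents with an error. spaCy 2.1.4+ offers spacy.util.filter_spans, which resolves overlaps by preferring the longest span. The sketch below models that longest-first rule on plain (start, end) tuples; keep_longest is a hypothetical helper, not a spaCy function.

```python
def keep_longest(spans):
    """Drop overlapping (start, end) spans, preferring longer ones.

    Among spans of equal length, the one that starts first wins.
    """
    ordered = sorted(spans, key=lambda s: (s[1] - s[0], -s[0]), reverse=True)
    taken = set()  # token indices already covered by a kept span
    kept = []
    for start, end in ordered:
        if not taken.intersection(range(start, end)):
            kept.append((start, end))
            taken.update(range(start, end))
    return sorted(kept)


# "Apple sold the iPhone 5": pattern1 matches (3, 4), pattern2 matches (3, 5)
print(keep_longest([(3, 4), (3, 5)]))          # [(3, 5)]
print(keep_longest([(0, 1), (3, 4), (3, 5)]))  # [(0, 1), (3, 5)]
```

In spaCy itself, the equivalent step would be to build the new Span inside the callback and pass it, together with the existing doc.ents, through filter_spans before assigning the result back to doc.ents; check the filter_spans documentation for your version.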

Now that the matcher is set up, we need to add it to the pipeline so that spaCy uses it automatically.

Adding the matcher to the pipeline

Calling the matcher separately is somewhat cumbersome. To add it to the pipeline, we have to wrap it in a function, matcher_component, that takes a document, runs the matcher on it, and returns the document.
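A minimal sketch of such a wrapper follows. Instead of a function that refers to a global matcher, it uses a small factory, make_matcher_component (a hypothetical helper name), that closes over whichever matcher you pass in. The component runs the matcher for its side effect, since add_product_ent already writes the entities into doc.ents, and then returns the document. FakeMatcher exists only so the sketch runs without spaCy installed.

```python
def make_matcher_component(matcher):
    """Wrap a matcher as a pipeline component: doc in, same doc out."""
    def matcher_component(doc):
        # The on_match callbacks modify doc as a side effect, so the
        # returned list of matches is not needed here
        matcher(doc)
        return doc  # the pipeline expects the document back
    return matcher_component


class FakeMatcher:
    """Test double that records the docs it was called on."""
    def __init__(self):
        self.seen = []

    def __call__(self, doc):
        self.seen.append(doc)
        return []


fake = FakeMatcher()
component = make_matcher_component(fake)
doc = {"text": "The iPhone sold well"}  # any object works with the fake
print(component(doc) is doc, fake.seen == [doc])  # True True
```

With spaCy v2 and the real Matcher, this would be added with nlp.add_pipe(make_matcher_component(matcher), last=True). spaCy v3 no longer accepts plain functions in add_pipe: the component has to be registered under a name with the @Language.component decorator and then added by that name.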

The spaCy pipeline calls its components as functions and always expects the annotated document to be returned; returning anything else could break the pipeline. We can then add the matcher to the end of the main pipeline:

```python
nlp.add_pipe(matcher_component, last=True)
```
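Why the return value matters can be seen in a toy pipeline runner. This is a simplified model, not spaCy's code: each component receives whatever the previous one returned, so a component that forgets its return statement hands None to the next one. run_pipeline, tag_words, and forgets_return are hypothetical, and the explicit None check makes the failure surface at the component that caused it.

```python
def run_pipeline(doc, components):
    """Call each component on the output of the previous one."""
    for component in components:
        doc = component(doc)
        if doc is None:
            # Fail loudly instead of passing None downstream
            raise TypeError(f"{component.__name__} returned None instead of a doc")
    return doc


def tag_words(doc):
    doc["tags"] = ["X"] * len(doc["words"])
    return doc


def forgets_return(doc):
    doc["seen"] = True  # modifies doc but implicitly returns None


doc = {"words": ["The", "iPhone"]}
print(run_pipeline(doc, [tag_words])["tags"])  # ['X', 'X']
try:
    run_pipeline(doc, [forgets_return, tag_words])
except TypeError as err:
    print(err)  # forgets_return returned None instead of a doc
```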

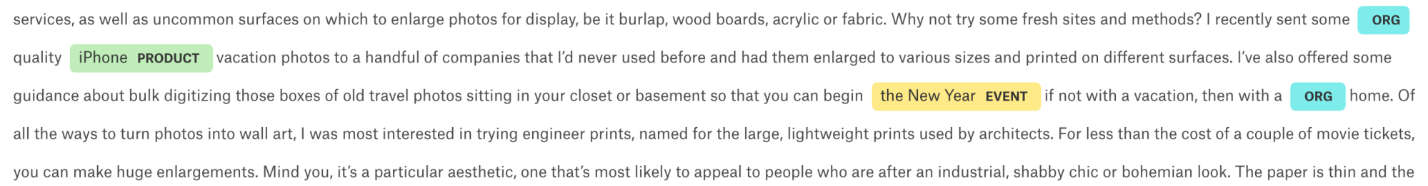

The matcher is now the last component of the pipeline, and iPhones will get tagged according to the matcher's rules. And boom! All mentions of the word "iPhone" (in any capitalization) are now tagged as named entities of the type PRODUCT. You can validate this by displaying the entities with displacy, as in the following code and the screenshot:

```python
from spacy import displacy

displacy.render(doc, style='ent', jupyter=True)
```

spaCy now finds the iPhone as a product

Summary

In this article, we learned about rule-based matching in natural language processing. We created a matcher and added a custom on_match function to it, building a rule-based matcher that classifies the word "iPhone" as a product. Finally, we added the matcher to the pipeline so that spaCy uses it automatically to tag the iPhone as a product. Explore NLP to automatically process language with Jannes Klaas’ debut book Machine Learning for Finance.

This article is an excerpt from Machine Learning for Finance by Jannes Klaas and published by Packt Publishing, a book which introduces the study of machine learning and deep learning algorithms for financial practitioners.


Last Updated (Monday, 20 May 2019)