| Programmer's Guide To Theory - Turing Thinking |

| Written by Mike James | ||||

| Wednesday, 18 June 2025 | ||||

Page 1 of 3 Turing machines are the basis of computer science, but perhaps not in the way that you might think. There is a way of thinking about Turing machines that is special. A Programmers Guide To Theory

Now available as a paperback and ebook from Amazon.

Contents

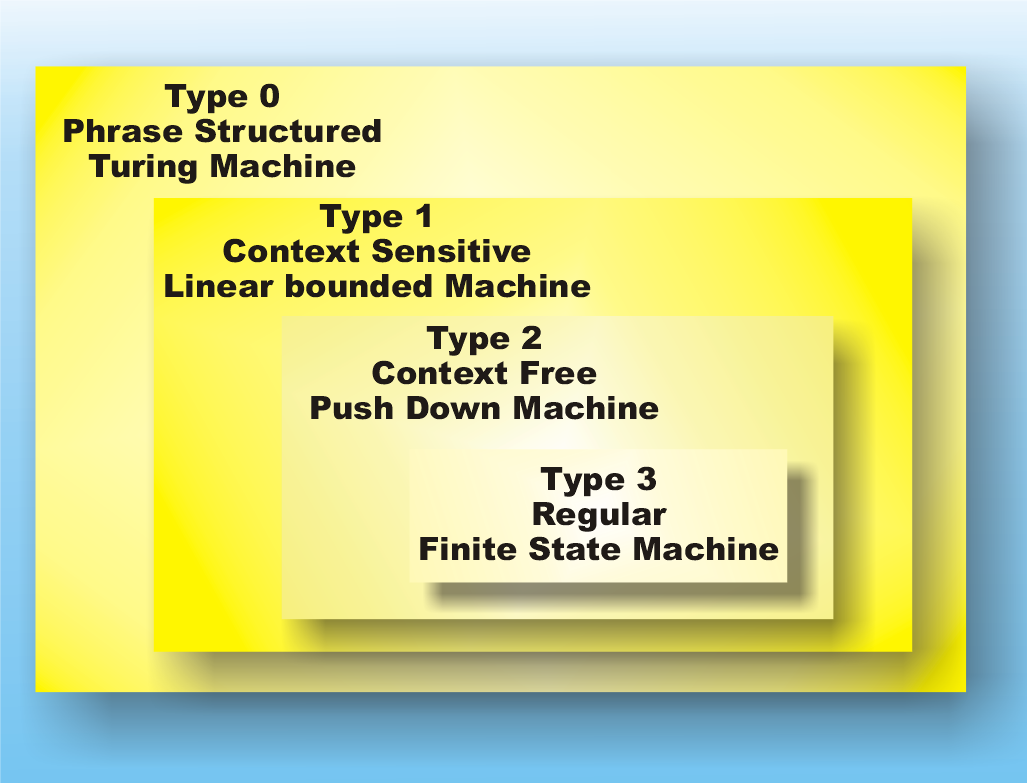

<ASIN:1871962439> <ASIN:1871962587> The type of language that a machine can generate or recognize helps us classifiy the power of the machine. The simplest machine, a finite state machine can only work with regular languages. A pushdown machine extends this to context free languages. You can probably guess that, if the language corresponding to a pushdown machine is called context-free, the next step up is going to be ‘context-sensitive’. This is true, but the machine that accepts a context-sensitive sequence is a little more difficult to describe. Instead of a stack, the machine needs a ‘tape’, which stores the input symbols. It can read or write the tape and then move it to the left or the right. Yes, it’s a Turing machine! A Turing machine is more powerful than a pushdown machine because it can read and write symbols in any order, i.e. it isn’t restricted to LIFO order. However, it is also more powerful for another, more subtle, reason. A pushdown machine can only ever use an amount of storage that is proportional to the length of its input sequence, but a machine with a tape that can move in any direction can, in principle, use any amount of storage. For example, the machine could simply write a 1 on the tape and move one place left, irrespective of what it finds on the tape. Such a machine would create an unbounded sequence of 1s on the tape and so use an unbounded amount of storage. It turns out that a Turing machine is actually too powerful for the next step up the ladder. What we need is a Turing machine that is restricted to using just the portion of tape that its input sequence is written on, a ‘linear bounded machine’. You can think of a linear bounded machine as a Turing machine with a short tape or a full Turing machine as a linear bounded machine that has as long a tape as it needs. The whole point of this is that a linear bounded machine accepts context-sensitive languages defined by context-sensitive grammars that have rules of the form: anything1 → anything2 but with the restriction that the sequence on the output side of the rule is as least as long as the input side. Consider: A<S1> → A<S1>A as an example of a context-sensitive rule. Notice that you can think of it as the rule: <S1> → <S1>A but only applied when an A comes before <S1> and hence the name “context-sensitive”. A full Turing machine can recognize any sequence produced by any grammar – generally called a ‘phrase structured’ grammar. In fact, as we already know, a Turing machine can be shown to be capable of computing anything that can reasonably be called computable and hence it is the top of the hierarchy as it can implement any grammar. If there was a grammar that a Turing machine couldn't cope with then the Church Turing Thesis wouldn't hold. Notice that a phrase structured grammar is just a context-sensitive grammar that can also make sequences shrink as well as grow.

That’s our final classification of languages and the power of computing machines. Be warned, there are lots of other equivalent, or nearly equivalent, ways of doing the job. The reason is, of course, that there are many different formulations of computing devices that have the same sort of power. Each one of these seems to produce a new theory expressed in its own unique language. There are also finer divisions of the hierarchy of computation, not based on grammars and languages. However, this is where it all started and it is enough for the moment. |

||||

| Last Updated ( Wednesday, 18 June 2025 ) |