NAG for GPGPU

Written by Harry Fairhead

Tuesday, 01 June 2010

The GPU is an ideal candidate for simple number crunching and a new library turns it into a powerful random number generator.

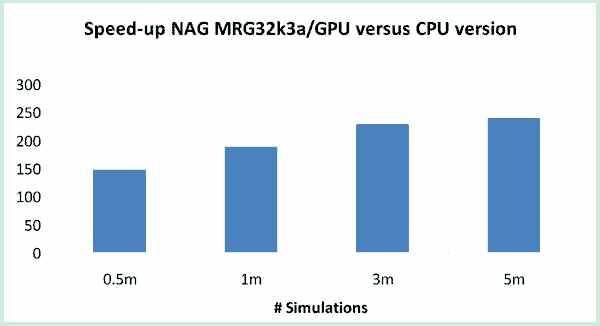

Following our recent GPGPU news item, GPGPU optimisation, it seems that everything happening at the moment involves using the GPU to create a personal supercomputer. Now NAG (Numerical Algorithms Group), well known for its professional-quality numerical code, has released a beta of NAG Numerical Routines for GPUs.

In case you have missed what the fuss is all about: the GPU, or Graphics Processing Unit, that most PCs have as standard is capable of performing large numbers of simultaneous operations. So many, in fact, that GPUs are in theory more powerful than the CPU for many types of calculation. Unfortunately, programming a GPU isn't an easy task, so any help you can get is welcome.

Currently, the prototype library includes components for Monte Carlo simulation: a pseudo-random number generator, a quasi-random sequence generator and a Brownian bridge constructor. The random number generators provide three output distributions (uniform, exponential and Normal), implemented in both single and double precision.

Random number generators are an obvious task for the GPU, as random numbers are needed in large quantities and the algorithms to compute them are relatively short. Various benchmarks indicate a speedup of over 50 times compared to random number generation on the CPU.
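To give a feel for what these Monte Carlo components do, here is a minimal sketch in plain Python running on the CPU. It is not the NAG library's API and not GPU code; the function names are hypothetical and the methods used (inverse transform for the exponential distribution, Box-Muller for the Normal distribution, bisection for the Brownian bridge) are standard textbook choices, assumed here for illustration rather than taken from NAG's implementation.

```python
import math
import random


def exponential_from_uniform(u, rate=1.0):
    """Inverse-transform sampling: map u in [0, 1) to an Exp(rate) value."""
    return -math.log(1.0 - u) / rate


def normal_pair_from_uniform(u1, u2):
    """Box-Muller transform: two uniforms in [0, 1) -> two independent N(0, 1) values."""
    r = math.sqrt(-2.0 * math.log(1.0 - u1))  # 1 - u1 avoids log(0)
    theta = 2.0 * math.pi * u2
    return r * math.cos(theta), r * math.sin(theta)


def brownian_bridge_path(normals, total_time=1.0):
    """Build a Brownian path at 2**k equally spaced times by bisection.

    normals must hold 2**k standard Normal values. The first one fixes
    the end point; the rest fill in midpoints, coarsest level first.
    Returns the path values at times 0, dt, 2*dt, ..., total_time.
    """
    n = len(normals)
    if n == 0 or n & (n - 1):
        raise ValueError("need a power-of-two number of normals")
    dt = total_time / n
    path = [0.0] * (n + 1)  # path[0] is W(0) = 0
    path[n] = math.sqrt(total_time) * normals[0]
    used = 1
    step = n
    while step > 1:
        half = step // 2
        # Conditional on both end points, the midpoint is Normal with
        # mean equal to their average and variance (half * dt) / 2.
        std = math.sqrt(half * dt / 2.0)
        for left in range(0, n, step):
            mean = 0.5 * (path[left] + path[left + step])
            path[left + half] = mean + std * normals[used]
            used += 1
        step = half
    return path


if __name__ == "__main__":
    rng = random.Random(2010)  # fixed seed so runs are repeatable
    uniforms = [rng.random() for _ in range(8)]
    print("exponential:", [exponential_from_uniform(u) for u in uniforms[:3]])
    normals = []
    for i in range(0, 8, 2):
        normals.extend(normal_pair_from_uniform(uniforms[i], uniforms[i + 1]))
    print("path:", brownian_bridge_path(normals[:4]))
```

Each transform is a short, independent calculation on its inputs, which is why this kind of workload maps naturally onto the many simultaneous operations a GPU can perform.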

If you would like more information on using a GPU for random number generation and some examples of the code in action then visit Professor Mike Giles' page on his ongoing research.

Access to the beta is free to academics as long as they sign a collaborative agreement. Commercial access has to be negotiated.

http://www.nag.co.uk/numeric/gpus/


Last Updated ( Wednesday, 02 June 2010 )