Machine Learning Applied to Game of Thrones

Written by Nikos Vaggalis

Saturday, 11 May 2019


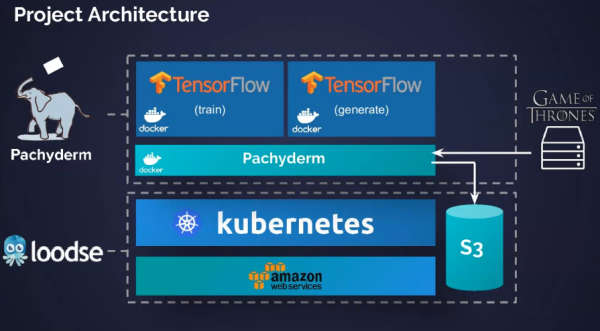

No one wants the beloved series to end. Some, like the geeks at Pachyderm, have gone to great lengths to extend its life span, to the point of employing ML to serve the Iron Throne. This is a new example of style transfer, where ML identifies the essential characteristics of a genre in order to create its own examples, as we've seen before with art and even with cooking.

But first of all, what is Pachyderm and where does the name come from? "Pachyderm" derives from the Greek word 'παχύ-δερμο', meaning 'thick-skinned', a reference to the elephant in the company's logo. The elephant in turn signals Pachyderm's relationship to Hadoop, which also has an elephant logo, with Pachyderm posing as its newer and better counterpart. Like Hadoop, Pachyderm is an analytics engine that follows the same philosophy, but it is built to go where Hadoop falls short. Instead of writing jobs in Java and running them on the JVM, it uses Docker containers deployed on Kubernetes, and those containers can run jobs written in any language. Instead of HDFS, it uses the Pachyderm File System, and instead of MapReduce, it uses Pachyderm Pipelines to chain containers together, as this GoT ML example shows. For more about the latest release, see Pachyderm Gets Faster And Gets Funding.

The aim of Pachyderm is to let programmers who are not familiar with MapReduce or Java write their analytics applications with whatever tools they see fit. Developers can therefore do their big data processing without specialized know-how, which is why Pachyderm is positioned as a much more accessible option than Hadoop. The GoT example involves deploying Pachyderm as a container on Kubernetes, backed by an AWS S3 bucket, together with a repository holding the input and output data.
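Pachyderm pipelines are declared in a JSON spec that names a Docker image, the command to run inside it, and the input repo to read from. As a rough sketch of how the GoT example's train-then-generate stages might be chained, the Python below builds two such specs. The field names (`pipeline.name`, `transform.image`, `transform.cmd`, `input.pfs.repo`, `input.pfs.glob`) and the `/pfs/<repo>` and `/pfs/out` mount points follow Pachyderm's 1.x pipeline spec format; the repo name, image, and script paths are hypothetical placeholders, not the project's actual values.

```python
import json

# Hypothetical placeholders: not the names used by the real GoT project.
INPUT_REPO = "got-scripts"
IMAGE = "example/got-rnn:latest"

def pipeline_spec(name, input_repo, cmd):
    """Build a minimal Pachyderm pipeline spec as a Python dict."""
    return {
        "pipeline": {"name": name},
        "transform": {"image": IMAGE, "cmd": cmd},
        # A glob of "/" hands the whole input commit to one job (a single
        # datum), which suits training that needs all the data at once.
        "input": {"pfs": {"repo": input_repo, "glob": "/"}},
    }

# Stage 1: train on the labeled scripts. Pachyderm mounts the input repo at
# /pfs/<repo> and commits whatever the job writes under /pfs/out.
train = pipeline_spec(
    "train", INPUT_REPO,
    ["python3", "/code/train.py", "/pfs/got-scripts", "/pfs/out"])

# Stage 2: a pipeline's output repo is named after the pipeline, so
# "generate" reads the model files committed by "train".
generate = pipeline_spec(
    "generate", "train",
    ["python3", "/code/generate.py", "/pfs/train", "/pfs/out"])

if __name__ == "__main__":
    for spec in (train, generate):
        print(json.dumps(spec, indent=2))
```

Chaining works purely through repo names: because the second spec's input repo is the first pipeline's name, a new commit from the training stage is what kicks off generation.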

The input data is the preprocessed GoT script of Season 1 Episode 1. It has been labeled to help the Recurrent Neural Network distinguish dialog from other elements of the script, regarded as "exposition": mainly stage directions and context settings. For example:

> `<open-exp> First scene opens with three Rangers riding through a tunnel , leaving the Wall , and going into the woods <eos> (Eerie music in background) One Ranger splits off and finds a campsite full of mutilated bodies , including a child hanging from a tree branch <eos> A birds-eye view shows the bodies arranged in a shield-like pattern <eos> The Ranger rides back to the other two <eos> <close-exp>`

Pachyderm takes this locally stored data and starts the first pipeline, feeding the data to a container running TensorFlow to train the model. After processing, TensorFlow writes the model's output, as well as the TF-specific files, back to the repo. Then the second pipeline begins: it takes the files output by the previous step as its input and feeds them to another TensorFlow instance in order to generate the scripts. It is as simple as that, without the complexity of MapReduce.
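Before training, the labeled text has to become a sequence of integer ids that the RNN can consume, with the model learning to predict each next token. The following is a minimal sketch of that preparation, assuming simple whitespace tokenization at the word level (the spaced-out commas in the sample hint at this, but the project's real preprocessing may differ). `tokenize`, `build_vocab` and `training_pairs` are hypothetical helpers, and the sample is a shortened excerpt of the labeled script.

```python
# Shortened excerpt of the labeled Season 1 Episode 1 script.
SAMPLE = (
    "<open-exp> First scene opens with three Rangers riding through a tunnel , "
    "leaving the Wall , and going into the woods <eos> "
    "The Ranger rides back to the other two <eos> <close-exp>"
)

def tokenize(text):
    """Assumed word-level scheme: split on whitespace, so tags such as
    <eos> and the pre-spaced punctuation each become one token."""
    return text.split()

def build_vocab(tokens):
    """Map each distinct token to an integer id, in order of first appearance."""
    vocab = {}
    for tok in tokens:
        vocab.setdefault(tok, len(vocab))
    return vocab

def training_pairs(ids, seq_len):
    """Next-token prediction: each target window is the input window shifted by one."""
    return [
        (ids[i:i + seq_len], ids[i + 1:i + seq_len + 1])
        for i in range(len(ids) - seq_len)
    ]

if __name__ == "__main__":
    tokens = tokenize(SAMPLE)
    vocab = build_vocab(tokens)
    ids = [vocab[t] for t in tokens]
    pairs = training_pairs(ids, seq_len=5)
    print(len(tokens), "tokens,", len(vocab), "distinct,", len(pairs), "pairs")
    print("first pair:", pairs[0])
```

Keeping the `<open-exp>`, `<close-exp>` and `<eos>` tags as ordinary tokens is what lets a network of this kind learn where exposition starts and stops, alongside the dialog itself.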

The first scripts generated didn't make a lot of sense, but their quality can be improved a lot by training the TensorFlow model to a higher accuracy and by feeding the network more scripts. Be aware, however, that increasing the accuracy also increases the processing time, from hours to even days, depending on the underlying infrastructure. The project's GitHub repo contains scripts generated with a low model accuracy, which is not bad considering the whole process took under an hour. While they won't be winning any Emmys, they represent a considerable feat of style transfer insofar as they capture the "very essence" of GoT. This neural net didn't know English, much less grammar, but it did pick up on some pieces of structure:

> [ OLENNA ]: Then where would she serve that Grand Maester and nine are men ? And soon , we need to rule that to a man who wore on the walls . .
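Text generators of this kind usually write a script one token at a time: the trained network scores every vocabulary entry, one token is sampled from the resulting distribution, and that token is fed back in as input. A weakly trained model produces flatter, less reliable distributions, which helps explain lines like the OLENNA one. The sketch below shows temperature sampling, a common choice for this step; whether the project uses it, and with which settings, is an assumption, and `toy_model` is a hypothetical stand-in for the trained RNN.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; temperature < 1 sharpens, > 1 flattens."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next(logits, temperature, rng):
    """Draw one token id according to the softmax probabilities."""
    probs = softmax(logits, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

def generate(step_fn, start_id, length, temperature=1.0, seed=0):
    """Autoregressive generation: each sampled id is appended and fed back in.

    step_fn stands in for the trained RNN: given the ids so far, it returns
    one logit per vocabulary entry.
    """
    rng = random.Random(seed)
    ids = [start_id]
    for _ in range(length):
        ids.append(sample_next(step_fn(ids), temperature, rng))
    return ids

# Hypothetical toy vocabulary and model, for illustration only.
VOCAB = ["[", "OLENNA", "]", ":", "Then", "where", "<eos>"]

def toy_model(ids):
    # Strongly favours the vocabulary entry after the last one emitted.
    nxt = (ids[-1] + 1) % len(VOCAB)
    return [5.0 if i == nxt else 0.0 for i in range(len(VOCAB))]

if __name__ == "__main__":
    for t in (0.1, 1.0, 3.0):
        ids = generate(toy_model, 0, 6, temperature=t)
        print(t, " ".join(VOCAB[i] for i in ids))
```

At a low temperature the toy model reproduces its preferred sequence essentially every time, while higher temperatures let less likely tokens slip in; a real, undertrained network sits closer to the noisy end even at moderate settings.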

Adding more scripts to the repo automatically triggers the pipelines again, retraining the model and generating new scripts. The project's repo also includes instructions on how to replicate the experiment yourself, so have a look and judge for yourself whether the output holds true to the series' plot. Say, why not let the network write Season 9 and keep everyone happy?

More Information

Pachyderm Webinar: From Zero To Production ML in 30 mins using Loodse & Pachyderm

Related Articles

- Pachyderm Gets Faster And Gets Funding
- More Efficient Style Transfer Algorithm
- A Neural Net Creates Movies In The Style Of Any Artist
- Style Transfer Applied To Cooking - The Case Of The French Sukiyaki
- The Ai-Da Delusion - Machines Don't Have Souls
- How Do Open Source Deep Learning Frameworks Stack Up?
- Neural Networks In JavaScript With Brain.js
- Dreams Come To Life With Machine Learning


Last Updated (Sunday, 12 May 2019)