TensorFlow Lite For Mobiles

Written by Kay Ewbank

Monday, 20 November 2017

Google has announced a developer preview of TensorFlow Lite, a version of TensorFlow for mobile and embedded devices. TensorFlow is Google's open source tool for parallel computation, including implementing neural networks and other machine learning methods. It is designed to make working with neural networks easier, and is generally seen as more general and easier to use than the alternatives. The new Lite version provides low-latency inference for on-device machine learning models. Until now, TensorFlow supported mobile and embedded deployment of models through the TensorFlow Mobile API, and the developers say TensorFlow Lite should be seen as the evolution of TensorFlow Mobile.

The highlights of the new Lite version start with the fact that it is lightweight, so it can be used for inference with on-device machine learning models while keeping the binary size small. It is also cross-platform, though for the moment that means Android and iOS. Perhaps most importantly, the developers say it is fast: it has been optimized for mobile devices, so models load quickly, and it can make use of hardware acceleration.

While running machine learning models on a mobile device might seem unlikely, the developers of TensorFlow Lite point out that a growing number of mobile devices now incorporate purpose-built custom hardware to process ML workloads more efficiently. TensorFlow Lite supports the Android Neural Networks API to take advantage of these accelerators as they become available. If accelerator hardware isn't available, TensorFlow Lite falls back to optimized CPU execution.

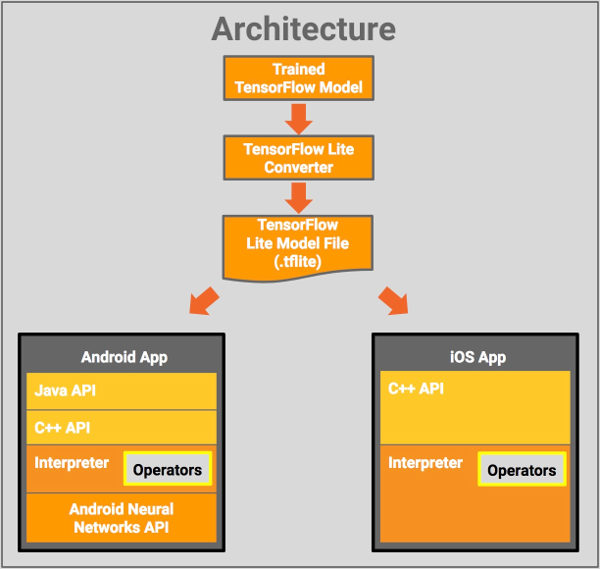

A TensorFlow Lite deployment starts with a trained TensorFlow model saved on disk, which a converter turns into the TensorFlow Lite file format. This model file format is based on FlatBuffers and has been optimized for maximum speed and minimum size. The model file is then deployed in a mobile app, where the C++ API loads the model and invokes an interpreter that executes it using a set of operators. On Android, the C++ API is wrapped in a convenience Java API. The interpreter supports selective operator loading, so only the operators a model actually uses need to be loaded, which minimizes the memory needed.

TensorFlow Lite comes with a number of models that are trained and optimized for mobile, including a class of vision models that can identify objects across 1,000 different classes, and a conversational model that provides one-touch replies to incoming chat messages.
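To make the idea of selective operator loading more concrete, here is a minimal sketch in Python. It is a toy under stated assumptions, not the TensorFlow Lite API: the "model" is a plain list of nodes rather than a FlatBuffers file, and the names `ALL_KERNELS` and `ToyInterpreter` are hypothetical. What it illustrates is that the interpreter registers only the operator kernels the model references, so an unused operator (`NEG` here) is never loaded. In the real C++ library this job is done by an operator resolver handed to the interpreter builder.

```python
# Toy sketch of selective operator loading. Hypothetical names; this is NOT
# the TensorFlow Lite API, and the "model" is a plain Python list rather than
# a FlatBuffers file.
from typing import Callable, Dict, List, Sequence, Tuple

Tensor = List[float]
Kernel = Callable[..., Tensor]
# A node is (operator name, input tensor names, output tensor name).
Node = Tuple[str, Tuple[str, ...], str]

# Every kernel this hypothetical runtime could provide.
ALL_KERNELS: Dict[str, Kernel] = {
    "ADD": lambda a, b: [x + y for x, y in zip(a, b)],
    "MUL": lambda a, b: [x * y for x, y in zip(a, b)],
    "RELU": lambda a: [max(0.0, x) for x in a],
    "NEG": lambda a: [-x for x in a],
}


class ToyInterpreter:
    def __init__(self, model: Sequence[Node]) -> None:
        self.model = list(model)
        needed = {op for op, _, _ in self.model}
        missing = needed.difference(ALL_KERNELS)
        if missing:
            raise ValueError(f"unsupported operators: {sorted(missing)}")
        # Register only the kernels this model actually uses.
        self.kernels: Dict[str, Kernel] = {op: ALL_KERNELS[op] for op in needed}

    def invoke(self, inputs: Dict[str, Tensor]) -> Dict[str, Tensor]:
        tensors = dict(inputs)
        # Nodes are assumed to be stored in execution (topological) order.
        for op, in_names, out_name in self.model:
            tensors[out_name] = self.kernels[op](*(tensors[n] for n in in_names))
        return tensors


# hidden = relu(x * w); y = hidden + b
model = [
    ("MUL", ("x", "w"), "xw"),
    ("RELU", ("xw",), "hidden"),
    ("ADD", ("hidden", "b"), "y"),
]
interp = ToyInterpreter(model)
print(sorted(interp.kernels))  # ['ADD', 'MUL', 'RELU'] (NEG is never loaded)
out = interp.invoke({"x": [1.0, -2.0], "w": [3.0, 3.0], "b": [0.5, 0.5]})
print(out["y"])  # [3.5, 0.5]
```

Passing a model that uses an operator outside the catalogue raises `ValueError` at construction time, which reflects the trade-off of a stripped-down runtime: it stays small, but it can only execute models whose operators it was built with.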

Last Updated (Monday, 20 November 2017)