Computational Photography On A Chip

Written by David Conrad

Thursday, 21 February 2013

Computational photography is a growing field, and it is now mature enough for some of its approaches and algorithms to have found their way into silicon. MIT has a new low-power chip that could revolutionize photography.

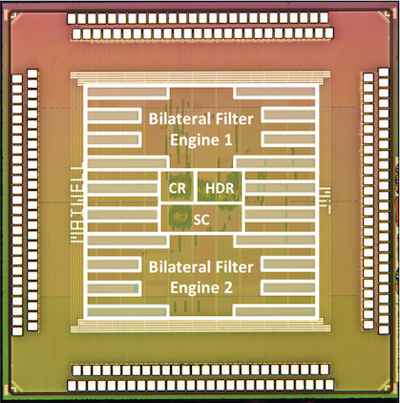

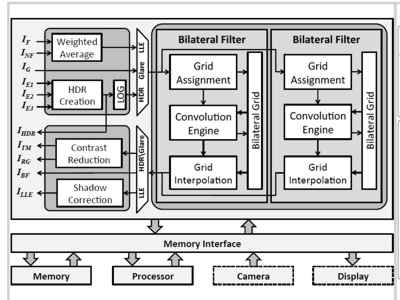

A chip built by a team at MIT's Microsystems Technology Lab and funded by Foxconn provides most of what you need to put computational photography right inside the camera. It uses so little power that it could be built into a phone or a digital camera, providing the sort of processing that is usually performed by specialist software after the photo has been uploaded to a PC. The chip is quite complicated, but its main element is an implementation of a bilateral filter array. A bilateral filter generalizes the simple linear 2D Gaussian smoothing filter into a more powerful non-linear filter that smooths while preserving edge detail. Each output pixel is a weighted average of its neighbors, and the weight depends not only on how far away a neighbor is but also on how much its brightness differs from that of the central pixel. Neighbors on the other side of an edge, where the brightness changes rapidly, contribute very little, so edges are left largely intact while flat regions are smoothed.
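
To make the weighting concrete, here is a minimal brute-force bilateral filter in Python. It is a sketch of the textbook filter, not of the MIT chip's hardware (which the article describes in terms of bilateral filter grids), and it assumes a grayscale image stored as a list of rows of floats in [0, 1]. The function name `bilateral_filter` and the defaults for `radius`, `sigma_s` (spatial spread, in pixels) and `sigma_r` (brightness spread) are illustrative choices.

```python
import math


def bilateral_filter(img, radius=2, sigma_s=2.0, sigma_r=0.1):
    """Brute-force bilateral filter for a grayscale image (list of rows).

    Each output pixel is a weighted average of its neighbours; the weight is
    the product of a spatial Gaussian (distance from the centre pixel) and a
    range Gaussian (difference in brightness from the centre pixel).
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            centre = img[y][x]
            total = 0.0
            norm = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w:
                        # Spatial weight: nearby pixels count more.
                        ws = math.exp(-(dx * dx + dy * dy) / (2 * sigma_s ** 2))
                        # Range weight: pixels of similar brightness count more,
                        # so pixels across an edge are almost ignored.
                        diff = img[ny][nx] - centre
                        wr = math.exp(-(diff * diff) / (2 * sigma_r ** 2))
                        total += ws * wr * img[ny][nx]
                        norm += ws * wr
            # norm >= 1 because the centre pixel always has weight 1.
            out[y][x] = total / norm
    return out


# Usage: a sharp step from dark (0.2) to bright (0.8).
step = [[0.2] * 4 + [0.8] * 4 for _ in range(5)]
edge_kept = bilateral_filter(step)             # edge-preserving smoothing
blurred = bilateral_filter(step, sigma_r=1e6)  # huge sigma_r: plain Gaussian blur
print(round(edge_kept[2][3], 3), round(blurred[2][3], 3))
```

With these settings the dark pixel next to the edge stays at about 0.2, while the version with a huge `sigma_r`, which behaves like an ordinary Gaussian blur, pulls it up to roughly 0.42. The double loop costs O(radius²) work per pixel, which is one reason practical implementations use faster approximations such as grid-based ones.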

This simple filter has a great many uses, including reducing noise, correcting errors in exposure and contrast, and changing resolution. The chip has two bilateral filter grids and some additional hardware that make it even more useful.

For example, it can take three images shot at different exposures and merge them into a single High Dynamic Range (HDR) image. This is a big step forward for HDR photography, which has previously needed post-processing to combine the images. The chip can do the job in a few hundred milliseconds for a 10-megapixel image.

HDR images are stunning, but they need HDR displays to show them accurately. So how can the photographer view them in real time? Once again the bilateral filter comes to the rescue: it can be used to compress the dynamic range so that the image can be shown on the camera's standard display.

Other uses include glare reduction. Modern cameras have sophisticated exposure control, but this doesn't stop areas of the image falling outside the representable range. The bilateral filter can perform local contrast reduction or enhancement to improve the overall look of the image. Another technique combines a flash exposure with a natural-light exposure, using the flash image to supply detail that is added to the natural-light image. The result is a well-exposed image that looks natural.
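
The HDR merge and dynamic-range compression described above can be sketched in software in two steps, assuming grayscale images stored as lists of rows, a linear sensor response and known exposure times. `merge_exposures` averages each exposure's radiance estimate (pixel value divided by exposure time), trusting mid-tones and ignoring black or clipped pixels. `tone_map` then follows the well-known base/detail approach of Durand and Dorsey: bilateral-filter the log luminance to get a smooth "base" layer, compress only that layer, and add the fine "detail" back unchanged. This illustrates the idea only; it is not the chip's actual datapath, and the function names, hat weighting, filter parameters and 50:1 target contrast are all illustrative assumptions.

```python
import math


def bilateral(img, radius=2, sigma_s=2.0, sigma_r=0.4):
    """Brute-force bilateral filter on a grayscale image (list of rows)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            num = den = 0.0
            for ny in range(max(0, y - radius), min(h, y + radius + 1)):
                for nx in range(max(0, x - radius), min(w, x + radius + 1)):
                    d2 = (ny - y) ** 2 + (nx - x) ** 2
                    diff = img[ny][nx] - img[y][x]
                    wgt = math.exp(-d2 / (2 * sigma_s ** 2)
                                   - diff * diff / (2 * sigma_r ** 2))
                    num += wgt * img[ny][nx]
                    den += wgt
            out[y][x] = num / den
    return out


def merge_exposures(exposures, times):
    """Merge bracketed exposures (values in [0, 1]) into one radiance map.

    Assumes a linear sensor response and known exposure times.
    """
    h, w = len(exposures[0]), len(exposures[0][0])
    radiance = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            num = den = 0.0
            for img, t in zip(exposures, times):
                v = img[y][x]
                wgt = 1.0 - abs(2.0 * v - 1.0)  # hat: 1 at mid-grey, 0 at black/clipped
                num += wgt * v / t              # this exposure's radiance estimate
                den += wgt
            if den == 0.0:
                # Black or clipped in every exposure: fall back to a plain average.
                est = [img[y][x] / t for img, t in zip(exposures, times)]
                radiance[y][x] = sum(est) / len(est)
            else:
                radiance[y][x] = num / den
    return radiance


def tone_map(radiance, target_contrast=50.0):
    """Compress dynamic range by shrinking only the bilateral 'base' layer."""
    log_l = [[math.log(max(v, 1e-6)) for v in row] for row in radiance]
    base = bilateral(log_l)  # large-scale, edge-aware structure
    lo = min(min(row) for row in base)
    hi = max(max(row) for row in base)
    span = hi - lo
    # Scale the base so its range fits target_contrast; never expand it.
    scale = min(1.0, math.log(target_contrast) / span) if span > 0 else 1.0
    out = []
    for l_row, b_row in zip(log_l, base):
        row = []
        for lum, b in zip(l_row, b_row):
            detail = lum - b  # fine texture, kept unchanged
            # Brightest base value maps to 1.0; clamp for display.
            row.append(min(1.0, math.exp((b - hi) * scale + detail)))
        out.append(row)
    return out


# Usage: a scene whose right half is 10,000 times brighter than its left half.
scene = [[1.0] * 4 + [10000.0] * 4 for _ in range(3)]  # true radiance
times = [1 / 2, 1 / 128, 1 / 32768]                    # bracketed shutter times
shots = [[[min(1.0, r * t) for r in row] for row in scene] for t in times]
hdr = merge_exposures(shots, times)
ldr = tone_map(hdr)
```

Here a 10,000:1 scene is captured as three simulated exposures, merged back into radiance, and squeezed to about 50:1 for display: the dark half comes out near 0.02 and the bright half at 1.0, while any texture within each half would be carried through by the detail layer.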

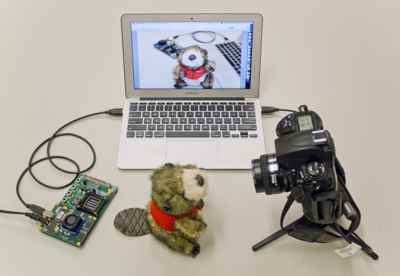

The test chip in real-time action

As well as improving the look of a photograph, the device could find its way into machine vision hardware as a preprocessing step. Many machine vision algorithms require edge enhancement and multi-resolution images, both of which the bilateral filter can provide. The chip is only an experimental prototype at the moment. It is described in a paper presented at this year's International Solid-State Circuits Conference (ISSCC) by Rahul Rithe, Priyanka Raina, Nathan Ickes, Srikanth V. Tenneti and Anantha P. Chandrakasan. It demonstrates that real-time computational photography is possible with hardware low-powered enough to be built into mobile devices.


Last Updated (Thursday, 21 February 2013)