Generate 3D Flythroughs from Still Photos

Written by David Conrad

Sunday, 20 November 2022

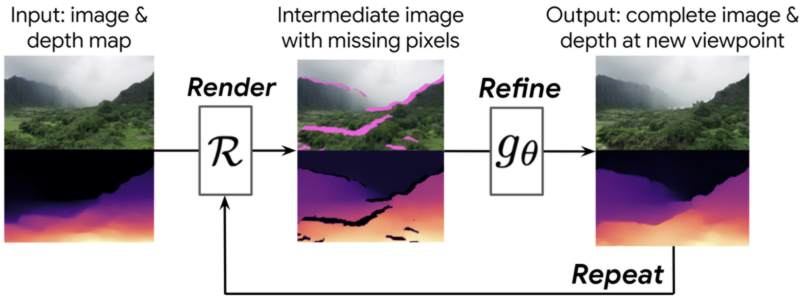

Machine learning has already produced many impressive results using nothing but still photos. Now researchers have extended the technique to give us the visual experience of flying through a landscape, something that could be used in games or in virtual reality scenarios.

Introducing the Infinite Nature project on the Google Research blog, Noah Snavely and Zhengqi Li write:

"We live in a world of great natural beauty, of majestic mountains, dramatic seascapes, and serene forests. Imagine seeing this beauty as a bird does, flying past richly detailed, three-dimensional landscapes. Can computers learn to synthesize this kind of visual experience?"

The answer is yes: a collaboration between researchers at Google Research, UC Berkeley, and Cornell University has already come up with a technique whereby a user can interactively control a camera and choose their own path through a landscape. Called Perpetual View Generation, the technique needs only a single input image of a natural scene to generate a long camera path that "flies" into the scene, generating new scene content as it proceeds.

The way it works is that, given a starting view, like the first image in the figure below, a depth map is first computed. This is then used to render the image forward to a new camera viewpoint, as shown in the middle, resulting in a new image and depth map from that new viewpoint.
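To make the render step concrete, here is a minimal NumPy sketch of forward-warping an image with its depth map to a camera that has moved straight ahead. It is an illustration under stated assumptions, not the project's renderer: the function name `render_forward`, the pinhole camera with its principal point at the image center, and the `focal` and `step` parameters are all hypothetical choices made for this example.

```python
import numpy as np

def render_forward(image, depth, focal, step):
    """Forward-warp `image` (H, W, C) to a camera moved `step` units along +z.

    Assumes a simple pinhole camera (hypothetical, for illustration only).
    Returns the warped image, the warped depth map, and a boolean hole mask.
    """
    h, w = depth.shape
    channels = image.shape[-1]
    cx, cy = (w - 1) / 2.0, (h - 1) / 2.0
    v, u = np.mgrid[0:h, 0:w]

    # Back-project every pixel to a 3D point using its depth.
    x = (u - cx) * depth / focal
    y = (v - cy) * depth / focal
    z = depth - step  # depth as seen from the new camera position

    # Re-project into the new view; drop points behind the camera or off-screen.
    valid = z > 1e-6
    z_safe = np.where(valid, z, 1.0)
    u2 = np.rint(focal * x / z_safe + cx).astype(int)
    v2 = np.rint(focal * y / z_safe + cy).astype(int)
    valid &= (u2 >= 0) & (u2 < w) & (v2 >= 0) & (v2 < h)

    # Z-buffer: sort sources nearest first, keep the first hit per target pixel.
    src = np.flatnonzero(valid)
    src = src[np.argsort(z.ravel()[src], kind="stable")]
    tgt = v2.ravel()[src] * w + u2.ravel()[src]
    tgt_unique, first = np.unique(tgt, return_index=True)
    winners = src[first]

    out_image = np.zeros((h * w, channels), dtype=image.dtype)
    out_depth = np.zeros(h * w, dtype=float)
    holes = np.ones(h * w, dtype=bool)  # True where no source pixel landed
    out_image[tgt_unique] = image.reshape(-1, channels)[winners]
    out_depth[tgt_unique] = z.ravel()[winners]
    holes[tgt_unique] = False
    return (out_image.reshape(h, w, channels),
            out_depth.reshape(h, w),
            holes.reshape(h, w))
```

With `step=0` the function returns the input unchanged. With a positive `step` the source pixels spread apart, so some target pixels receive nothing and are flagged in the hole mask.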

The intermediate image presents a problem: it has holes where the viewer can see behind objects into regions that weren't visible in the starting image. It is also blurry because, now that the camera is closer to objects, pixels from the previous frame have to be stretched to render these now-larger objects. To deal with this, a neural image refinement network learns to take this low-quality intermediate image and output a complete, high-quality image and corresponding depth map. These steps can then be repeated, with this synthesized image as the new starting point. Because both the image and the depth map are refined, this process can be iterated as many times as desired. The system automatically learns to generate new scenery, like mountains, islands, and oceans, as the camera moves further into the scene.
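The render, refine, and repeat cycle can be sketched as a short loop. Everything below is a hypothetical stand-in for illustration: `toy_render` merely slides the view sideways to create holes, and `toy_refine` fills them with the mean of the known pixels, whereas the actual system uses a learned neural refinement network. Only the control flow, in which each refined image and depth map becomes the next starting point, reflects the description above.

```python
import numpy as np

def toy_render(image, depth, shift=2):
    """Hypothetical render step: slide the view sideways, leaving
    `shift` columns of holes on the right edge."""
    holes = np.zeros(depth.shape, dtype=bool)
    holes[:, -shift:] = True
    new_image = np.roll(image, -shift, axis=1)  # np.roll returns a copy
    new_depth = np.roll(depth, -shift, axis=1)
    new_image[holes] = 0.0
    new_depth[holes] = 0.0
    return new_image, new_depth, holes

def toy_refine(image, depth, holes):
    """Hypothetical placeholder for the refinement network: fill holes
    with the mean colour and mean depth of the visible pixels."""
    image, depth = image.copy(), depth.copy()
    image[holes] = image[~holes].mean(axis=0)
    depth[holes] = depth[~holes].mean()
    return image, depth

def generate_flythrough(image, depth, render, refine, num_frames):
    """Iterate render -> refine, feeding each output back in as the next input."""
    frames = []
    for _ in range(num_frames):
        image, depth, holes = render(image, depth)
        image, depth = refine(image, depth, holes)
        frames.append(image)
    return frames
```

Passing `render` and `refine` as arguments keeps the loop independent of how either step is implemented, so a real renderer or a trained network could be swapped in without changing it.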

InfiniteNature-Zero was the topic of an Oral Presentation at last month's European Conference on Computer Vision (ECCV 2022). The project can be found on its GitHub repository.

More Information

Infinite Nature: Generating 3D Flythroughs from Still Photos

https://arxiv.org/abs/2207.11148

InfiniteNature-Zero: Learning Perpetual View Generation of Natural Scenes from Single Images by Zhengqi Li, Qianqian Wang, Noah Snavely, Angjoo Kanazawa

Related Articles

Google Presents --- Motion Stills

Animating Flow In Still Photos

Synthesizing The Bigger Picture


Last Updated ( Sunday, 20 November 2022 )