Deep Learning Restores Time-Ravaged Photos

Written by David Conrad

Sunday, 04 October 2020

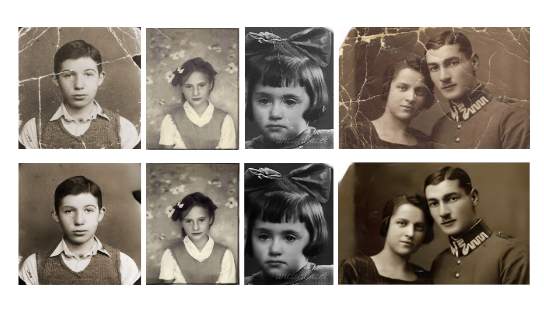

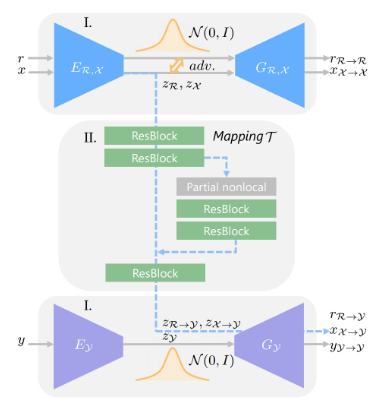

Researchers have devised a novel deep learning approach to repairing the damage suffered by old photographic prints. The project is open source and a PyTorch implementation is downloadable from GitHub. There's also a Colab where you can try it out.

We've encountered neural networks that can colorize old black and white shots, improve photographs of landscapes and even paint portraits in the style of an old master. Here the goal is more modest: to apply a deep learning approach to restoring old photos that have suffered severe degradation.

The researchers, from Microsoft Research Asia in Beijing, China, the University of Science and Technology of China and, now, the City University of Hong Kong, start from the premise that:

"Photos are taken to freeze the happy moments that otherwise gone. Even though time goes by, one can still evoke memories of the past by viewing them. Nonetheless, old photo prints deteriorate when kept in poor environmental condition, which causes the valuable photo content permanently damaged."

As manual retouching of prints is laborious and time-consuming, they set out to design automatic algorithms that can instantly repair old photos for those who wish to bring them back to life.

The researchers presented their work as an oral presentation at CVPR 2020, held virtually in June, and their paper, "Bringing Old Photos Back to Life", which is part of the conference proceedings, is already available. It explains that:

"Unlike conventional restoration tasks that can be solved through supervised learning, the degradation in real photos is complex and the domain gap between synthetic images and real old photos makes the network fail to generalize. Therefore, we propose a novel triplet domain translation network by leveraging real photos along with massive synthetic image pairs. Specifically, we train two variational autoencoders (VAEs) to respectively transform old photos and clean photos into two latent spaces."
The paper's figure caption summarizes the architecture:

"Architecture of our restoration network. (I.) We first train two VAEs: VAE1 for images in real photos r ∈ R and synthetic images x ∈ X, with their domain gap closed by jointly training an adversarial discriminator; VAE2 is trained for clean images y ∈ Y. With VAEs, images are transformed to compact latent space. (II.) Then, we learn the mapping that restores the corrupted images to clean ones in the latent space."

The restoration process works well and is available for others to use. There is a PyTorch implementation on GitHub and also a Colab where you can try it out.
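To make the two-stage scheme described above more concrete, here is a minimal PyTorch sketch of the data flow only: one VAE encodes the degraded photo, a mapping network translates its latent code, and a second VAE decodes the result. It is not the authors' code. The class names `TinyVAE` and `LatentMapping`, the `restore` helper, all layer sizes and the choice to use the latent mean at inference time are assumptions made for illustration. The training losses and the adversarial discriminator are omitted, so the untrained networks here produce noise rather than a restored image.

```python
import torch
from torch import nn


class TinyVAE(nn.Module):
    """Toy convolutional VAE. Layer sizes are illustrative, not the paper's."""

    def __init__(self, latent_channels: int = 8):
        super().__init__()
        # The encoder halves the spatial size twice and outputs mean and log-variance.
        self.encoder = nn.Sequential(
            nn.Conv2d(3, 16, kernel_size=4, stride=2, padding=1),
            nn.ReLU(),
            nn.Conv2d(16, 2 * latent_channels, kernel_size=4, stride=2, padding=1),
        )
        # The decoder mirrors the encoder and returns pixel values in [0, 1].
        self.decoder = nn.Sequential(
            nn.ConvTranspose2d(latent_channels, 16, kernel_size=4, stride=2, padding=1),
            nn.ReLU(),
            nn.ConvTranspose2d(16, 3, kernel_size=4, stride=2, padding=1),
            nn.Sigmoid(),
        )

    def encode(self, x):
        mu, logvar = self.encoder(x).chunk(2, dim=1)
        return mu, logvar

    def sample(self, mu, logvar):
        # Reparameterization trick used during VAE training: z = mu + sigma * eps.
        return mu + torch.randn_like(mu) * torch.exp(0.5 * logvar)

    def decode(self, z):
        return self.decoder(z)


class LatentMapping(nn.Module):
    """Hypothetical stand-in for the stage II mapping between the two latent spaces."""

    def __init__(self, latent_channels: int = 8):
        super().__init__()
        self.net = nn.Sequential(
            nn.Conv2d(latent_channels, 32, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.Conv2d(32, latent_channels, kernel_size=3, padding=1),
        )

    def forward(self, z):
        return self.net(z)


def restore(vae_old, mapping, vae_clean, photo):
    """Encode with VAE1, translate the latent code, decode with VAE2."""
    with torch.no_grad():
        mu, _ = vae_old.encode(photo)  # assumption: use the mean, no sampling, at inference
        return vae_clean.decode(mapping(mu))


vae1 = TinyVAE()           # stage I: would be trained on real old photos and synthetic degraded images
vae2 = TinyVAE()           # stage I: would be trained on clean images
mapping = LatentMapping()  # stage II: would be trained on synthetic pairs

photo = torch.rand(1, 3, 64, 64)  # random tensor standing in for a degraded photo
restored = restore(vae1, mapping, vae2, photo)
print(restored.shape)  # torch.Size([1, 3, 64, 64])
```

Working in the compact latent space rather than on pixels is what the quoted caption emphasizes: in stage II only the mapping between latent codes has to be learned.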

More Information

Bringing Old Photos Back to Life

Old Photo Restoration (PyTorch Implementation) on GitHub

Colab - Bringing Old Photo Back to Life.ipynb

Related Articles

Deep Angel-The AI of Future Media Manipulation


Last Updated (Sunday, 04 October 2020)