Crowd Sourced Solar Eclipse Megamovie

Written by David Conrad

Tuesday, 22 August 2017


Using photographs contributed by observers along the path of totality, Google computers have stitched together images of the 2017 solar eclipse to create the “Eclipse Megamovie.” The resulting movie is intended as a preview of a larger, publicly available dataset of photos that will enable scientists around the world to study the sun and its atmosphere.

UPDATE August 22: Google has released the first version of its Eclipse Megamovie.

Saturday, August 19: On Monday, August 21, 2017, all of North America will experience an eclipse of the sun. Given favorable weather, those within the path of totality, which will run from Oregon in the northwest to South Carolina in the southeast, will witness a total solar eclipse, in which the disc of the moon completely covers the sun, allowing the sun's corona to be seen.

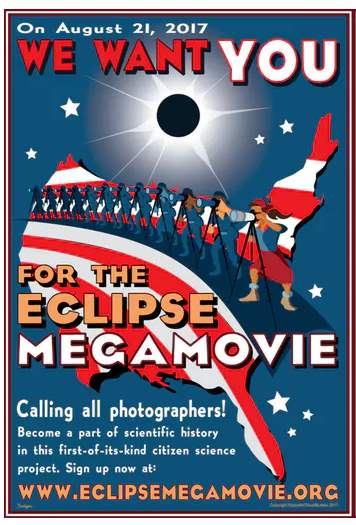

The Eclipse Megamovie Project is led by the Making & Science Team at Google and the Multiverse Team at UC Berkeley's Space Sciences Laboratory. Its aim is to produce a high-definition, time-expanded video pieced together from images collected by citizen scientists along the path of totality, far exceeding what any one person could capture from a single location.

According to Dr. Juan Carlos Martínez Oliveros of UC Berkeley Space Sciences Laboratory’s Science Team, the project is “really an experiment in using crowdsourcing to do solar science, which will hopefully pave the way for much future work.” Once participants upload their pictures to the Megamovie website, the content will be transferred to Google’s servers, where computers will algorithmically align and process the images to create a continuous view of the eclipse. This will be done using Solar Eclipse Image Standardisation and Sequencing (SEISS), an algorithm developed by Dr. Larisza Krista to stitch together images taken of the 2012 total solar eclipse in Queensland, Australia. As described in a 2015 paper, SEISS can “process multi-source total solar eclipse images by adjusting them to the same standard of size, resolution, and orientation.” It can also “determine the relative time between the observations and order them to create a movie of the observed total solar eclipse sequence.”

Google has made some changes to Dr. Krista’s algorithm for this project. Whereas SEISS included code for detecting when the eclipse was in its partial phases, the Megamovie focuses on totality, the short period in which the moon blocks out all of the sun’s light. Google will therefore use each photo’s timestamp and GPS location to identify the images taken during totality and discard the rest.

Google’s Joshua Cruz explains: “After identifying the solar disk, we scale, translate, and rotate the images so that they all share the same reference frame. Images taken without an equatorial mount are rotated by the parallactic angle. The entire eclipse path is broken into 3600 equal segments; for each segment, the ‘best’ photo is identified. Then, the movie is stitched (3600 total frames at 25 FPS), with any gap segments filled with cross-fades of the surrounding photos.”
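The alignment step Cruz describes can be sketched as follows, assuming the solar disk's center and radius in pixels have already been found (the detection itself is not shown). The parallactic angle uses the standard textbook formula from Meeus's *Astronomical Algorithms*, tan q = sin H / (tan φ cos δ − sin δ cos H), where H is the sun's hour angle, φ the observer's latitude and δ the sun's declination. The function names, the reference frame and the sign convention for applying the rotation are illustrative assumptions; this is not Google's or SEISS's code.

```python
import math
from typing import Tuple

# 2x3 affine matrix ((a, b, tx), (c, d, ty)) mapping source pixels to the reference frame.
Affine = Tuple[Tuple[float, float, float], Tuple[float, float, float]]


def parallactic_angle(hour_angle_deg: float, latitude_deg: float,
                      declination_deg: float) -> float:
    """Parallactic angle q in degrees; atan2 keeps the correct quadrant east or west of the meridian."""
    h = math.radians(hour_angle_deg)
    phi = math.radians(latitude_deg)
    dec = math.radians(declination_deg)
    q = math.atan2(math.sin(h),
                   math.tan(phi) * math.cos(dec) - math.sin(dec) * math.cos(h))
    return math.degrees(q)


def disk_alignment(center: Tuple[float, float], radius: float,
                   ref_center: Tuple[float, float], ref_radius: float,
                   rotation_deg: float) -> Affine:
    """Similarity transform: scale the disk to ref_radius, rotate by rotation_deg,
    and translate so the detected disk center lands on ref_center.
    Assumption: a positive angle rotates counterclockwise in these pixel coordinates."""
    s = ref_radius / radius
    t = math.radians(rotation_deg)
    a, b = s * math.cos(t), s * math.sin(t)
    cx, cy = center
    rx, ry = ref_center
    # Solve for the translation that sends (cx, cy) exactly to (rx, ry).
    tx = rx - (a * cx - b * cy)
    ty = ry - (b * cx + a * cy)
    return ((a, -b, tx), (b, a, ty))


def transform_point(m: Affine, x: float, y: float) -> Tuple[float, float]:
    """Apply the 2x3 matrix to a single pixel coordinate."""
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])
```

Because every image's disk is mapped to the same center and radius, the frames line up regardless of camera zoom or framing. Per Cruz's description, a photo taken with an equatorial mount would be aligned with `rotation_deg=0`. The matrix uses the source-to-destination 2x3 layout that image libraries such as OpenCV's `cv2.warpAffine` accept for resampling whole images.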

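The filtering and frame-selection steps can be sketched like this, assuming each photo already carries a UTC timestamp, the start (C2) and end (C3) of totality at its GPS position (computing those needs an eclipse ephemeris, not shown here), and a quality score. The article does not say how the “best” photo is judged, so `score` is a hypothetical stand-in, and the segments here are equal slices of time between a chosen `start` and `end`, which is one reading of “equal segments” of the eclipse path. With the default 3600 segments played at 25 FPS, the finished movie runs 3600 / 25 = 144 seconds.

```python
from bisect import bisect_left
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional, Tuple


@dataclass
class Photo:
    name: str
    taken: datetime     # camera timestamp, assumed already corrected to UTC
    local_c2: datetime  # start of totality at the photo's GPS position (assumed precomputed)
    local_c3: datetime  # end of totality at that position (assumed precomputed)
    score: float        # hypothetical quality score; higher is better


# (photo_a, photo_b, weight): weight 0.0 shows only photo_a, 1.0 only photo_b.
Frame = Tuple[str, str, float]


def in_totality(p: Photo) -> bool:
    """Discard photos taken outside totality at the observer's location."""
    return p.local_c2 <= p.taken <= p.local_c3


def build_frames(photos: List[Photo], start: datetime, end: datetime,
                 n_segments: int = 3600) -> List[Frame]:
    """Split [start, end) into equal segments, keep the best photo in each,
    and fill empty segments with cross-fades between the nearest filled ones."""
    span = (end - start).total_seconds()
    best: List[Optional[Photo]] = [None] * n_segments
    for p in filter(in_totality, photos):
        frac = (p.taken - start).total_seconds() / span
        if not 0.0 <= frac < 1.0:
            continue  # outside the overall window being animated
        i = int(frac * n_segments)
        current = best[i]
        if current is None or p.score > current.score:
            best[i] = p

    filled = [i for i, p in enumerate(best) if p is not None]
    if not filled:
        return []

    frames: List[Frame] = []
    for i in range(n_segments):
        p = best[i]
        if p is not None:
            frames.append((p.name, p.name, 0.0))
            continue
        k = bisect_left(filled, i)  # filled[k] is the first filled segment after i
        lo = best[filled[k - 1]] if k > 0 else None
        hi = best[filled[k]] if k < len(filled) else None
        if lo is not None and hi is not None:
            # Linear cross-fade weight by position within the gap.
            w = (i - filled[k - 1]) / (filled[k] - filled[k - 1])
            frames.append((lo.name, hi.name, w))
        else:
            # Gap at either end of the sequence: hold the only available neighbor.
            edge = lo if lo is not None else hi
            frames.append((edge.name, edge.name, 0.0))
    return frames
```

Weighting by distance means a gap frame next to a real photo is dominated by that photo, and the blend shifts linearly across the gap. An empty run at either end of the sequence has only one neighbor, so this sketch holds that photo rather than fading to black.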
To participate in the Megamovie, you’ll need an interchangeable-lens digital camera (such as a DSLR or mirrorless camera), not just a cellphone, and you’ll need to be within the path of totality. See the first version of the resulting movie at the top of this updated article.


Last Updated (Tuesday, 22 August 2017)