Computational Photography Shows Hi-Res Mars

Written by David Conrad

Sunday, 01 May 2016

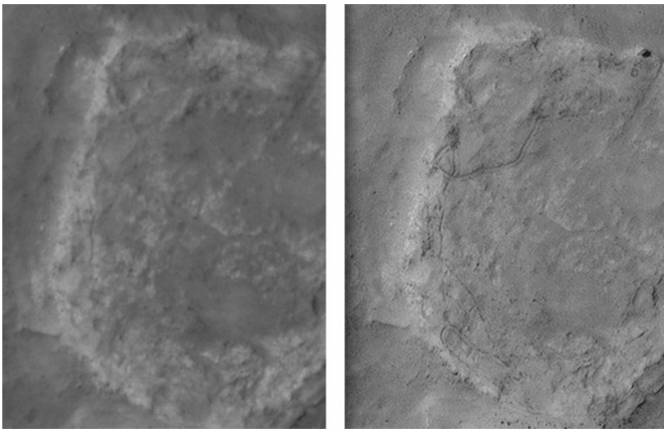

Computational photography is amazing, but sometimes you have to wonder whether it is actually useful and not just amusing. Proving that it is, researchers have found a way to extract high-resolution images from multiple low-resolution images of the Martian surface. The results are almost good enough to show the lost Beagle 2 lander clearly.

The idea isn't a new one: take a stack of overlapping pictures and process them to produce a single higher-resolution image. It is simple to say, but it takes a lot of work to perfect. In principle, we need to solve an inverse problem. Each of the low-resolution photos is derived from an ideal high-resolution photo by a set of transformations corresponding to changes in camera position, blurring, down-sampling and so on. All you have to do is apply the inverse operators to recover the high-resolution photo. In practice this is a difficult problem. You can see how it works by imagining an arrangement of the low-resolution images in which one photo fills in the details between the pixels of another.

This is what University College London researchers Yu Tao and Jan-Peter Muller have done. They applied the technique to photos from the HiRISE camera on board the Mars Reconnaissance Orbiter, 300km above the red planet's surface. These low-resolution images show objects as small as 25cm. By combining eight repeat passes over Gusev Crater, where the Spirit rover left tracks, the team increased the resolution to 5cm. Processing took on the order of 24 hours for a 2048x1024 tile. Because of the time it takes, a full HiRISE image hasn't been processed yet. This should become possible when the program is extended to make use of a GPU.

The results are amazing and give planetary geologists much more information. You can see the original 25cm-resolution photo on the left and the 5cm image constructed from it on the right. The wiggly line in the upper part of the image is the track the rover left in the Martian dust.

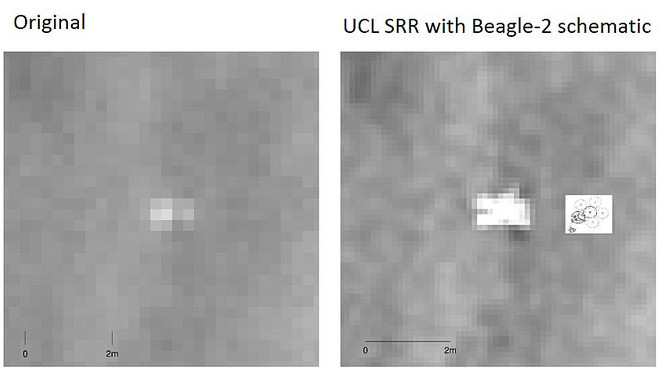
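The general super-resolution literature often writes the inverse problem described earlier as an observation model plus a regularised least-squares estimate. The formulation below is a generic textbook one, given for illustration. The symbols are introduced here, and it is not necessarily the exact model Tao and Muller used. Each low-resolution pass $y_k$ is modelled as the unknown high-resolution image $x$, first warped by the camera motion $M_k$ for that pass, then blurred by $B$, down-sampled by $D$ and corrupted by noise $n_k$:

$$
y_k = D\,B\,M_k\,x + n_k, \qquad k = 1, \dots, K
$$

Because $D$ throws information away, these operators cannot simply be inverted. Instead, the estimate is usually the image that best explains all $K$ passes at once. A regularising term $R(x)$ (for example total variation), weighted by $\lambda$, keeps noise from being amplified:

$$
\hat{x} = \arg\min_{x} \sum_{k=1}^{K} \left\lVert y_k - D\,B\,M_k\,x \right\rVert_2^2 + \lambda\,R(x)
$$

With the article's Gusev Crater figures, $K = 8$ passes. Going from 25cm to 5cm corresponds to a linear down-sampling factor of $25/5 = 5$ in $D$.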

Just in case there is any doubt about the accuracy of the enhancement, the team checked how accurately the rover tracks were reproduced. The maximum disagreement was 8cm, or 1.6 pixels, and the difference could be due to changes in the Martian surface over the 5-year period. Finally, the method was applied to the proposed crash site of the Beagle 2 lander. In case you have forgotten, Beagle 2 was a novel lander designed to test for life. It should have transmitted a signal on Christmas Day 2003 but was never heard from. A possible crash site was spotted twelve years later as a bright dot in a HiRISE image. The constructed higher-resolution version starts to show the characteristic shape of the spacecraft.

The technique could improve the pictures sent back by all planetary missions, and even images of Earth. As long as there are multiple overlapping low-resolution photos, you can increase the resolution. You can see more examples in the team's paper and in its Flickr gallery.
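The idea that overlapping low-resolution photos can be combined into a sharper one can be seen in a deliberately idealised, one-dimensional toy. This is not the UCL team's algorithm. It assumes no blur, no noise, and shifts that are known whole multiples of the high-resolution pixel. The names `observe` and `shift_and_add` are hypothetical. Each simulated pass samples the scene on a coarse grid offset by a different amount, and interleaving the passes fills in the fine grid.

```python
"""Toy 1-D shift-and-add super-resolution (illustration only).

Assumptions (not from the article): no blur, no noise, and each low-resolution
pass is offset by a known whole number of high-resolution pixels. Real HiRISE
processing must estimate sub-pixel shifts and undo blur as well.
"""


def observe(hr, factor, shift):
    """Simulate one low-resolution pass: offset the sampling grid by `shift`,
    then keep every `factor`-th sample (pure decimation, no blur)."""
    return [hr[i] for i in range(shift, len(hr), factor)]


def shift_and_add(passes, factor, length):
    """Interleave low-resolution passes back onto the high-resolution grid.

    `passes` maps each known shift to its low-resolution samples. Sample j of
    the pass with shift s came from high-resolution index s + j * factor.
    Grid points that no pass covers are left as None.
    """
    hr = [None] * length
    for shift, samples in passes.items():
        for j, value in enumerate(samples):
            hr[shift + j * factor] = value
    return hr


if __name__ == "__main__":
    scene = [3, 1, 4, 1, 5, 9, 2, 6, 5, 3]  # hypothetical 1-D "surface"
    factor = 5  # 5x linear gain, like 25cm -> 5cm

    # One pass per distinct offset covers every fine-grid point.
    all_passes = {s: observe(scene, factor, s) for s in range(factor)}
    print(shift_and_add(all_passes, factor, len(scene)))
    # [3, 1, 4, 1, 5, 9, 2, 6, 5, 3]

    # With only two offsets, the gaps between their samples stay unknown.
    some = {s: observe(scene, factor, s) for s in (0, 2)}
    print(shift_and_add(some, factor, len(scene)))
    # [3, None, 4, None, None, 9, None, 6, None, None]
```

Real orbital imagery breaks every one of these assumptions. The shifts are sub-pixel and have to be estimated by matching the images, and the camera blur has to be undone. That is why the practical problem is so much harder and, as noted above, took around 24 hours per tile.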

More Information

A novel method for surface exploration: Super-resolution restoration of Mars repeat-pass orbital imagery. Yu Tao and Jan-Peter Muller

Related Articles

- Removing Reflections And Obstructions From Photos
- Separating Reflection And Image
- Microsoft Hyperlapse Ready For Use
- SparkleVision - Seeing Through The Glitter
- Automatic High Dynamic Range (HDR) Photography
- See Invisible Motion, Hear Silent Sounds Cool? Creepy?
- Computational Camouflage Hides Things In Plain Sight
- Google Has Software To Make Cameras Worse
- Plenoptic Sensors For Computational Photography


Last Updated (Sunday, 01 May 2016)