| HEARBO - A Listening Robot |

| Written by Harry Fairhead | |||

| Sunday, 02 December 2012 | |||

|

Researchers at the Honda Research Institute in Japan have been working on robot audition - the ability of robots to detect sounds and understand them. Two videos show impressive progress. HEARBO (HEAR-ing roBOt) is being developed at the Honda Research Institute in Japan (HRI-JP), and papers describing its latest functionalities in the field called Computational Auditory Scene Analysis were presented at this year's International Conference on Intelligent Robots and Systems (IROS).

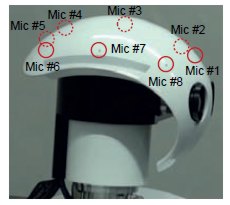

According to IEEE Spectrum, typical approaches to robot audition use a method called beamforming to "focus" on a sound, like a person speaking. The system then takes that sound, performs some noise reduction, and then tries to understand what the person is saying using automatic speech recognition. The HEARBO researchers takes the beamforming approach a step further using a 3-step paradigm of localization, separation, and recognition. This system, called HARK, enables original sounds to be recovered from a mixture of sounds based on where the sounds are coming from. Their reasoning is that "noise" shouldn't just be suppressed, but be separated out and then analyzed afterwards, since the definition of noise is highly dependent on the situation. For example, a crying baby may be considered noise, or it may convey very important information. At IROS 2012, Keisuke Nakamura of HRI-JP presented his new super-resolution sound source localization algorithm, which allows sounds to be detected to within 1-degree of accuracy. Using the methods developed by Kazuhiro Nakadai's team at HRI-JP, up to four different simultaneous sounds or voices can be detected and recognized in practice. Theoretically, with eight microphones, up to seven different sound sources can be separated and recognized at once, something that humans with two ears cannot do.

As demonstrated in this video, Nakamura has also taught HEARBO about the concepts of music, human voice, and environmental sounds. Upon hearing a song it’s never heard before, it can say, “I hear music!” This means that HEARBO can tell the difference between a human giving it commands, and a singer on the radio. In this video, different sound sources are positioned around HEARBO and we witness the robot recognizing the sounds, determining the location they're coming from and focusing its attention on each source one by one:

Another focus of the Honda researchers is ego-noise suppression - filtering out their internal self-generated noise their motors make when they move - which is akin to how the human hearing system filters out the heartbeat sound. To do this microphones are embedded in HEARBO's body to subtract internal noise from sounds coming through its head mics. In this second video HEARBO not only shows off his dance moves but also its ability to handle a plethora of sounds:

More InformationHEARBO Robot Has Superhearing (IEEE Spectrum) Related ArticlesKorean Household Robot Prepares Salad Flying Neural Net Avoids Obstacles

To be informed about new articles on I Programmer, install the I Programmer Toolbar, subscribe to the RSS feed, follow us on, Twitter, Facebook, Google+ or Linkedin, or sign up for our weekly newsletter.

Comments

or email your comment to: comments@i-programmer.info

|

|||

| Last Updated ( Sunday, 02 December 2012 ) |