Turing-ISR: AI-Powered Image Enhancements

Written by Sue Gee

Monday, 23 May 2022

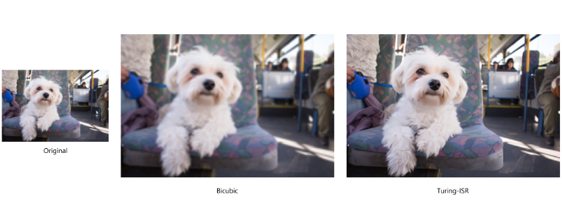

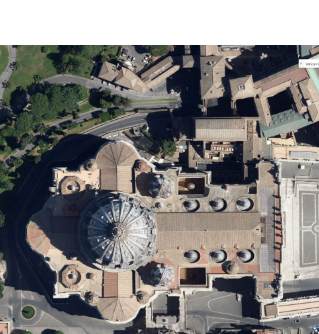

Using the power of Deep Learning, the Microsoft Turing team has built a model to improve the quality of images and is incorporating its new technology, dubbed Turing Image Super-Resolution, in Bing Maps and the Edge browser.

We have already reported on Microsoft's Project Turing applying AI and massive compute power to Natural Language Generation (see The Third Age Of AI - Megatron-Turing NLG). Now the Turing team is tackling the problem of low-quality, grainy images with its new model, Turing Image Super-Resolution (T-ISR). According to Microsoft:

"The ultimate mission for the Turing Super-Resolution effort is to turn any application where people view, consume or create media into an 'HD' experience."

The new technology is already available in the Aerial View of Bing Maps and is currently shipping in the Canary channel of the Edge browser. It will continue to be rolled out, and Microsoft must be hoping that enhanced imagery will be an attractive feature that gives its browser an edge over Chrome.

In the blog post outlining the details of the T-ISR model, the Turing team explains how it adopted a two-stage process:

"When we began working on super-resolution, we saw that most approaches were still using CNN architectures. Given the Microsoft Turing team’s expertise and success applying transformers in large language models ... we experimented with transformers and found it had some compelling advantages (along with some disadvantages). Ultimately, we incorporated the positive aspects of both architectures by breaking the problem space into two stages: 1) Enhance and 2) Zoom."

The first stage is called DeepEnhance. When testing with very noisy images, such as highly compressed photos or aerial photos taken from long-range satellites, the team found that transformer models did a very good job of "cleaning up" the noise in a way tailored to what was in the image. For example, noise around a person's face was handled differently from the noise in a highly textured photo of a forest.
DeepEnhance uses a sparse transformer scaled up to support extremely large sequence lengths so that it can adequately process the context of an image. The result is a cleaner, crisper and much more attractive output image whose size is the same as the input.

The second stage, DeepZoom, scales images up using a novel approach. The widely used bicubic interpolation, in which pixels are inferred from the surrounding pixels, usually results in a grainy image that is missing a large amount of detail, and the effect gets worse as the scaling factor increases. To avoid this, the team trained a 200-layer CNN to learn the best way to recover "lost" pixel detail. The result is a model that knows the best way to recover pixels for the specific types and scenes of an image.

Using Bing Maps to look at landscapes and landmarks like St. Peter's in the Vatican City does reveal a real difference in image quality thanks to T-ISR. But you need to see it for yourself.
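For readers who want a concrete feel for the bicubic baseline that DeepZoom is meant to improve on, here is a minimal pure-Python sketch. It is not Microsoft's code and has nothing to do with the T-ISR model itself. The function names `cubic_kernel` and `bicubic_upscale`, the choice of the common cubic convolution kernel with `a = -0.5`, and the edge-clamping behaviour are all assumptions made for illustration. Each output pixel is computed as a weighted sum of the 4 x 4 block of source pixels around it, which is why no detail can appear that was not already in the neighbourhood.

```python
import math


def cubic_kernel(x, a=-0.5):
    """Cubic convolution weight for a sample at distance x (a = -0.5 is a common choice)."""
    x = abs(x)
    if x <= 1:
        return (a + 2) * x**3 - (a + 3) * x**2 + 1
    if x < 2:
        return a * x**3 - 5 * a * x**2 + 8 * a * x - 4 * a
    return 0.0


def bicubic_upscale(image, factor):
    """Upscale a grayscale image (list of rows of floats) by an integer factor."""
    h, w = len(image), len(image[0])

    def pixel(r, c):
        # Clamp coordinates so the 4 x 4 window can overhang the border.
        return image[min(max(r, 0), h - 1)][min(max(c, 0), w - 1)]

    out = []
    for i in range(h * factor):
        # Map the centre of the output pixel back into source coordinates.
        y = (i + 0.5) / factor - 0.5
        y0 = math.floor(y)
        row = []
        for j in range(w * factor):
            x = (j + 0.5) / factor - 0.5
            x0 = math.floor(x)
            value = 0.0
            # Weighted sum over the 4 x 4 neighbourhood of source pixels.
            for m in range(-1, 3):
                wy = cubic_kernel(y - (y0 + m))
                for n in range(-1, 3):
                    wx = cubic_kernel(x - (x0 + n))
                    value += wy * wx * pixel(y0 + m, x0 + n)
            row.append(value)
        out.append(row)
    return out


if __name__ == "__main__":
    small = [[0.0, 10.0], [20.0, 30.0]]
    big = bicubic_upscale(small, 2)
    print(len(big), len(big[0]))  # 4 4
```

Because every new pixel is only a blend of existing neighbours, the larger the scaling factor, the more the result is smoothed-over guesswork, which is the limitation the article describes. A learned model such as DeepZoom instead tries to predict plausible detail from what it has seen in training.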

More Information

Introducing Turing Image Super Resolution

Related Articles

The Third Age Of AI - Megatron-Turing NLG


Last Updated (Monday, 23 May 2022)