Tracking.js Computer Vision In The Browser

Written by Mike James

Wednesday, 30 July 2014

The only real problem with tracking.js is that potential users might ignore it, thinking it has something to do with marketing. It doesn't. It is an open source computer vision library written in JavaScript, and it makes it fairly easy to add sophisticated image processing to your web apps.

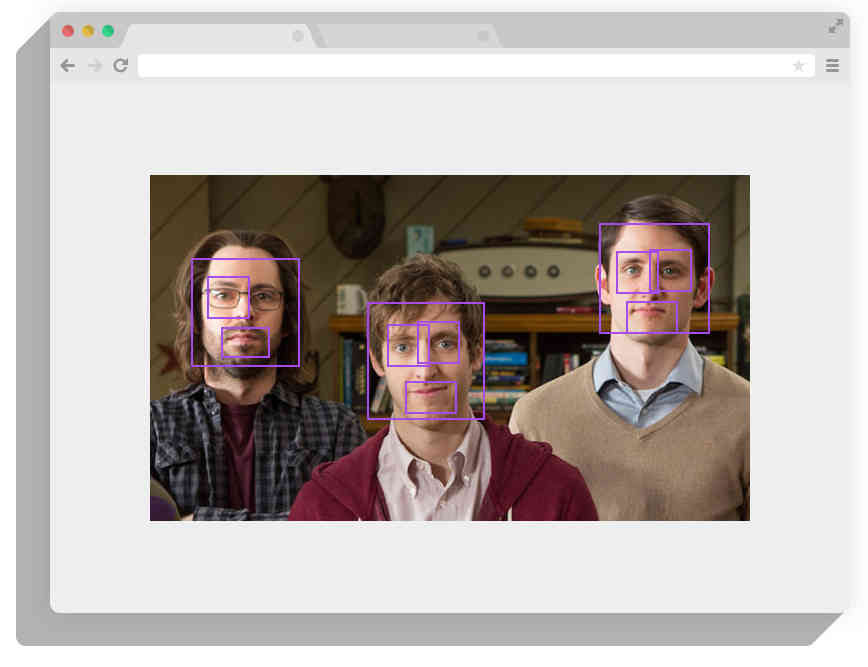

The library is called tracking.js because it majors on visual tracking algorithms. It has just reached Version 1 and it seems to be a project with a lot of enthusiastic participants. The range of algorithms that it offers is impressive.

It is also easy to use. Simply load the core library and any additional algorithm libraries you need. Next you need to set up a video source, and this can be as simple as an HTML5 video tag, canvas tag or img tag, or a full getUserMedia call if you want to use a built-in video camera. Then you hook up the algorithm to the video source and set a callback for any detection event. The ease of hooking up the video source is one reason to use the library, but the simplicity of running a textbook algorithm just by creating an object and calling a method is very difficult to beat.

The entire library is also available as a set of web components built using Polymer on top of the basic tracking.js.

The trackers available range from the color tracker, which is very simple in principle and tracks a specified color, to an object tracker which will track faces, eyes and mouths. For example, the object tracker can be set up to track all three at once.
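A minimal sketch of what tracking all three might look like. It assumes that the core script and the face, eye and mouth training-data scripts are already loaded, that the library exposes a global `tracking` object with an `ObjectTracker` constructor and a `track` function, and that the page contains a video element matching the selector. The helper name `trackFaceEyesMouth` and the `#myVideo` selector are hypothetical, and the `tracking` object is passed in as a parameter so that the wiring is explicit.

```javascript
// Hypothetical helper: wires an object tracker for faces, eyes and mouths
// to a video element. `tracking` is assumed to be the global object that
// tracking.js defines once its scripts are loaded.
function trackFaceEyesMouth(tracking, videoSelector, onDetections) {
  // One tracker is asked to look for several kinds of object at once.
  const tracker = new tracking.ObjectTracker(['face', 'eye', 'mouth']);

  // The 'track' event carries the detections; event.data is assumed to be
  // an array of rectangles of the form {x, y, width, height}.
  tracker.on('track', function (event) {
    onDetections(event.data);
  });

  // Hook the tracker up to the video source.
  tracking.track(videoSelector, tracker);
  return tracker;
}

// Browser usage, guarded so the snippet does nothing where tracking.js
// has not been loaded.
if (typeof tracking !== 'undefined') {
  trackFaceEyesMouth(tracking, '#myVideo', function (rects) {
    rects.forEach(function (r) {
      console.log('object at', r.x, r.y, r.width, r.height);
    });
  });
}
```

The callback receives whatever rectangles the tracker reports, so drawing boxes on a canvas overlay is a matter of looping over them.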

You can extend the object tracker by adding your own training data. You can also easily extend the library with your own tracker. This is made easy in that your custom code is passed the pixel array from the video source to work with.

In addition to the trackers, there is also a set of utilities that can be used in creative ways. There are two feature detectors: one called FAST, which finds corners, and one called BRIEF, which uses binary features. There is also the Viola-Jones object detection framework, which is used in the object tracker. You can use it to track an arbitrary object in an image simply by providing it with the pixel data for that object. You also have access to a general convolution filter, image blur, Sobel edge detection, grayscale conversion and integral image operations.

There are lots of examples of the library in use and many use the live feed from any built-in video camera your computer might have. Notice, however, that the library doesn't do face recognition, only detection.

The bottom line is that this is one of the easiest computer vision packages to use and you can start doing clever and impressive things with it, even if you don't know much about the algorithms involved. What is more, you don't have to write much code to get things started. If you do know something about the algorithms then you can implement new and custom trackers very easily. If you have any interest in computer vision in web apps, this is one library worth checking out.
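To make the custom tracker idea mentioned above more concrete, here is a minimal sketch. The `brightRegion` function is a hypothetical, plain JavaScript example of custom code working on a flat RGBA pixel array; it is not part of the library. The wiring below it assumes that tracking.js provides `tracking.Tracker`, `tracking.inherits` and an `emit` method on trackers, and that a tracker's `track` method receives `(pixels, width, height)`; check these against the library's documentation before relying on them.

```javascript
// Hypothetical custom logic: bounding box of all pixels whose average
// brightness exceeds `threshold`. `pixels` is a flat RGBA array with
// four bytes per pixel, the layout used by canvas image data.
function brightRegion(pixels, width, height, threshold) {
  let minX = width, minY = height, maxX = -1, maxY = -1;
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const i = (y * width + x) * 4;
      const brightness = (pixels[i] + pixels[i + 1] + pixels[i + 2]) / 3;
      if (brightness > threshold) {
        if (x < minX) minX = x;
        if (y < minY) minY = y;
        if (x > maxX) maxX = x;
        if (y > maxY) maxY = y;
      }
    }
  }
  if (maxX < 0) return null; // nothing bright enough was found
  return { x: minX, y: minY, width: maxX - minX + 1, height: maxY - minY + 1 };
}

// Assumed wiring into tracking.js, guarded so the snippet does nothing
// where the library has not been loaded.
if (typeof tracking !== 'undefined') {
  var BrightTracker = function () {
    BrightTracker.base(this, 'constructor');
  };
  tracking.inherits(BrightTracker, tracking.Tracker);

  // Called with the pixel array of each frame from the video source.
  BrightTracker.prototype.track = function (pixels, width, height) {
    var rect = brightRegion(pixels, width, height, 200);
    this.emit('track', { data: rect ? [rect] : [] });
  };
}
```

Keeping the pixel logic in a plain function means it can be tested without a camera or a browser, and the tracker wrapper stays a few lines long.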



Last Updated ( Wednesday, 30 July 2014 )