IBM's TrueNorth Simulates 530 Billion Neurons

Written by Mike James

Friday, 23 November 2012

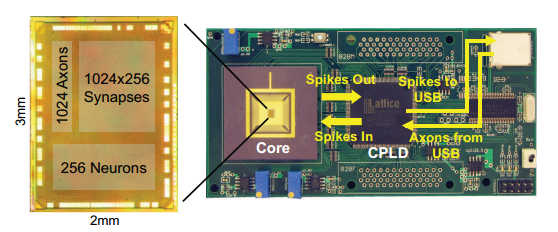

IBM Research has broken new ground with a simulation of 530 billion neurons in a network modeled on the connectivity of a monkey brain - almost enough for a complete working brain. The paper detailing the system involved, "TrueNorth", is simply titled 10¹⁴, which is the number of synapses simulated.

The simulation of TrueNorth was run on the IBM Blue Gene/Q supercomputer at Lawrence Livermore Labs. The figures make impressive reading. The system simulated 2 billion neurosynaptic cores, which contained the 530 billion neurons and the 10¹⁴ synapses. The whole thing ran only 1500 times slower than the real hardware would have. By comparison, the human brain is estimated to have between 0.6 and 2.4 x 10¹⁴ synapses.

The research is part of IBM's effort towards DARPA's Systems of Neuromorphic Adaptive Plastic Scalable Electronics (SyNAPSE) project. As the research paper says: "We have not built a biologically realistic simulation of the complete human brain. Rather, we have simulated a novel modular, scalable, non-Von Neumann, ultra-low power, cognitive computing architecture."

The neurosynaptic cores are chips, each containing 256 neurons with a connection network of 1024 axons, making a total of 1024x256 synapses per core. The neuronal model is a leaky integrate-and-fire neuron, which is a commonly used model in neural network simulation. Unlike real neurons, which emit a series of pulses when they fire, these artificial neurons simply fire when the activity on their inputs, summed over time, exceeds a threshold. This is the "integrate-and-fire" part of the description. The "leaky" part simply means that the effect of an input dies away over time, so that the neuron doesn't have an unlimited memory of past activity.

It is generally agreed that this is a reasonable baseline model of the behavior of a biological neuron, but notice that it doesn't contain any element of learning in terms of modifying the strengths of the connections, so it only models the behavior of a fully trained brain.
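The leaky integrate-and-fire behavior described above can be illustrated with a minimal Python sketch. This is not IBM's neuron model: the class name `LIFNeuron`, the integer potential, the constant per-tick leak, the reset to zero after firing and the example parameter values are all assumptions chosen to keep the example small.

```python
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Toy leaky integrate-and-fire neuron (illustrative, not IBM's model)."""
    threshold: int = 10  # assumed firing threshold
    leak: int = 1        # assumed amount the potential decays each tick
    potential: int = 0   # accumulated input so far

    def step(self, weighted_input: int) -> bool:
        """Advance one time step; return True if the neuron fires."""
        # Integrate: add this tick's summed input to the stored potential.
        self.potential += weighted_input
        # Leak: past input fades away, but the potential never goes below zero.
        self.potential = max(0, self.potential - self.leak)
        # Fire: emit a spike and reset once the threshold is reached.
        if self.potential >= self.threshold:
            self.potential = 0
            return True
        return False


def run(neuron: LIFNeuron, inputs: list[int]) -> list[bool]:
    """Feed a sequence of inputs to the neuron and record its spikes."""
    return [neuron.step(x) for x in inputs]


if __name__ == "__main__":
    # Closely spaced inputs accumulate and cross the threshold.
    print(run(LIFNeuron(), [5, 5, 5, 0, 0]))
    # The same input spread out leaks away before it can add up.
    print(run(LIFNeuron(), [5, 0, 0, 0, 0, 5]))
```

Note that nothing in `step` changes the strength of a connection, which mirrors the point made above: a neuron of this kind can only replay behavior that was fixed in advance.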

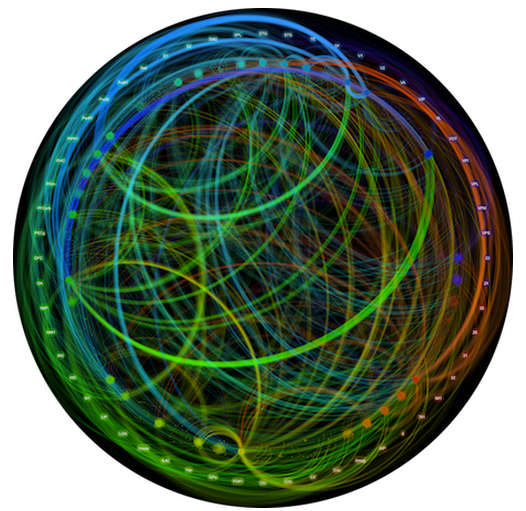

The overall architecture of the simulation was based on a model of the brain of a monkey, with the 2 billion neurosynaptic cores divided into 77 brain-inspired regions, each with its own probabilistic white matter or grey matter connectivity. Again, this is not an unreasonable way to organize things, but it is a fairly crude approximation to the structure of a real brain. The truth of the matter is that we really have no idea how accurately we have to reproduce brain structure to reproduce the behavior. Perhaps random connections with the same statistics as the real brain are good enough, but as the neurons in the simulation have no learning capacity, the model is even more limited than it seems at first.

Neuro-synaptic cores are locally clustered into brain-inspired regions, and each core is represented as an individual point along the ring. Arcs are drawn from a source core to a destination core with an edge color defined by the color assigned to the source core.
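To make the idea of probabilistic region-to-region connectivity concrete, here is a small Python sketch. It is only an assumption about how such wiring could be sampled, not the procedure used in the paper: the function name `build_connections`, the one-outgoing-link-per-core simplification and the probability table are hypothetical. Diagonal entries of the table stand in for links within a region and off-diagonal entries for links between regions.

```python
import random


def build_connections(region_sizes, region_probs, seed=0):
    """Sample one (source core, destination core) edge per core.

    region_sizes[i]    -- number of cores in region i
    region_probs[i][j] -- relative chance that a core in region i
                          targets region j (hypothetical values)
    """
    rng = random.Random(seed)

    # Give each region a contiguous block of core ids.
    starts = []
    total = 0
    for size in region_sizes:
        starts.append(total)
        total += size

    region_ids = range(len(region_sizes))
    edges = []
    for src_region, size in enumerate(region_sizes):
        for offset in range(size):
            src = starts[src_region] + offset
            # Pick the destination region according to the probability row.
            dst_region = rng.choices(region_ids, weights=region_probs[src_region])[0]
            # Pick a core uniformly at random inside that region.
            dst = starts[dst_region] + rng.randrange(region_sizes[dst_region])
            edges.append((src, dst))
    return edges


if __name__ == "__main__":
    # Two toy regions: region 0 mostly links to itself, region 1 mostly to region 0.
    sizes = [4, 3]
    probs = [[0.8, 0.2],
             [0.7, 0.3]]
    for src, dst in build_connections(sizes, probs):
        print(src, "->", dst)
```

Each sampled pair corresponds to one arc in a ring diagram like the one above, drawn from the source core to the destination core.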

As a model of the brain this is fairly crude, even if it is an amazing achievement, but it might well be all that is needed. No details of what the simulation achieved are provided, apart from the simple fact that it worked and proved that a simulation on this scale is possible. If you are expecting results such as thought patterns, alpha waves or evidence that it learned something, then you are probably going to be disappointed. The network existed as a functioning entity for a very short time and, with a time scaling factor of around 1500, for an even shorter "thinking" time of about 0.5s.

It is worth noting that the neuromorphic core has been used in a number of experimental applications, including learning to play the game of Pong, but in each case the learning was done offline and the resulting architecture was transferred to the network for implementation. In other words, as implemented, the network has no ability to learn. We still have some way to go before we reach the goal of a brain in a box. You can see some of the basic ideas in the following IBM Research promo video:

Don't get too taken in by the glossy presentation and the appearance of the "artificial brain" and flashing lights - this is important work but still a long way from the goal of strong AI - to create a machine intelligence of the same nature as the human brain.



Last Updated (Friday, 23 November 2012)