Near Instant Speech Translation In Your Own Voice

Written by Mike James

Friday, 09 November 2012

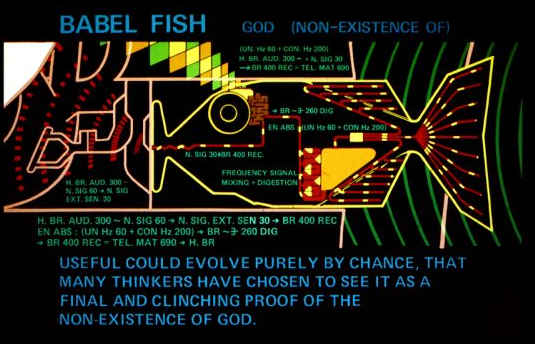

It looks as if the tide is turning for neural-network-based AI, which is about to move from the lab into the real world. Microsoft Research has demonstrated real-time translation of speech into another language - complete with the intonations of the original speaker. If you are a Hitchhiker's Guide fan then this is a Babel Fish; if Star Trek is more to your liking then it is the Universal Translator.

A few months ago Microsoft Research demonstrated a system that could translate from one language to another and mimic the speaker's voice patterns. It was as if you could really speak the new language. The only drawback was that the input had to be typed in. Now it has demonstrated a system that takes the spoken word and turns it into your voice in another language.

The demonstration was given in Tianjin, China, at Microsoft Research Asia’s 21st Century Computing event. For some reason it wasn't officially recorded, but an enterprising member of the audience managed to capture it and the video recently turned up on YouTube. Now Microsoft Research has got in on the act and published a blog entry that includes it. This raises the question of why they didn't make more of it in the first place.

The technique makes use of the most successful approach to speech recognition - Hidden Markov Models - coupled with the new approach offered by deep neural networks. Adding the neural networks cut the recognition error rate by about 30%, to roughly one error in every eight words.
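To put the "30%" and "one error in eight words" figures in context, here is a minimal sketch of how a word error rate is commonly computed: the word-level edit distance between what was said and what was recognized, divided by the number of words said. This is not Microsoft's evaluation code. The function names and the sample sentences are made up for illustration, and the baseline calculation assumes the 30% figure is a relative reduction in error rate.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference words seen
    # so far (before the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # reference word dropped
                            curr[j - 1] + 1,      # extra word inserted
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1] / len(ref)


def baseline_error_rate(new_rate: float, relative_reduction: float) -> float:
    """Error rate before an improvement, ASSUMING a relative reduction."""
    return new_rate / (1.0 - relative_reduction)


# Hypothetical example: one wrong word in an eight-word sentence.
spoken = "we hope to break down all language barriers"
recognized = "we hope to brake down all language barriers"
rate = word_error_rate(spoken, recognized)
before = baseline_error_rate(rate, 0.30)
print(f"error rate: {rate:.3f}, implied earlier rate: {before:.3f}")
```

With one substitution in eight words the rate is 0.125. Under the relative-reduction assumption, the earlier rate works out to roughly 0.18, or about one error in every five to six words.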

The translation part of the system is the same as that used by Bing Translator, i.e. a statistical approach. The demonstration shows the presenter, Rick Rashid, Microsoft’s Chief Research Officer, having his speech translated first into Chinese text. In the next stage the output is delivered as spoken Chinese in his own voice. The audience seems to approve, but as I am not a Chinese speaker I cannot check the translation, and it is difficult to know whether the qualities of the speaker's voice have been preserved.

As the blog says:

"Though it was a limited test, the effect was dramatic, and the audience came alive in response. When I spoke in English, the system automatically combined all the underlying technologies to deliver a robust speech to speech experience - my voice speaking Chinese. You can see the demo in the video above. The results are still not perfect, and there is still much work to be done, but the technology is very promising, and we hope that in a few years we will have systems that can completely break down language barriers."

There are lots of unanswered questions about the system, in particular how much computing power is needed to do the job. The neural networks are probably cheap to compute, but the entire system is still likely to be complex. We might still be some way off being able to put the electronic equivalent of a fish in your ear (the Babel Fish) or a Universal Translator in your phone, but we now have proof that it is possible.

Original animation artwork by Rod Lord

