Kinect knows what you are doing...

Written by Mike James

Monday, 04 July 2011


You can train a Kinect to recognize what you are doing and perhaps even to recognize who is doing it!

Just when you thought that the Kinect RGBD (Red, Green, Blue, Depth) camera had gone stale and that there was nothing left to do with it, researchers from Cornell prove you wrong. They have taken a standard Kinect with the open source drivers and the PrimeSense Nite software and created a program that can tell what you are doing. A Kinect mounted on a roaming robot, or perhaps one in every room, can monitor what you are doing: cleaning your teeth, cooking, writing on a whiteboard (the designers are academics after all) and so on.

Why would you want to do this? Imagine the intelligent house of the future. A house that knew what you were doing would clearly have an advantage: "Would you like some help with that recipe, Dave?" At a less ambitious level you could use a Kinect to monitor patients, say, and make sure that they were drinking or eating. A more worrying application might be to make sure that workers were doing just that: working, and doing the correct task.

The researchers have also demonstrated that it isn't only Microsoft Research who can put AI into Kinect. They used a hierarchical maximum entropy Markov model, which is based on identifying sub-activities such as "pickup", "drink", "place" and so on.
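The article only names the model, so here is a rough illustration of the idea rather than the researchers' code. In a maximum entropy Markov model (MEMM), the probability of the next label comes from a separate log-linear (maximum entropy) classifier for each previous label, conditioned on the current observation, and the most likely label sequence is found with the Viterbi algorithm. This minimal Python sketch is a flat, single-layer MEMM over the sub-activities named above; it assumes each frame has already been reduced to a small feature vector. The features, the hand-set weights and the helper names (`local_log_probs`, `viterbi_decode`, `toy_weights`) are all hypothetical, and the hierarchical layer that groups sub-activities into whole activities is left out.

```python
import math

# Sub-activity labels taken from the article's examples.
SUB_ACTIVITIES = ["pickup", "drink", "place"]


def log_softmax(scores):
    """Numerically stable log of the softmax (maximum entropy) distribution."""
    m = max(scores)
    log_z = m + math.log(sum(math.exp(s - m) for s in scores))
    return [s - log_z for s in scores]


def local_log_probs(weights, prev, features):
    """log P(y | prev, x): one log-linear classifier per previous state,
    as in a maximum entropy Markov model."""
    scores = [
        sum(w * x for w, x in zip(weights[prev][y], features))
        for y in range(len(SUB_ACTIVITIES))
    ]
    return log_softmax(scores)


def viterbi_decode(frames, weights):
    """Most likely sub-activity sequence for a list of per-frame feature vectors."""
    n = len(SUB_ACTIVITIES)
    start = n  # extra "start" state, used only to score the first frame
    delta = local_log_probs(weights, start, frames[0])
    backpointers = []
    for x in frames[1:]:
        # log P(y | prev, x) for every previous state
        local = [local_log_probs(weights, prev, x) for prev in range(n)]
        new_delta, pointers = [], []
        for y in range(n):
            best_prev = max(range(n), key=lambda p: delta[p] + local[p][y])
            new_delta.append(delta[best_prev] + local[best_prev][y])
            pointers.append(best_prev)
        delta = new_delta
        backpointers.append(pointers)
    # Trace the best path backwards from the highest-scoring final state.
    best = max(range(n), key=lambda y: delta[y])
    path = [best]
    for pointers in reversed(backpointers):
        best = pointers[best]
        path.append(best)
    path.reverse()
    return [SUB_ACTIVITIES[i] for i in path]


def toy_weights(num_features):
    """Hypothetical hand-set weights: feature y supports label y, and the last
    (bias) feature rewards staying in the same sub-activity."""
    n = len(SUB_ACTIVITIES)
    w = [[[0.0] * num_features for _ in range(n)] for _ in range(n + 1)]
    for prev in range(n + 1):
        for y in range(n):
            w[prev][y][y] = 3.0
            if prev == y:
                w[prev][y][-1] = 1.0
    return w


# Hypothetical per-frame features: [pickup cue, drink cue, place cue, bias]
frames = [
    [1.0, 0.0, 0.0, 1.0],
    [0.9, 0.1, 0.0, 1.0],
    [0.0, 1.0, 0.0, 1.0],
    [0.0, 0.8, 0.2, 1.0],
    [0.0, 0.0, 1.0, 1.0],
]
print(viterbi_decode(frames, toy_weights(4)))
# prints ['pickup', 'pickup', 'drink', 'drink', 'place']
```

A trained model would learn its weights from labelled skeleton and image features rather than having them set by hand; the point of the sketch is only that each label depends on both the previous label and the current frame.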

The training set was small compared to the sort of thing that Microsoft Research uses, and hence the results aren't as convincing. Only four different people took part, and they were simply instructed to perform each activity in full view of the Kinect. This is a bit artificial, in that you don't usually clean your teeth standing in front of a Kinect.

The system worked well for subjects it had seen before, reaching an 84.31% rate of correct activity recognition. What is equally interesting is that the classification rate dropped to 64.17% for people it hadn't seen before. This suggests that the way people perform these standard tasks might carry enough information to recognize the individuals: it might well be that how you clean your teeth is sufficient to identify you. Turning either possible application into a reality would require a much bigger training set and further development, but it looks promising.

Most of the Kinect's creative uses to this point have simply made use of its depth input to guide robots, or of its gesture recognition to control quadrotors and the like. In this case the unique view of the world that a depth camera provides has been used within machine learning to produce something completely new.
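The gap between 84.31% and 64.17% comes from evaluating on people the system has seen versus people it hasn't. The article doesn't say exactly how the data was split, so this Python sketch assumes a leave-one-person-out protocol, a common way to test on unseen people: train on everyone except one person, test on that person, and repeat for each person. The nearest-centroid classifier, the `Sample` record, the helper names and the toy data are hypothetical stand-ins, not the researchers' model or data.

```python
from collections import defaultdict, namedtuple

# Hypothetical record: who performed the activity, a feature vector, and the label.
Sample = namedtuple("Sample", ["person", "features", "label"])


def train_nearest_centroid(train):
    """Stand-in classifier (not the paper's model): predict the label whose
    mean feature vector is closest in squared Euclidean distance."""
    sums, counts = {}, defaultdict(int)
    for s in train:
        acc = sums.setdefault(s.label, [0.0] * len(s.features))
        for i, v in enumerate(s.features):
            acc[i] += v
        counts[s.label] += 1
    centroids = {lbl: [v / counts[lbl] for v in acc] for lbl, acc in sums.items()}

    def predict(x):
        return min(
            centroids,
            key=lambda lbl: sum((c - v) ** 2 for c, v in zip(centroids[lbl], x)),
        )

    return predict


def leave_one_person_out(samples, train_fn):
    """Hold out each person in turn, train on everyone else, and return
    the classification rate on each held-out person."""
    rates = {}
    for held_out in sorted({s.person for s in samples}):
        train = [s for s in samples if s.person != held_out]
        test = [s for s in samples if s.person == held_out]
        model = train_fn(train)
        correct = sum(model(s.features) == s.label for s in test)
        rates[held_out] = correct / len(test)
    return rates


# Toy 1-D data: person C performs both activities with a shifted "style".
samples = [
    Sample("A", [0.0], "brushing teeth"), Sample("A", [1.0], "cooking"),
    Sample("B", [0.1], "brushing teeth"), Sample("B", [0.9], "cooking"),
    Sample("C", [0.6], "brushing teeth"), Sample("C", [1.6], "cooking"),
]
print(leave_one_person_out(samples, train_nearest_centroid))
# prints {'A': 1.0, 'B': 1.0, 'C': 0.5}
```

Because person C does both activities in a noticeably different way, their held-out rate drops. This is the same kind of effect that hints that a person's individual style carries identifying information.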

More information: Human Activity Detection from RGBD Images (Pdf)

Related articles: Getting started with Microsoft Kinect SDK


Last Updated (Monday, 04 July 2011)

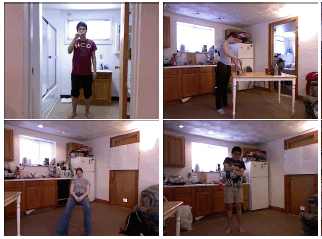

Four samples from the training set: brushing teeth, cooking, relaxing on a chair, opening a pill container.
