Anthropic Launches Prompt Caching With Claude

Written by Sue Gee

Monday, 19 August 2024

Anthropic has added Prompt Caching, a feature that it claims makes it more practical and cost-effective to use Claude's capabilities for complex, long-term projects that require consistent access to large amounts of contextual information.

The quality of the output users obtain from any LLM (Large Language Model) is highly dependent on the prompt it is given, i.e. the initial input provided by the user. Prompt caching, which is now available in public beta on the Anthropic API for Claude 3.5 Sonnet and Claude 3 Haiku, is intended to improve model responses by making it practical to send longer, more detailed, and more instructive prompts, which are stored for reuse across requests. As Anthropic explains, "This makes it feasible to include comprehensive information, such as detailed instructions, example responses, and relevant background data, thereby improving the quality and consistency of Claude's responses across multiple interactions."

Anthropic suggests that, as well as enhancing Claude's performance across a wide range of applications, prompt caching can improve response times by up to a factor of 2 and reduce costs by up to 90%. All of which raises questions about the types of applications Claude is suited to and about the cost and performance of its three models, so some background is called for.

Claude is the AI assistant from Anthropic, an artificial intelligence research company founded in 2021. Claude 1.0 was released publicly in March 2023, having been developed using Anthropic's "constitutional AI methods", which aim to create AI systems with built-in safeguards and values. Claude 2.0 followed in July 2023 and the Claude 3 family was announced in March 2024. So what is Claude claimed to be good at?

According to the information Claude itself provided when I asked for some background material, "Claude excels at tasks requiring nuanced understanding and complex reasoning. It's particularly strong in areas like analysis, writing, and coding." By way of personality, it told me that it tends to be more direct and focused in its responses than some other AI assistants, and it admitted that "unlike Copilot, which is tailored for coding tasks, Claude is a more general-purpose assistant."

Anthropic offers three tiers for using Claude.ai:

Free

Pro - $20 per person/month

Team - $25 per person/month

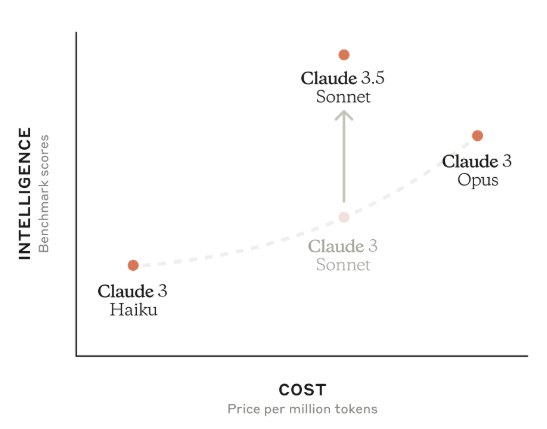

There are three Claude models available through the Anthropic API, which differ in performance and in cost, expressed as price per million tokens (MTok).

Claude 3 Haiku is the fastest model, intended for lightweight actions. It costs $0.25/MTok for input and $1.25/MTok for output. A prompt caching read costs $0.03/MTok, while a prompt caching write costs $0.30/MTok.

Claude 3 Opus is the highest-performing of the three, intended for complex analysis, longer tasks with many steps, and higher-order math and coding tasks. It costs $15/MTok for input and $75/MTok for output. When prompt caching becomes available for Opus, a cache read will cost $1.50/MTok and a cache write will cost $18.75/MTok.

Claude 3.5 Sonnet, the recently released update to Sonnet, is Anthropic's most intelligent model to date. It costs $3/MTok for input and $15/MTok for output. A prompt caching read costs $0.30/MTok, while a prompt caching write costs $3.75/MTok.

For Sonnet and Opus, then, writing to the cache costs 25% more than the base input price, while reading from it costs just 10% of that price, which is consistent with the claimed cost saving of up to 90%.

According to Anthropic, prompt caching is most effective when sending a large amount of prompt context once and referring to that information repeatedly in subsequent requests. Common business applications include:

● Conversational agents: Reduce cost and latency for extended conversations, especially those with long instructions or uploaded documents.

● Large document processing: Incorporate complete long-form material in your prompt without increasing response latency.

● Detailed instruction sets: Share extensive lists of instructions, procedures, and examples to fine-tune Claude's responses without incurring repeated costs.

● Coding assistants: Improve autocomplete and codebase Q&A by keeping a summarized version of the codebase in the prompt.

● Agentic tool use: Enhance performance for scenarios involving multiple tool calls and iterative code changes, where each step typically requires a new API call.
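To put these per-MTok prices into concrete terms, here is a rough Python sketch comparing the cost of repeatedly sending the same large context with and without caching. It is a back-of-the-envelope model, not Anthropic's billing logic. It uses the prices quoted in this article and assumes the cached context stays in the cache for every request (in practice cached entries expire after a period of inactivity). The `Pricing` class and the two cost functions are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass

MTOK = 1_000_000  # tokens per "MTok"


@dataclass(frozen=True)
class Pricing:
    """Prices in US dollars per million tokens (hypothetical helper type)."""
    input: float
    output: float
    cache_write: float
    cache_read: float


# Figures as quoted in this article (August 2024, public beta).
PRICES = {
    "claude-3-haiku": Pricing(input=0.25, output=1.25, cache_write=0.30, cache_read=0.03),
    "claude-3.5-sonnet": Pricing(input=3.00, output=15.00, cache_write=3.75, cache_read=0.30),
    "claude-3-opus": Pricing(input=15.00, output=75.00, cache_write=18.75, cache_read=1.50),
}


def cost_without_cache(p: Pricing, context_tokens: int, question_tokens: int,
                       output_tokens: int, requests: int) -> float:
    """Every request resends the full context at the normal input price."""
    per_request = ((context_tokens + question_tokens) * p.input
                   + output_tokens * p.output) / MTOK
    return requests * per_request


def cost_with_cache(p: Pricing, context_tokens: int, question_tokens: int,
                    output_tokens: int, requests: int) -> float:
    """The first request writes the context to the cache; later ones read it.

    Assumption: the cache never expires between requests.
    """
    if requests <= 0:
        return 0.0
    write = context_tokens * p.cache_write / MTOK
    reads = (requests - 1) * context_tokens * p.cache_read / MTOK
    # The per-request question and the output are billed normally every time.
    rest = (question_tokens * p.input + output_tokens * p.output) / MTOK
    return write + reads + requests * rest


if __name__ == "__main__":
    sonnet = PRICES["claude-3.5-sonnet"]
    # Hypothetical workload: a 100,000-token document queried 10 times.
    without = cost_without_cache(sonnet, 100_000, 0, 0, 10)
    with_cache = cost_with_cache(sonnet, 100_000, 0, 0, 10)
    print(f"without caching: ${without:.3f}")
    print(f"with caching:    ${with_cache:.3f}")
    print(f"saving:          {1 - with_cache / without:.1%}")
```

For a 100,000-token context reused across 10 requests on Claude 3.5 Sonnet, and ignoring the question and output tokens, this works out to $3.00 without caching against $0.645 with it (one $0.375 cache write plus nine $0.03 reads), a saving of 78.5%. The saving approaches, but never reaches, 90% as the number of requests grows, and for a single request caching costs slightly more than not caching because of the write premium.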


Last Updated (Monday, 19 August 2024)