Create Your Own LLM Pipelines With Instill AI

Written by Nikos Vaggalis

Thursday, 08 August 2024

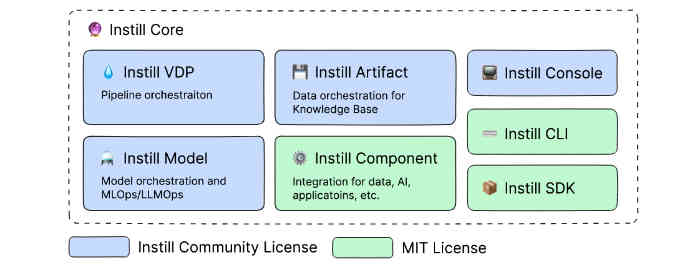

Instill AI is the maker of the Instill Core platform, which includes a powerful visual pipeline builder for chaining LLMs together without writing any code. Versatile Data Pipeline, or VDP for short, is part of the Instill Core package alongside Instill Model and Instill Artifact, all working together seamlessly. While each plays its part, VDP lies at the heart of Core since its job is to orchestrate the rest. With VDP you can:

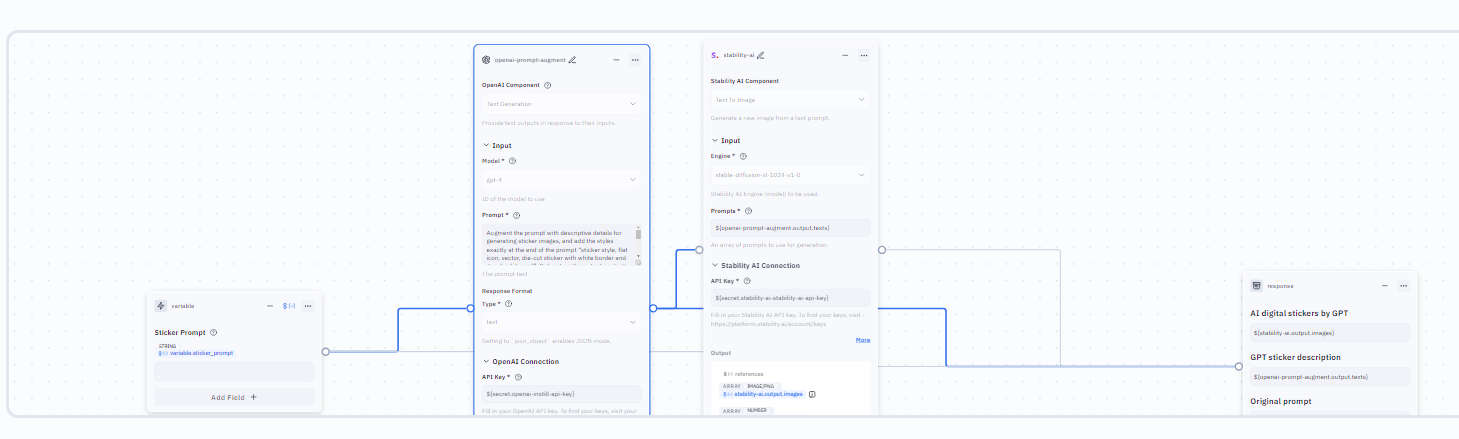

Let's see a simple example. We want to enter a prompt of simple keywords describing a sticker, choose an LLM which will augment that prompt to make it more descriptive, and feed the augmented prompt to Stable-Diffusion-XL, which in turn generates the image.

But before jumping into the action, let's find out how you can install Instill. There are a few options: you can either self-host Instill by getting it from its GitHub repo, available for various platforms and as a Docker image, or log into the managed Instill Cloud by making a free account. We opted for the latter to speed up the demonstration. So after you log in, the steps to create a pipeline are:

1. Initiate the pipeline by selecting "+ Create Pipeline" in the Pipelines page.
2. In the "Create new pipeline" dialog, fill in the following details:
   - Owner: Determine whether this pipeline is created under your personal account or an organization account.
3. From the workbench you are presented with, select the Component that will trigger the process by accepting our initial prompt, and name it "Select Prompt".
4. Now add a Component by clicking "Component +" and set:
   - Model: "OpenAI gpt3.5 Turbo"
   - Prompt:

     > Augment the prompt with descriptive details for generating sticker images, and add the styles exactly at the end of the prompt “sticker style, flat icon, vector, die-cut sticker with white border and grey background”. Only return the output content.
     >
     > Prompt: a bear
     >
     > Output: a brown bear dancing in a forest, sticker style, flat icon, vector, die-cut sticker with white border and grey background
     >
     > Prompt: a dog writes code
     >
     > Output: a dog writes code in front of a laptop and drinks coffee, sticker style, flat icon, vector, die-cut sticker with white border and grey background
     >
     > Prompt: ${variable.sticker_prompt}

   - Response format: "text"
5. Connect another Component, this time a Stability AI component, with the task of "Text To Image". This will work on the output emitted by the OpenAI component of step 4.
6. Connect a Response, or continue repeating steps 3-5 (adding different Components) until your pipeline is completely set up.

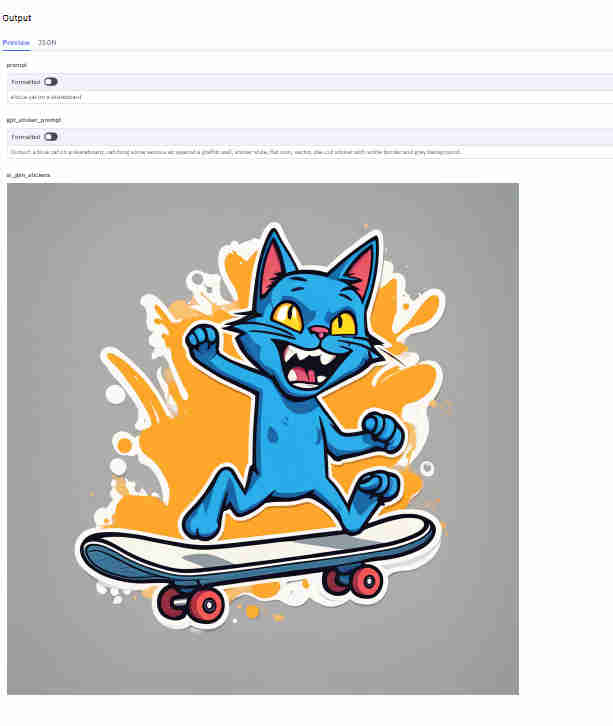

7. Run the pipeline. For instance, if we trigger the pipeline by entering the very simple prompt "a blue cat on a skateboard", we get:

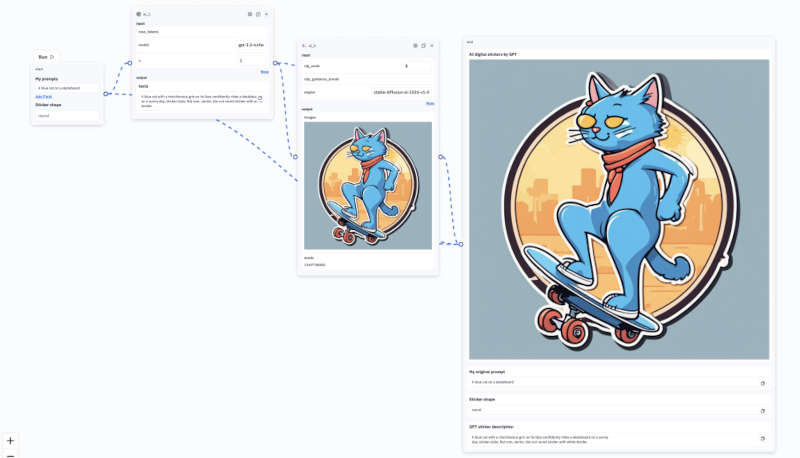
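To make the role of the `${variable.sticker_prompt}` reference in step 4 more concrete, here is a minimal Python sketch of how such a placeholder could be resolved when the pipeline is triggered. This is only an illustration: the `resolve` function, the `REFERENCE` pattern and the `context` dictionary are hypothetical names invented for this example, the template is a shortened version of the prompt above, and none of it is Instill's actual implementation.

```python
import re

# Shortened version of the step 4 prompt; the reference syntax is copied
# from the article, everything else here is a hypothetical stand-in.
PROMPT_TEMPLATE = (
    "Augment the prompt with descriptive details for generating sticker images. "
    "Only return the output content.\n"
    "Prompt: ${variable.sticker_prompt}"
)

# Matches references such as ${variable.sticker_prompt}.
REFERENCE = re.compile(r"\$\{([A-Za-z_][\w.]*)\}")


def resolve(template: str, context: dict) -> str:
    """Replace every ${a.b} reference with the value found by walking context."""

    def lookup(match: re.Match) -> str:
        value = context
        for key in match.group(1).split("."):
            value = value[key]  # raises KeyError if the reference is unknown
        return str(value)

    return REFERENCE.sub(lookup, template)


# The trigger input from step 7, stored under the name used in the reference.
context = {"variable": {"sticker_prompt": "a blue cat on a skateboard"}}
resolved = resolve(PROMPT_TEMPLATE, context)
print(resolved)
```

The resolved text is what the LLM component would receive in this sketch: the fixed instructions followed by the user's keywords in place of the reference.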

A more descriptive example, which also shows how the pipeline is constructed, is:

To cut a long story short, here's the sticker-generating pipeline as a pre-made template for you to experiment with. Instill comes with many pre-made templates which you can clone and tweak in your own playground.

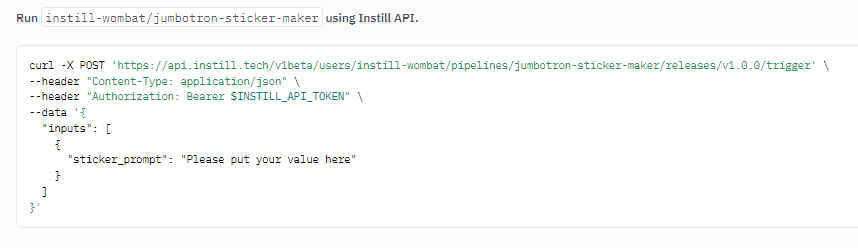

Furthermore, the API can be used in your code with the Python and TypeScript SDKs, or if you want CLI access to it, you can use Instill Console and Instill CLI. Note that while an account on the managed Cloud version is free, it comes with a fixed amount of credits, which are consumed whenever you run your pipelines. The account also hands you an Instill API key. To run your own pipelines comprising non-Instill components, you also need to possess the API keys of those components, say OpenAI or Stability AI. However, as said, there are many free pipelines offered as templates which can be used without such restrictions; or you can just initiate pipelines for free by hooking into the default

The key takeaway is that you can create elaborate pipelines by picking, matching and chaining any of the available Components together:
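As a rough illustration of what chaining Components means, the following Python sketch passes a shared state through a list of steps, each of which adds its own output for the next one to use. It does not use the Instill SDK: `augment_prompt`, `text_to_image` and `run_pipeline` are hypothetical stubs written for this example, and the "image" is just a placeholder string rather than a real model call.

```python
from typing import Callable, Dict, List

# A component reads the shared state and returns new fields to merge into it.
Component = Callable[[Dict[str, str]], Dict[str, str]]

STYLE_SUFFIX = (
    "sticker style, flat icon, vector, "
    "die-cut sticker with white border and grey background"
)


def augment_prompt(state: Dict[str, str]) -> Dict[str, str]:
    # Stub for the LLM step: a real component would call a model here.
    return {"augmented_prompt": f"{state['sticker_prompt']}, {STYLE_SUFFIX}"}


def text_to_image(state: Dict[str, str]) -> Dict[str, str]:
    # Stub for the text-to-image step: returns a placeholder, not image bytes.
    return {"image": f"<image generated from: {state['augmented_prompt']}>"}


def run_pipeline(components: List[Component], inputs: Dict[str, str]) -> Dict[str, str]:
    """Run the components in order, merging each output into the state."""
    state = dict(inputs)
    for component in components:
        state.update(component(state))
    return state


result = run_pipeline(
    [augment_prompt, text_to_image],
    {"sticker_prompt": "a blue cat on a skateboard"},
)
print(result["image"])
```

The order of the list matters in this sketch: `text_to_image` relies on the `augmented_prompt` field produced by the step before it, which mirrors how the Stability AI component in the example works on the output of the OpenAI component.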

In summary, Instill is an innovative tool that simplifies developing AI-powered applications, whether you're a pro or a citizen developer.

More Information

Instill AI

jumbotron-sticker-maker template

Related Articles

Mirascope - Python's Alternative To Langchain


| Last Updated ( Thursday, 08 August 2024 ) |