Mirascope - Python's Alternative To Langchain

Written by Nikos Vaggalis

Thursday, 20 June 2024


Mirascope is a Python library that lets you access a range of Large Language Models in a more straightforward and Pythonic way.

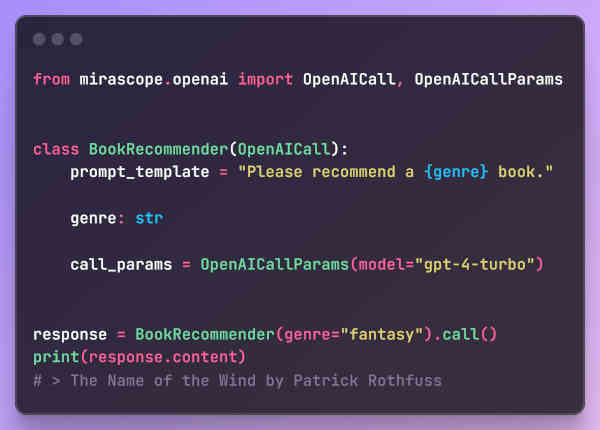

If you've been using Langchain to call into various model providers such as OpenAI, Anthropic, Mistral, etc., you'll find that this library does the same, but more fluently and with less overhead. The notion is that working with LLMs should be as intuitive and simple as coding any other Python project.

Mirascope unites the core modules for accessing each distinct LLM provider under a common API, while still giving you full access to every nitty-gritty detail of them when you need it; that is, you can use the Mirascope wrapper API but, at any point in your workflow, get access to the original provider classes. As an API, Mirascope's classes encapsulate everything that can impact the results of using that class.

For example, you can initialize an OpenAICall instance and invoke its call method to generate an OpenAICallResponse.
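The idea of keeping everything that affects a call in one class can be sketched in plain Python. This is a hypothetical illustration, not Mirascope's actual API: `PromptCall`, `CallResponse`, `RecipeCall` and the stub `fake_llm` are invented names, and the stub simply echoes the prompt so the example runs offline. The prompt template, its inputs and the call parameters (model, temperature) all live on one class, and `call()` returns a response object.

```python
from dataclasses import dataclass
from typing import Any, Callable, ClassVar, Dict


@dataclass
class CallResponse:
    """Hypothetical response wrapper: generated text plus the raw provider payload."""
    content: str
    raw: Dict[str, Any]


def fake_llm(prompt: str, **params: Any) -> Dict[str, Any]:
    """Stub provider (assumption): echoes the prompt so the sketch runs offline."""
    return {"text": f"[{params.get('model', 'unknown')}] {prompt}", "params": params}


class PromptCall:
    """Hypothetical base class colocating the prompt template and call parameters."""

    prompt_template: ClassVar[str] = ""
    call_params: ClassVar[Dict[str, Any]] = {"model": "demo-model", "temperature": 0.7}

    def __init__(self, provider: Callable[..., Dict[str, Any]] = fake_llm, **inputs: Any) -> None:
        self.provider = provider  # a real SDK wrapper could be passed here instead
        self.inputs = inputs      # values for the template's {placeholders}

    def prompt(self) -> str:
        """Fill the template with this instance's inputs."""
        return self.prompt_template.format(**self.inputs)

    def call(self) -> CallResponse:
        """Send the formatted prompt plus call_params to the provider."""
        raw = self.provider(self.prompt(), **self.call_params)
        return CallResponse(content=raw["text"], raw=raw)


class RecipeCall(PromptCall):
    """Everything that affects this particular call is defined in one place."""

    prompt_template = "Recommend a recipe that uses {ingredient}."
    call_params = {"model": "demo-model", "temperature": 0.2}


response = RecipeCall(ingredient="apples").call()
print(response.content)  # [demo-model] Recommend a recipe that uses apples.
```

Passing a different `provider` function would leave `RecipeCall` itself untouched, which is the "common API on top, provider underneath" idea in miniature.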

You can also chain calls, retry when LLM providers fail for various reasons, keep a stateful chat history, use function calling and more. Several providers are currently supported, including OpenAI, Anthropic and Mistral.
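Retries and chaining are easy to picture without any library. The sketch below is hypothetical and is not how Mirascope implements either feature: `ProviderError`, `FlakyProvider` and `call_with_retries` are invented names. `FlakyProvider` simulates a provider that fails a set number of times before answering, `call_with_retries` retries with exponential backoff, and the two-step chain feeds the first call's output into the second prompt.

```python
import time
from typing import Callable


class ProviderError(Exception):
    """Hypothetical transient failure (rate limit, timeout, overloaded server)."""


class FlakyProvider:
    """Stub provider (assumption): fails `failures` times, then echoes the prompt."""

    def __init__(self, failures: int) -> None:
        self.failures = failures
        self.attempts = 0  # total attempts across all calls

    def __call__(self, prompt: str) -> str:
        self.attempts += 1
        if self.attempts <= self.failures:
            raise ProviderError("temporary failure")
        return f"answer to: {prompt}"


def call_with_retries(fn: Callable[[str], str], prompt: str,
                      max_attempts: int = 3, base_delay: float = 0.01) -> str:
    """Call fn(prompt), retrying on ProviderError with exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn(prompt)
        except ProviderError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            time.sleep(base_delay * 2 ** (attempt - 1))  # waits 0.01 s, 0.02 s, ...
    raise AssertionError("unreachable")


provider = FlakyProvider(failures=2)
# Link 1 of the chain: succeeds on the third attempt.
fruit = call_with_retries(provider, "Name a fruit.")
# Link 2: the first call's output is interpolated into the next prompt.
recipe = call_with_retries(provider, f"Suggest a recipe using this: {fruit}")
print(recipe)
print(provider.attempts)  # 4: three for the first call, one for the second
```

In practice you would retry only errors that are genuinely transient; the tenacity library packages this retry-with-backoff pattern for general use.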

As mentioned, Mirascope's unified interface makes it fast and simple to swap between providers. For instance, the same call can be written first with OpenAI and then with Mistral, with very little changing between the two.
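Why a unified interface makes swapping cheap can be sketched with a `typing.Protocol`. Everything here is hypothetical: `Provider`, `OpenAIStub`, `MistralStub` and `run` are illustrative names, and the stubs return canned strings rather than calling the real OpenAI or Mistral SDKs. Because the application code depends only on the shared interface, switching providers means changing a single argument.

```python
from typing import Protocol


class Provider(Protocol):
    """Hypothetical common interface that every provider adapter implements."""

    name: str

    def complete(self, prompt: str, temperature: float = 0.7) -> str: ...


class OpenAIStub:
    """Stand-in for an OpenAI-backed adapter (no network access)."""

    name = "openai"

    def complete(self, prompt: str, temperature: float = 0.7) -> str:
        return f"openai(t={temperature}): {prompt}"


class MistralStub:
    """Stand-in for a Mistral-backed adapter (no network access)."""

    name = "mistral"

    def complete(self, prompt: str, temperature: float = 0.7) -> str:
        return f"mistral(t={temperature}): {prompt}"


def run(provider: Provider, ingredient: str) -> str:
    """Application code: same prompt and parameters whichever provider is passed."""
    prompt = f"Recommend a recipe that uses {ingredient}."
    return provider.complete(prompt, temperature=0.2)


print(run(OpenAIStub(), "apples"))   # openai(t=0.2): Recommend a recipe that uses apples.
print(run(MistralStub(), "apples"))  # mistral(t=0.2): Recommend a recipe that uses apples.
```

A `Protocol` is checked structurally by type checkers, so the adapters do not need to inherit from a shared base class to be accepted by `run`.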

So there you have it: access LLMs in a fluent, less bloated and Pythonic way.

Related Articles

IBM Launches The Granite Code LLM Series

Get Started With Ollama's Python & Javascript Libraries


Last Updated (Thursday, 20 June 2024)