OpenAI Introduces GPT-4o, Loses Sutskever

Written by Sue Gee

Wednesday, 15 May 2024

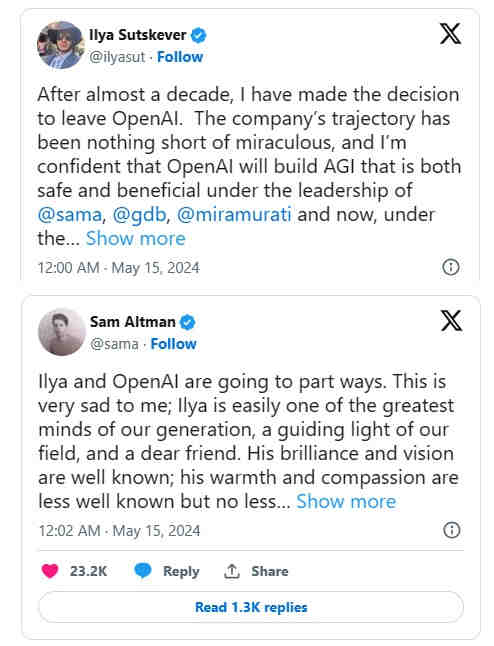

It's an eventful week for OpenAI, the research company dedicated to making advances towards Artificial General Intelligence that is both safe and beneficial to all. A day after it showcased its latest multimodal flagship model, GPT-4o, Ilya Sutskever, OpenAI's Chief Scientist and one of its co-founders, left the company. Sutskever's decision to move on was publicized in a tweet, and Sam Altman responded via the same medium:

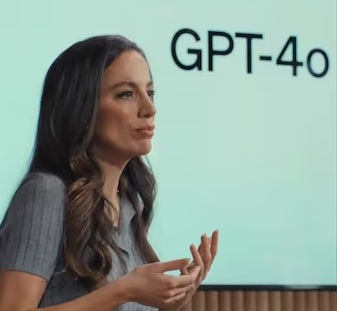

Although Sutskever expresses excitement about his next move, referring to "a project that is very personally meaningful to me about which I will share details in due time", no hint is given of what triggered his departure at this time. However, the fact that Jan Leike, who shared responsibility with Sutskever for the Superalignment team that OpenAI set up to counter the threat of developing a harmful superintelligence, has also resigned suggests a rift over a matter of policy. Another speculation is that it could be due to strained relationships after Sutskever was among those who voted to remove Altman from his position as CEO last November. After protests from OpenAI employees, Altman was reinstated and Sutskever expressed personal regret via a tweet.

GPT-4o made its debut at the OpenAI Spring Update on May 13th, fronted by CTO Mira Murati. Prior to the event, rumors had circulated that the next major model from OpenAI, GPT-5, might encompass search and challenge Google's dominance in that area. Instead, what was presented was an enhanced version of GPT-4 that OpenAI has rebuilt and retrained from scratch to handle speech-to-speech, as well as other forms of input and output, without first converting them to text, which makes it significantly faster.

Murati's first announcement at the event was that OpenAI is opening up many of the features previously reserved for paying customers to those who use it for free, and this extends to the new flagship model GPT-4o, where the "o" stands for "omni", reflecting its ability to accept and generate any combination of text, audio and images. GPT-4o has new capabilities including live voice translation, the ability to understand video input and the ability to describe what it is seeing, enabling it to act as a guide for the visually impaired. Some of the features demoed in the video, such as the ability to detect emotion by looking at a face through the camera, aren't ready just yet but will be rolled out over the coming weeks. GPT-4o exhibits a female personality, reacting to compliments in a flirtatious manner. It is also able to modify its voice on demand, as demonstrated in the video when it tells a story in a robotic voice.

GPT-4o will be free for all users; however, paid users will continue to have up to five times the capacity limits of free users, so OpenAI and Microsoft are still likely to make money from it despite its high compute costs.

OpenAI's announcements came the day before Google I/O, making Google's announcement of its Gemini Live AI assistant, which like GPT-4o is multimodal with impressive voice and video capabilities, seem like a catch-up.

More Information

Ilya Sutskever to leave OpenAI, Jakub Pachocki announced as Chief Scientist

Last Updated (Friday, 13 September 2024)