Insights From AI Index 2024 Report

Written by Sue Gee

Wednesday, 17 April 2024

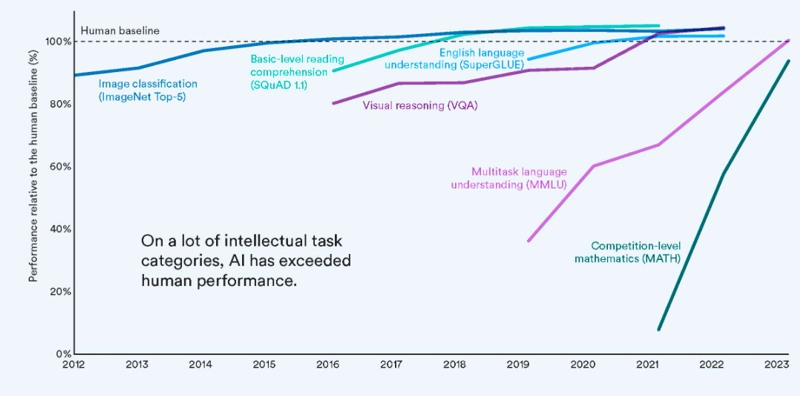

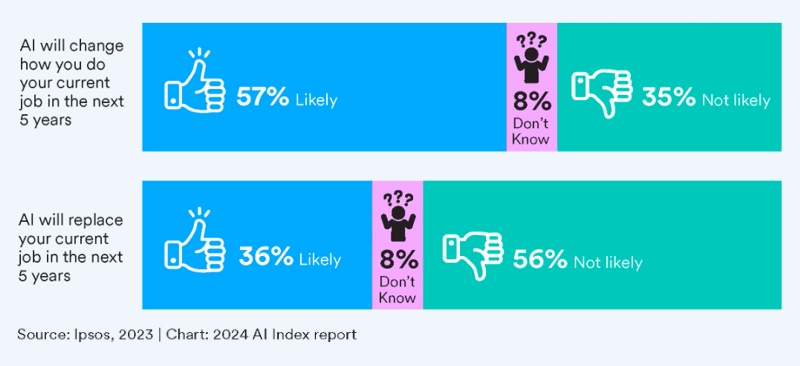

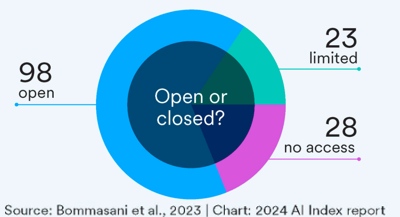

Published this week, the latest Stanford HAI AI Index report tracks worldwide trends in AI. Combining its own new research with findings from many other sources, it provides a wide-ranging look at how AI is doing.

Launched in 2017 and now in its seventh edition, the AI Index is an independent initiative at the Stanford Institute for Human-Centered Artificial Intelligence (HAI). It was conceived within the One Hundred Year Study on Artificial Intelligence (AI100) (see our report The Effects Of AI - Stanford 100 Year Study), and it aims to provide information about the field's technical capabilities, costs, ethics and more. According to its own website:

"The AI Index report tracks, collates, distills, and visualizes data related to artificial intelligence (AI). Our mission is to provide unbiased, rigorously vetted, broadly sourced data in order for policymakers, researchers, executives, journalists, and the general public to develop a more thorough and nuanced understanding of the complex field of AI."

The project is overseen by the AI Index Steering Committee, an interdisciplinary group of experts from across academia and industry who also contribute to the report. The latest report is a PDF of over 500 pages written by Nestor Maslej, Loredana Fattorini and the 11-member steering committee, with contributions from another 20 researchers.

The question every reader of the report will want answered is "How is AI doing?", and its top takeaway sums it up:

"AI has surpassed human performance on several benchmarks, including some in image classification, visual reasoning, and English understanding. Yet it trails behind on more complex tasks like competition-level mathematics, visual commonsense reasoning and planning."
The earliest benchmark in the chart is ImageNet, where AI visual classification started in 2012 at around 90% of the human baseline, took three years to reach 100%, gained a few more percentage points and then stayed steady from 2017 to 2021, the latest data point. While AI hasn't yet reached top human performance in competition-level mathematics, it has climbed from around 10% in 2021 to over 90% within two years. Multitask language understanding also showed fast progress, rising from around 40% in 2019 to 100% in 2022.

Another question the report addresses is "What is AI doing?", and this chart from a McKinsey survey reveals how businesses are using AI:

The most commonly adopted AI use case by function among surveyed businesses in 2023 was contact-center automation (26%), followed by two marketing and sales functions, personalization (23%) and customer acquisition (22%). Use of AI in product development is almost as widespread, with AI-based enhancements of products at 22%, and creation of new AI-based products tying with product feature optimization at 19%.

Another independent survey, from Ipsos, included questions about how people perceive AI's impact on their current jobs. Overall, 57% of respondents think AI is likely to change how they perform their current job within the next five years, and 36% fear AI may replace their job in the same time frame.

We have seen the extent to which open source software is increasingly dominant across many spheres, and AI is no exception. Of the 149 foundation models released in 2023, more than double the number released in 2022, 65.7% were open-source, compared with only 44.4% in 2022 and 33.3% in 2021.

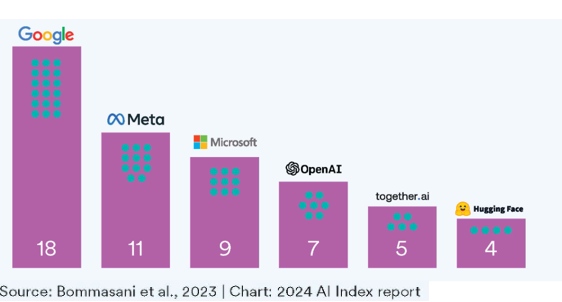

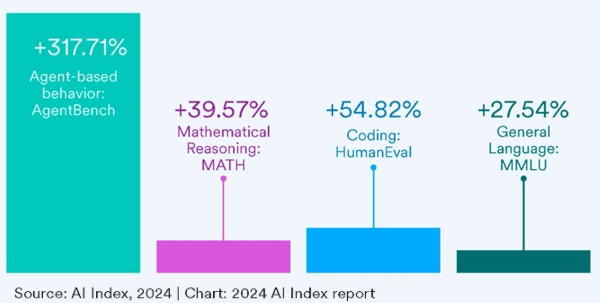

Looking at the number of foundation models by organisation reveals that Google released the most models in 2023, consolidating a lead it has held since 2019. Over this period Google released a total of 40 foundation models, followed by OpenAI with 20.

The distribution of the 2023 foundation models by sector shows that industry, which released 108 new foundation models, was responsible for the vast majority (72%). Academia released 28 models and a further 9 were industry-academia collaborations. Government accounted for the remaining 4.

One reason for Google's dominance is that training these models takes deep pockets. According to the report:

"One of the reasons academia and government have been edged out of the AI race: the exponential increase in cost of training these giant models. Google's Gemini Ultra cost an estimated $191 million worth of compute to train, while OpenAI's GPT-4 cost an estimated $78 million. In comparison, in 2017, the original Transformer model, which introduced the architecture that underpins virtually every modern LLM, cost around $900."

On the basis of its own research, the AI Index reveals that:

"Closed-source models still outperform their open-sourced counterparts. On 10 selected benchmarks, closed models achieved a median performance advantage of 24.2%, with differences ranging from as little as 4.0% on mathematical tasks like GSM8K to as much as 317.7% on agentic tasks like AgentBench."

HumanEval, one of the coding benchmarks in the above chart, evaluates AI systems' coding ability. It consists of 164 challenging handwritten programming problems and was introduced by OpenAI researchers in 2021. AgentCoder, a GPT-4 model variant, leads in HumanEval performance, scoring 96.3%, an 11.2 percentage point increase over the highest score in 2022. Since 2021, performance on HumanEval has increased by 64.1 percentage points, which seems to be good news for any developer who wants AI assistance with coding tasks.
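As a quick arithmetic check on the sector breakdown of 2023 foundation models, the following Python sketch sums the four sector counts quoted above and converts each to a share of the total. It uses only the figures given in this article; the dictionary name and layout are our own illustration, not anything taken from the report.

```python
# Foundation models released in 2023, by sector, using the counts quoted in this article.
# The variable names here are illustrative only.
counts = {
    "Industry": 108,
    "Academia": 28,
    "Industry-academia collaboration": 9,
    "Government": 4,
}

# Total across all sectors (should match the 149 models released in 2023)
total = sum(counts.values())

# Each sector's share of the total, as a percentage
shares = {sector: 100 * n / total for sector, n in counts.items()}

for sector, pct in shares.items():
    print(f"{sector:32s} {counts[sector]:4d}  {pct:5.1f}%")
```

The four counts add up to 149, matching the total number of foundation models released in 2023, and industry's 108 models work out to about 72.5% of that total, which the summary rounds to 72%.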

More Information

Artificial Intelligence Index Report 2024

Related Articles

The Effects Of AI - Stanford 100 Year Study

Google's 25 Years of AI Progress


Last Updated: Thursday, 18 April 2024