| AI Understands HTML |

| Written by Mike James | |||

| Wednesday, 19 October 2022 | |||

|

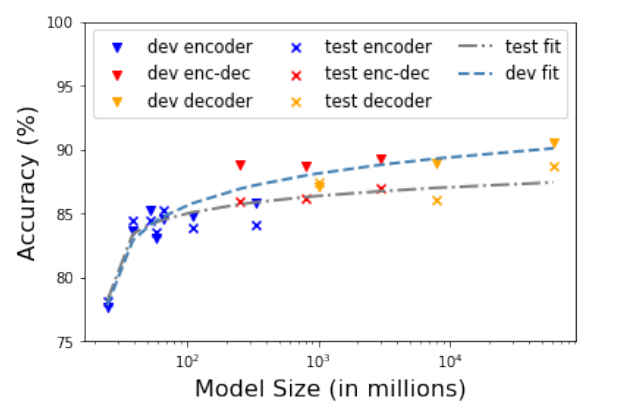

Large Language Models, LLMs, like GPT3 and BERT are all the rage at the moment and you can ask if they truly understand anything at all. Now we have an example of the same technology applied to "understanding" HTML - this is super scraping and could remove the human from the loop. HTML is a strange beast. It started out as a markup language which confused semantics with presentation. Now it is supposed to be mostly about classifying the parts of a page with CSS dealing with presentation - but it generally isn't. Most HTML pages were, and are, constructed with the designing thinking that H1 is a bigger header than H2 rather than a more important header - but no mind it still conveys the logical structure of the document as long as "bigger" is "more important". Given that so much information is coded up within HTML the question is, can we make use of the structure to infer what the information is all about? A team from Google Research thinks so. Its technique could provide us with the ability to "scrap" web pages and automatically extract information that can be put to use - usually creating other web pages. The same technique can be used to understand a web page well enough to allow a program to make use of it. For example, you could give the AI a verbal instruction to sign in using a given user name and password and it could navigate the page, enter the information in the correct places and find and click the submit button - so replacing the human in the loop. The suprising part of this study is that taking a pretrained network, i.e. one trained on general language data, only a little fine tuning was necessary to achieve better results than a network trained only on HTML. It seem true that the LLMs do extract structure from general language and this structure is also applicable to artifical languages such as HTML. "While previous work has developed dedicated architectures and training procedures for HTML understanding, we show that LLMs pretrained on standard natural language corpora transfer remarkably well to HTML understanding tasks. For instance, fine-tuned LLMs are 12% more accurate at semantic classification compared to models trained exclusively on the task dataset." They tested the models on three tasks:

It seems that HTML is understandable and we can automate interaction with it and data extraction. Of course, this is particularly relevant to Google and search engines in general that have to understand a page to index it, but perhaps this goes beyond simple indexing. Perhaps in the future web crawlers can interact with the pages they visit. If you have ever tried to automate a web interaction you will know how difficult and fragile a task it is. The page changes just a little and all your scraping efforts are so much dead code until you fix the problem. We could have engineered automation interfaces on our web pages, but given we haven't AI seems to be the only real option.

If you are interested in these ideas the research team has a gift for you: "To promote further research on LLMs for HTML understanding, we create and open-source a large-scale HTML dataset distilled and auto-labeled from CommonCrawl" More InformationUnderstanding HTML with Large Language Models Izzeddin Gur, Ofir Nachum, Yingjie Miao, Mustafa Safdari, Austin Huang, Aakanksha Chowdhery, Sharan Narang, Noah Fiedel and Aleksandra Faust - Google Research Related ArticlesHeadless Chrome and the Puppeteer Library for Scraping and Testing the Web Alexa Teacher Models Outperform GPT-3 Amazon Invests In Conversational AI The Unreasonable Effectiveness Of GPT-3 Alexa Prize SocialBot Grand Challenge 5 To be informed about new articles on I Programmer, sign up for our weekly newsletter, subscribe to the RSS feed and follow us on Twitter, Facebook or Linkedin.

Comments

or email your comment to: comments@i-programmer.info

|

|||

| Last Updated ( Wednesday, 19 October 2022 ) |