| AI Beats 50 Year Old Best Multiplication Algorithm |

| Written by Mike James | |||

| Thursday, 06 October 2022 | |||

|

Multiplying two matrices together is fundamental and almost elementary. How fast you can do the job is both practically and theoretically important. The fastest known algorithm has just been beaten - not by a mathematician but by DeepMind's AlphaTensor, a reinforcement learning program. The idea that reinforcement learning can be used to solve a problem that seems to involve detailed analysis and logic to construct a precise algorithm is a strange one. We are more used to neural networks performing "fuzzy" tasks such as recognizing faces or objects in general. The result that AlphaTensor can optimize an algorithm is more an indication that there is yet another area that AI can potentially take over. The actual algorithm for multiplying two matrices together is remarkably simple, but it involves three nested for loops and so it takes in the order of n3 multiplications to multiply two n x n matrices together.

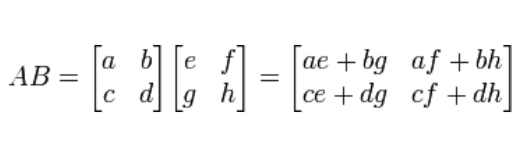

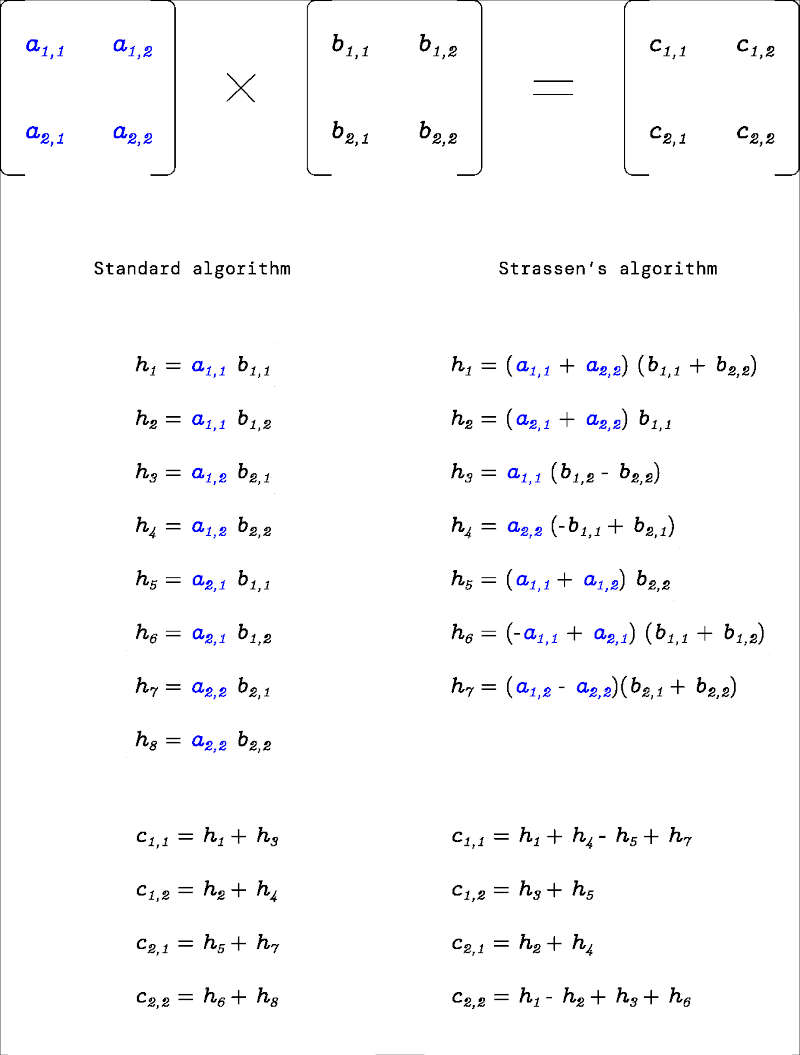

The algorithm is so simple that you might think that there is no scope for a general reduction in this amount of work - it clearly needs three loops of order n in general. However, it has long been conjectured that matrix multiplication might be possible in very nearly quadratic time. That is you might be able to multiply two matrices in time proportional nω with ω<3. The first such algorithm was invented in 1969 by Volker Strassen. You can see how it works from this illustration taken from the announcement of the result:

He found a way to multiply 2x2 matrices using just 7, rather than 8, multiplications - the trade-off was that you had to do more additions. While it is usual to assume that multiplications take longer than additions this isn't always so true with modern hardware. You can perform the multiplication of matrices of any size by recursively multiplying 2x2 blocks and so this gives rise to a general algorithm that takes O(nω) with ω=log27=2.807. What is interesting is that it took so long to realize that there might be a faster way and even today we don't know very much about the 3x3 case. DeepMind takes on the problem using the approach of AlphaZero, its game-playing AI, by turning matrix multiplication into a game. Exactly how this works is complicated and it is the key to how the problem is solved. The idea is that matrix multiplication is a special case of a more general idea - a multilinear function. Such functions can be represented by tensors - this is one reason tensors are so useful. Matrix multiplication of 2x2 matrices can be represented as a 3D (rank 3) 4x4x4 tensor, F. This is a strange idea. What you actually do is convert each matrix to a 4D vector and multiply the first by F to give a 4x4 tensor and then multiply this by the second to give a 4D vector which is the result of multiplying the original matrices. The point is that F codes the process of matrix multiplication, i.e. it is a program for computing the result using tensor operations. With this formulation finding a faster algorithm can be implemented as finding a decomposition of F into simpler (rank 1) tensors. This is what the DeepMind team has managed to turn into a game. Starting from the initial tensor F, the player repeatedly picks three lower rank tensors and uses these to reduce F towards zero. If the system manages to reduce F to zero the sequence of lower rank tensors can be summed to provide a tensor decomposition that might provide a faster algorithm. This is the game that AlphaTensor is set to solve using the same techniques of reinforcement and tree search that AlphaZero used to learn to play other games. Does it work? The paper states: "Our agent, AlphaTensor, is trained to play a single-player game where the objective is finding tensor decompositions within a finite factor space. AlphaTensor discovered algorithms that outperform the state-of-the-art complexity for many matrix sizes. Particularly relevant is the case of 4 × 4 matrices in a finite field, where AlphaTensor’s algorithm improves on Strassen’s two-level algorithm for the first time, to our knowledge, since its discovery 50 years ago" How much better? "AlphaTensor improves over the best algorithms known for several matrix sizes. In particular, AlphaTensor finds an algorithm for multiplying 4 × 4 matrices using 47 multiplications in Z2, thereby outperforming Strassen’s two-level algorithm, which involves 72 = 49 multiplications. By applying this algorithm recursively, one obtains a practical matrix multiplication algorithm in Z2 with complexity For example, multiplying a 4x5 by a 5x5 matrix takes 100 multiplications. the previous state-of-the-art algorithm reduced this to 80; AlphaTensor reduces it to 76. While much of the hype surrounding this effort focuses on the improvements over the human-created mutliplication algorithms - "improves on Strassen’s two-level algorithm for the first time, to our knowledge, since its discovery 50 years ago" - tensor decomposition is an important problem in its own right. The paper notes: "Tensors can represent any bilinear operation, such as structured matrix multiplication, polynomial multiplication or more custom bilinear operations used in machine learning" As an example an algorithm that multiplies a skew-symetric matrix and a vector does it in roughly 1/2n2 instead of the currently best time of n2. There is a lot more to discover in the paper - it is worth reading.

More InformationDiscovering faster matrix multiplication algorithms with reinforcement learning Discovering novel algorithms with AlphaTensor Alhussein Fawzi, Matej Balog, Bernardino Romera-Paredes, Demis Hassabis, Pushmeet Kohli https://github.com/deepmind/alphatensor Related ArticlesBreakthrough! Faster Matrix Multiply To be informed about new articles on I Programmer, sign up for our weekly newsletter, subscribe to the RSS feed and follow us on Twitter, Facebook or Linkedin.

Comments

or email your comment to: comments@i-programmer.info

|

|||

| Last Updated ( Friday, 07 October 2022 ) |