Neurons Are Smarter Than We Thought

Written by Mike James

Wednesday, 18 August 2021

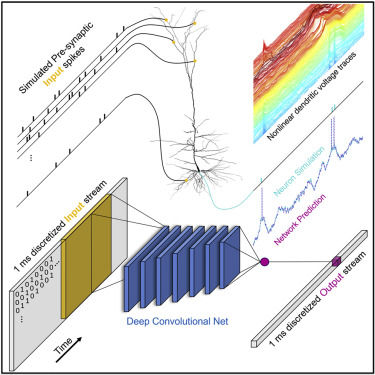

Artificial Neural Networks seem to compute in a way that is similar to the way that we do, and they use artificial neurons that model the biological neuron, or at least they are supposed to. Now it seems that we have long underestimated the computing power of the neuron. A new paper suggests that a single neuron is more like a 5-8 layer network than just one of our artificial neurons.

This is important because we tend to quantify the computational power of the brain, if only in a very rough way, by its number of neurons, which we then roughly equate to an artificial neural network of a similar size. It gives us a feeling for how far away from the goal we are. If, however, we have underestimated the computing power of a single neuron, then our goal is even further away than it seems at the moment. There has been some suggestion that a single neuron is equivalent to a two-layer network, but this work pushes that to many more layers.

The real problem is how to characterize the computing power of a neuron. If you just look at its macro features, you end up with a model that is the classical McCulloch-Pitts neuron: a set of inputs with weights and an output that "fires" when the weighted sum of the inputs reaches a threshold. However, if you look at the behavior of a neuron, you can arrive at a different idea of "what it does". If you take a neuron and map its input-output characteristics by applying inputs and recording the outputs, you have something to model. You can then take an artificial neural network and see what is required to realize that model.

This is what has been done by researchers David Beniaguev, Idan Segev and Michael London from the Edmond and Lily Safra Center for Brain Sciences (ELSC) at the Hebrew University of Jerusalem. Using a neuron from layer 5 of the cortex, they found that it took a five- to eight-layer network to replicate its I/O characteristics.
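To make the contrast between the two views concrete, here is a minimal Python sketch of the McCulloch-Pitts point neuron described above, placed next to a toy version of the "neuron as a two-layer network" idea. In the toy model each dendritic branch passes its own weighted sum through a sigmoid and the soma thresholds the summed branch outputs. The function names, the sigmoid branch nonlinearity and the `gain`, `offset` and `threshold` values are all illustrative assumptions, not the model used in the new paper or in the earlier two-layer work.

```python
import math


def mcculloch_pitts(inputs, weights, threshold):
    """Point neuron: output 1 ("fire") when the weighted input sum reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def two_layer_neuron(branches, branch_weights, threshold, gain=4.0, offset=1.5):
    """Hypothetical two-layer neuron: each dendritic branch squashes its own weighted
    sum with a sigmoid (first layer), then the soma thresholds the summed branch
    outputs (second layer). gain and offset are illustrative values only."""
    branch_out = [
        sigmoid(gain * (sum(w * x for w, x in zip(ws, xs)) - offset))
        for xs, ws in zip(branches, branch_weights)
    ]
    return 1 if sum(branch_out) >= threshold else 0


def flatten(nested):
    """Turn per-branch lists into one flat input list for the point neuron."""
    return [v for row in nested for v in row]


# Four synapses with unit weights, two on each of two dendritic branches.
weights = [[1.0, 1.0], [1.0, 1.0]]
clustered = [[1, 1], [0, 0]]  # both active synapses on the same branch
spread = [[1, 0], [1, 0]]     # one active synapse on each branch

for name, pattern in (("clustered", clustered), ("spread", spread)):
    point = mcculloch_pitts(flatten(pattern), flatten(weights), threshold=1.5)
    dendritic = two_layer_neuron(pattern, weights, threshold=0.5)
    print(f"{name:9s} point neuron={point} two-layer neuron={dendritic}")
```

Both input patterns give the point neuron the same weighted sum of 2, so it fires for both. The two-layer version fires only when the active inputs are clustered on one branch. Where inputs land on the cell matters in the toy model, and no single weighted sum can express that.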
The criterion the researchers used was how well their network worked outside of its training set. It is generalization ability, rather than just rote memorization of the I/O characteristics, that is used to judge how good the model is.
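The difference between memorizing and generalizing can be shown with a toy comparison. This is a hypothetical Python sketch, not the study's method. A made-up threshold "neuron" produces I/O pairs, with the constants `TRUE_W` and `TRUE_THETA` chosen arbitrarily. Two models are then fitted to a training set and scored on separate, held-out inputs. `LookupTable` rote-memorizes the training pairs. `Perceptron` is a single threshold unit trained with the classic perceptron rule.

```python
import random

# Hypothetical "neuron" whose I/O behaviour we want to model (arbitrary constants).
TRUE_W = (1.0, -1.0, 0.5)
TRUE_THETA = 0.2


def true_drive(x):
    """Weighted input sum minus threshold for the hypothetical neuron."""
    return sum(w * xi for w, xi in zip(TRUE_W, x)) - TRUE_THETA


def true_neuron(x):
    return 1 if true_drive(x) >= 0 else 0


def sample(n, rng, min_margin=0.1):
    """Draw n random inputs in [-1, 1]^3, skipping ones too close to the threshold."""
    data = []
    while len(data) < n:
        x = tuple(rng.uniform(-1.0, 1.0) for _ in range(3))
        if abs(true_drive(x)) >= min_margin:
            data.append((x, true_neuron(x)))
    return data


class LookupTable:
    """Rote memorizer: perfect on inputs it has seen, guesses 0 otherwise."""

    def __init__(self, data):
        self.table = dict(data)

    def predict(self, x):
        return self.table.get(x, 0)


class Perceptron:
    """Single threshold unit trained with the classic perceptron rule."""

    def __init__(self, n_inputs):
        self.w = [0.0] * n_inputs
        self.b = 0.0

    def predict(self, x):
        return 1 if sum(w * xi for w, xi in zip(self.w, x)) + self.b >= 0 else 0

    def fit(self, data, max_epochs=1000):
        for _ in range(max_epochs):
            mistakes = 0
            for x, y in data:
                error = y - self.predict(x)  # -1, 0 or +1
                if error:
                    mistakes += 1
                    self.w = [w + error * xi for w, xi in zip(self.w, x)]
                    self.b += error
            if mistakes == 0:  # every training pair is now classified correctly
                break
        return self


def accuracy(model, data):
    return sum(model.predict(x) == y for x, y in data) / len(data)


rng = random.Random(0)
train, test = sample(200, rng), sample(200, rng)
memorizer = LookupTable(train)
unit = Perceptron(3).fit(train)

print("lookup     train/test:", accuracy(memorizer, train), accuracy(memorizer, test))
print("perceptron train/test:", accuracy(unit, train), accuracy(unit, test))
```

Both models score perfectly on the training pairs. The training data keeps a margin around the threshold, so the perceptron rule is guaranteed to converge. The lookup table, however, can only guess 0 for inputs it has never seen. Only a held-out score therefore separates a model that has captured the neuron's behaviour from one that has merely stored it. The study applied the same kind of criterion, but with multi-layer networks in place of the single unit.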

So what is it that makes a single neuron so complicated? The answer might be its NMDA receptors, because when these were disabled the I/O characteristic could be modelled by a much simpler network. What is an NMDA receptor? "The NMDA receptor (NMDAR) is an ion-channel receptor found at most excitatory synapses, where it responds to the neurotransmitter glutamate, and therefore belongs to the family of glutamate receptors." There you have it, but what it means for artificial neuron models is more difficult to say. It does seem that the response of the neuron is modulated in more complex ways than a simple weighted sum of the inputs.

I'd like to comment more on the research, but the paper, published in the journal Neuron, is behind a paywall, and I'm not going to encourage the practice of paying for access to research we have already paid for. Shame.

More Information

Single cortical neurons as deep artificial neural networks

Related Articles

Neurons Are Two-Layer Networks

Neuromorphic Supercomputer Up and Running

Deep Learning from the Foundations

Last Updated (Wednesday, 18 August 2021)