Nematode Worm Parks A Car

Wednesday, 24 October 2018

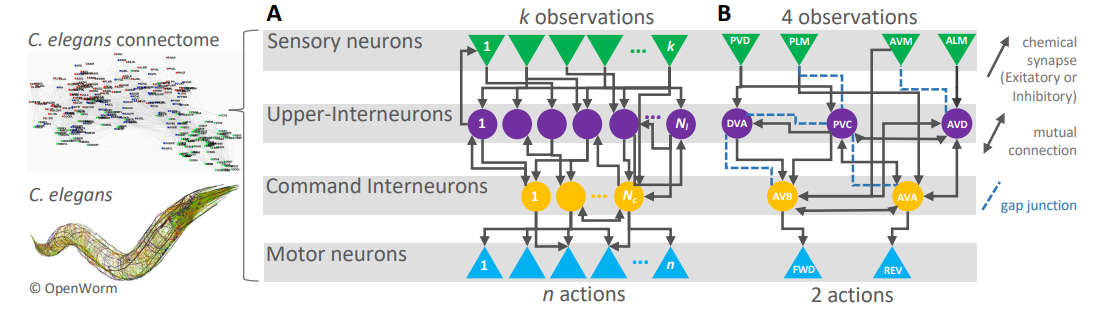

OK, the headline is a bit over the top, but does the slightly more accurate "12 neurons from a nematode worm park a car" sound any less so? C. elegans is very definitely the pin-up worm of the AI community. The nematode worm C. elegans is a very simple organism with some moderately complex behaviour. What is important about it is that we have mapped its entire neural network, and this has resulted in a number of amazing projects. First, the entire network was emulated, which made it possible to put a worm's brain into a Lego body and then into an Arduino body. More recently, a sub-net of 12 neurons was modelled which successfully implemented an inverted pendulum balancing algorithm (Worm Balances A Pole On Its Tail). All of this is amazing enough, but now we have the same 12-neuron sub-net parking a car.

The network in question is normally responsible for the tap reflex. You tap C. elegans' "nose" and it withdraws.

Figure (A): C. elegans' general neural structure

In this case the network was modified, but not in any biologically unlikely way: the network connections were allowed to vary in strength over time. The model neurons are also much more complex and subtle than the sort found in most artificial neural networks, and the behaviour of the synapse, the junction across which one neuron signals to the next, was modelled as well. The various parameters of these more realistic neurons were set using a simple reinforcement learning algorithm. This is what distinguishes the work from the earlier pole-balancing example: here the network is trained to do the job. It was tried out on three tasks, pole balancing, the classic mountain car problem, and parking, and in each case the network learned to do the job. You can see how the network improved on the pole-balancing task in the video below.
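The article does not give the equations behind these "more realistic" neurons. As a rough illustration only, the sketch below assumes a common conductance-based leaky-integrator model: each neuron's potential leaks back towards a resting value, chemical synapses inject current through a sigmoid-gated conductance, and gap junctions couple neurons electrically. The class names, the three-neuron chain, every parameter value, the target potential and the use of plain random search as a stand-in for the "simple reinforcement learning algorithm" are hypothetical; this is not the authors' code or their exact method.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Neuron:
    # Illustrative values in arbitrary units, not the paper's parameters.
    c_m: float = 1.0       # membrane capacitance
    g_leak: float = 1.0    # leak conductance
    e_leak: float = -70.0  # resting potential the neuron leaks towards
    v: float = -70.0       # membrane potential (the neuron's state)


@dataclass
class ChemicalSynapse:
    pre: int
    post: int
    w: float               # maximum conductance: the learnable "strength"
    e_rev: float           # reversal potential: near 0 excites, near -90 inhibits
    mu: float = -40.0      # presynaptic potential at half activation
    sigma: float = 0.5     # steepness of the activation curve

    def current(self, v_pre: float, v_post: float) -> float:
        # A sigmoid of the presynaptic potential gradually opens the synapse.
        g = self.w / (1.0 + math.exp(-self.sigma * (v_pre - self.mu)))
        return g * (self.e_rev - v_post)


@dataclass
class GapJunction:
    a: int
    b: int
    w: float               # electrical coupling conductance


def simulate(neurons, synapses, gaps, i_ext, steps=400, dt=0.025):
    """Forward-Euler integration of C dv/dt = leak + synaptic + external current."""
    for _ in range(steps):
        currents = [n.g_leak * (n.e_leak - n.v) + i_ext.get(k, 0.0)
                    for k, n in enumerate(neurons)]
        for s in synapses:
            currents[s.post] += s.current(neurons[s.pre].v, neurons[s.post].v)
        for g in gaps:
            # Current flows from the more depolarised neuron into the other one.
            flow = g.w * (neurons[g.a].v - neurons[g.b].v)
            currents[g.b] += flow
            currents[g.a] -= flow
        for n, i in zip(neurons, currents):
            n.v += dt * i / n.c_m
    return [n.v for n in neurons]


def build(weights):
    # Hypothetical chain: sensory 0 -> inter 1 -> motor 2, plus a 0-1 gap junction.
    neurons = [Neuron() for _ in range(3)]
    synapses = [ChemicalSynapse(0, 1, weights[0], e_rev=0.0),
                ChemicalSynapse(1, 2, weights[1], e_rev=0.0)]
    gaps = [GapJunction(0, 1, weights[2])]
    return neurons, synapses, gaps


def loss(weights, target=-40.0):
    # Squared error between the motor neuron's settled potential and a target.
    neurons, synapses, gaps = build(weights)
    v = simulate(neurons, synapses, gaps, i_ext={0: 50.0})
    return (v[2] - target) ** 2


def random_search(iterations=200, step=0.2, seed=0):
    # Perturb the strengths at random and keep a change only if the loss drops.
    rng = random.Random(seed)
    best = [0.5, 0.5, 0.5]
    best_loss = loss(best)
    for _ in range(iterations):
        candidate = [max(0.0, w + rng.gauss(0.0, step)) for w in best]
        candidate_loss = loss(candidate)
        if candidate_loss < best_loss:
            best, best_loss = candidate, candidate_loss
    return best, best_loss


if __name__ == "__main__":
    print("initial loss:", loss([0.5, 0.5, 0.5]))
    weights, final_loss = random_search()
    print("learned strengths:", weights, "final loss:", final_loss)
```

Running it prints the loss before and after the search; the exact numbers depend on the seed, so none are quoted here. Only the connection strengths are searched in this sketch. The article says the parameters of the neurons themselves were also set by learning; here those would simply become extra entries in the vector being perturbed.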

The learning was reasonably quick: 5000 iterations to learn the parking task. Other tasks seem to take longer, but there is a proof that this neural circuit, and other similar circuits, are universal approximators for any finite-time continuous dynamics, which means that in principle they can be trained to approximate any such behaviour. As the researchers put it: "We show that our neuronal circuit policies perform as good as deep neural network policies with the advantage of realizing interpretable dynamics at the cell-level."

It has to be said that achieving something this complex with just 12 neurons is remarkable. However, you also have to take into account the increased complexity of each neuron. To properly compare like with like, you would need to match the number of parameters being adjusted in the "real" neural model with the number in the much simpler neural networks that we typically use. It has long been argued that an accurate model of the way a biological neuron works may not be necessary to achieve the same results. For example, an aeroplane does not fly by flapping its wings, yet both birds and aeroplanes make use of the same principles of fluid dynamics. This is not to say that this approach to neural networks is of purely theoretical interest. If 12 neurons can learn to park a car, how many does it take to drive one?
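To make the like-for-like point concrete, the hypothetical helpers below count adjustable parameters. The dense-network count (weights plus biases) is standard; the circuit count assumes, purely for illustration, three parameters per neuron, four per chemical synapse and one per gap junction. The synapse and gap-junction totals in the usage lines are placeholders, not the real tap-withdrawal circuit's wiring.

```python
def mlp_params(layer_sizes):
    """Weights plus biases of a fully connected feed-forward network."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


def circuit_params(n_neurons, n_chemical, n_gap,
                   per_neuron=3, per_chemical=4, per_gap=1):
    """Hypothetical parameter count for a conductance-based circuit.

    Assumed per-element counts: neuron (capacitance, leak conductance,
    resting potential), chemical synapse (strength, reversal potential,
    sigmoid midpoint, sigmoid steepness), gap junction (coupling strength).
    """
    return n_neurons * per_neuron + n_chemical * per_chemical + n_gap * per_gap


# Placeholder sizes: a small policy network with two hidden layers of 64 units,
# versus a 12-neuron circuit with 20 chemical synapses and 5 gap junctions.
print(mlp_params([4, 64, 64, 1]))   # 4545
print(circuit_params(12, 20, 5))    # 121
```

Under these placeholder numbers the circuit adjusts far fewer values than the small dense network, but the gap narrows as each model neuron and synapse gains parameters; counting neurons alone hides that trade-off.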

More Information

Re-purposing Compact Neuronal Circuit Policies to Govern Reinforcement Learning Tasks

Ramin M. Hasani, Mathias Lechner, Alexander Amini, Daniela Rus and Radu Grosu; Technische Universität Wien (TU Wien), Institute of Science and Technology (IST) Austria, and Computer Science and Artificial Intelligence Lab (CSAIL), MIT

Related Articles

Worm Balances A Pole On Its Tail

A Worm's Mind In An Arduino Body

OpenWorm Building Life Cell By Cell


Last Updated (Wednesday, 24 October 2018)